How to see old Website Versions

The internet is eternal - many are certain of this. But this is by no means true for individual websites. Perhaps your own website has crashed and all its content seems to be lost. Or you wanted to visit your favourite website and it just didn’t work today. Some may also be looking for a post that they read a few days ago but that is now completely untraceable. So there are many possible reasons to look for old versions of websites. But deleted means gone forever! Or does it?

Even if the original page has been deleted, there is a way to find its contents again, because some organisations create copies of old internet sites. With the help of modern technology, they continuously collect snapshots and make these available to internet users for free. The most famous project of this kind is the Wayback Machine. This service of the Internet Archive project archives large parts of the publicly accessible World Wide Web - and has done so since 1996. In the following, we will explain how the Wayback Machine works and also present two alternatives with which you can view websites of the past.

The Internet Archive Project: Old internet sites, pictures, videos, and texts

Brewster Kahle sold his first company, the search service WAIS, to AOL in 1995 for $15 million. With this capital he founded both a new company and a non-profit organisation. The company was Alexa Internet, which he sold to Amazon.com a few years later for an impressive $250 million. As a result, he now had even more financial resources at his disposal, several million of which he put into his non-profit project: the Internet Archive.

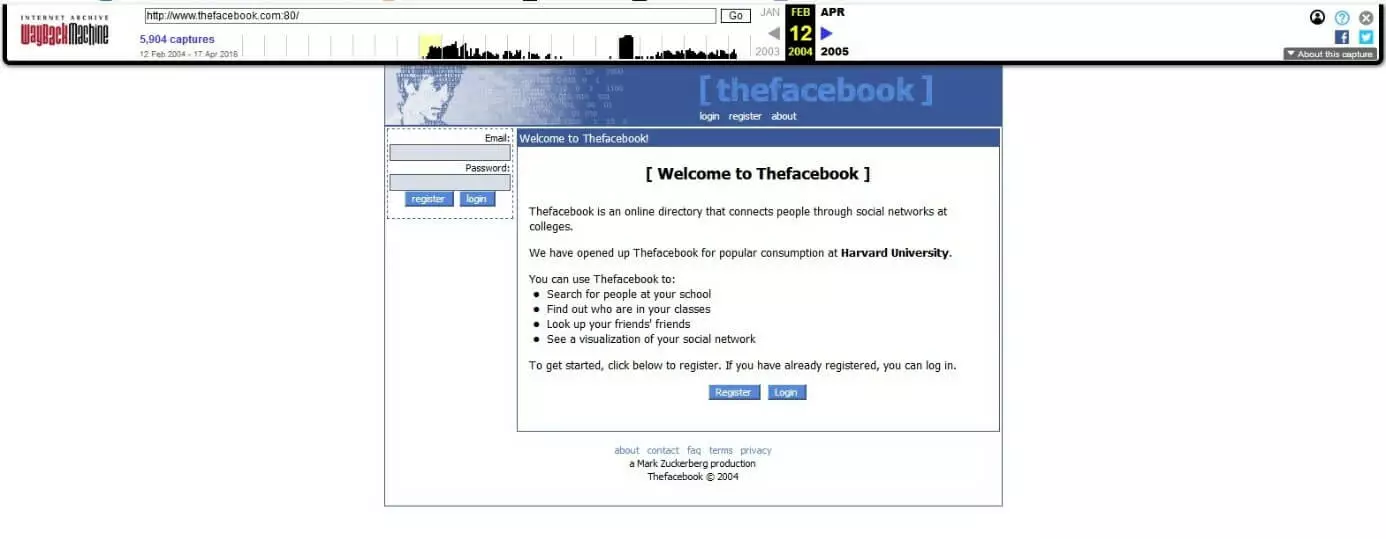

As part of this project, the so-called Wayback Machine was also developed: a web archive in which you can find snapshots of old homepages from different periods of time. The accompanying picture shows, for example, what the Facebook homepage (at that time still “Thefacebook”) looked like on 12 February 2004 - eight days after the website went online for the first time.

As the name suggests, the Internet Archive originated as a web archive. When Brewster Kahle first created the archive in 1996, he used the data from his Alexa Internet project, which collected website hits from domains across the internet. Alexa is now offered by Amazon as a marketing analysis service. Initially, popular websites were prioritised for the Internet Archive. According to a study by Forbes magazine from 2015, however, the number of snapshots of a website on archive.org (the project's website) does not always correlate with the Alexa rank or update frequency of a domain. So we’re in the dark for the time being as to exactly which selection methods the project uses.

Viewing more than just websites of the past - what else the internet archive has to offer

The Internet Archive has achieved a great deal in its twenty-year history. The website archive has become a huge virtual library in its own right. According to its own information, archive.org used a massive 18.5 petabytes of storage space for individual content in 2015 (a total of 50 petabytes, i.e. 50 quadrillion bytes) and has grown by several terabytes every week since then. According to the latest figures, you can access around 327 billion old versions of websites via the Wayback Machine. In addition, the project collects:

- Texts and books (around 16 million)

- Audio recordings (about 4.4 million, including 189,000 live recordings of concerts)

- Videos and TV productions (about 5.8 million, of which about 1.6 million are news records)

- Images (around 3.1 million)

- Software programs (around 209,000)

(Updated: April 2018)

A lot of the content comes from universities, government organisations such as NASA, text digitisation projects such as Project Gutenberg or arXiv, as well as film and audio collections like the Prelinger Collection or the live music archive Etree.

Brewster Kahle is a net activist who is committed not only to a free internet, but to freely accessible knowledge in general. He was one of the most prominent opponents of the so-called “Mickey Mouse Protection Act” (the actual name is the “Copyright Term Extension Act”), which was supported by Disney. This law extended copyright terms in the United States: since then, works have been protected by copyright for up to 70 years (and not - as before - 50 years) after the death of the author or creator. According to Kahle, such lengthy property rights would only benefit the richest companies, while the works would remain unusable by the general public.

In 2007, the State of California officially recognized the Internet Archive as a library. One of the many data centres that store backup copies of the archive is located in the Bibliotheca Alexandrina, newly opened in 2002 under UNESCO patronage.

The subsidiary website archive-it.org works with numerous scientific organisations that want to digitally archive their collections.

Find old versions of websites: Reasons to archive

The internet is constantly changing. Faster data transmission creates new services, while others are becoming obsolete and forgotten. New information often replaces the old or outdated, especially in news portals and other frequently updated websites. The older articles and web pages are, the less likely they are to be visible. Nevertheless, users often wish to be able to view past versions of a website. This wish can come from pure nostalgia, for example if you ask yourself what you wrote in your Myspace profile way back when. However, there are also economic or legal reasons to track down old versions of websites:

- Your site is unexpectedly offline: Perhaps the hosting service is experiencing technical problems, or the money for the monthly fees was not transferred. You can probably find the lost content in a web page archive.

- You are a journalist, blogger or scientist working on an article: The fastest way to find important sources is via the internet. However, if the source pages you link to change, your readers won’t be able to find the information they need, or the quotations you’re using will no longer match the content of the linked page. If you quote the source with a snapshot and timestamp, your readers can always trace the source.

- You work in SEO and use the link power of older domains: You can use archive tools to remove incorrect links or adjust page changes. Some SEO experts also improve their ranking with archived content in private blog networks.

- You need legal evidence: When it comes to insults or threats on the net, screenshots help to document personal attacks. If the texts have already been deleted by the originator, simply use an earlier version of the website to collect your evidence. Furthermore, the documentation of work processes via an archive can also be useful in patent disputes.
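
For the journalist or researcher case in the list above, a citation with snapshot and timestamp can be put together in a few lines. The following is a minimal Python sketch, assuming the publicly visible Wayback URL pattern `https://web.archive.org/web/<14-digit timestamp>/<original URL>` and assuming that the timestamp is given in UTC. The function names and the citation layout are purely illustrative and not part of any official API.

```python
from datetime import datetime, timezone

# Assumed public URL prefix of Wayback Machine snapshots.
WAYBACK_PREFIX = "https://web.archive.org/web"


def snapshot_url(original_url: str, captured_at: datetime) -> str:
    """Build a snapshot URL from the original URL and a capture time."""
    # Convert to UTC and format as YYYYMMDDhhmmss (14 digits).
    stamp = captured_at.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
    return f"{WAYBACK_PREFIX}/{stamp}/{original_url}"


def cite(title: str, original_url: str, captured_at: datetime) -> str:
    """Format a simple source reference (illustrative layout only)."""
    day = captured_at.astimezone(timezone.utc).strftime("%Y-%m-%d")
    archived = snapshot_url(original_url, captured_at)
    return f"{title}. {original_url} (archived {day}: {archived})"


if __name__ == "__main__":
    when = datetime(2004, 2, 12, 0, 0, 0, tzinfo=timezone.utc)
    print(cite("Thefacebook", "http://www.thefacebook.com/", when))
```

Whether a snapshot actually exists for the chosen time has to be checked in the archive itself; the sketch only assembles the link.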

The Wayback Machine Tutorial: find old versions of websites in 3 steps

Are you running a website and you are missing a backup copy? Save lost content by finding screenshots of your old homepage via archive.org. Old websites can be found in just three steps.

A snapshot always captures something at a certain point in time. It describes the state of a system or object - such as a website - at that moment. Connections between subareas remain, but the captured state no longer changes. Therefore, it is possible to navigate through old web pages on archive.org, but dynamic elements such as forms lose their function in a snapshot.

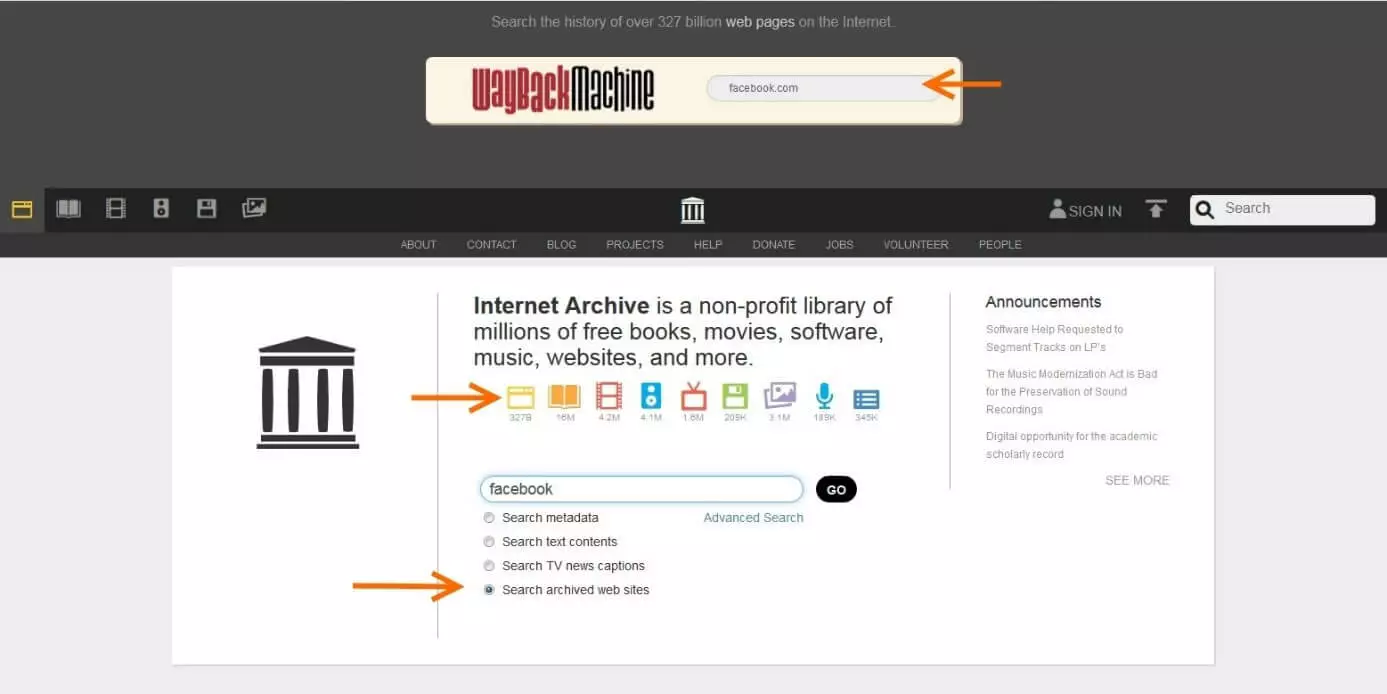

- Enter archive.org in your browser's address bar. The Wayback Machine provides three possibilities to view old versions of websites:

  - Enter the URL you want to find directly into the upper Wayback Machine search bar, as shown in the image below. Press the Enter key to go directly to the results page.

  - Click on the yellow web icon to get to the Wayback main page. There you can enter a domain URL or try out other functions. To access an archived website, enter the URL and click on “browse history.”

  - Enter a search term in the search bar below and select “search archived web sites”. Click on “go” to see the list of domains and website descriptions that contain the search term. The individual entries show the domain name, the description and the number of snapshots in a certain period. You also receive information about the number of media files captured. Click on the result you want.

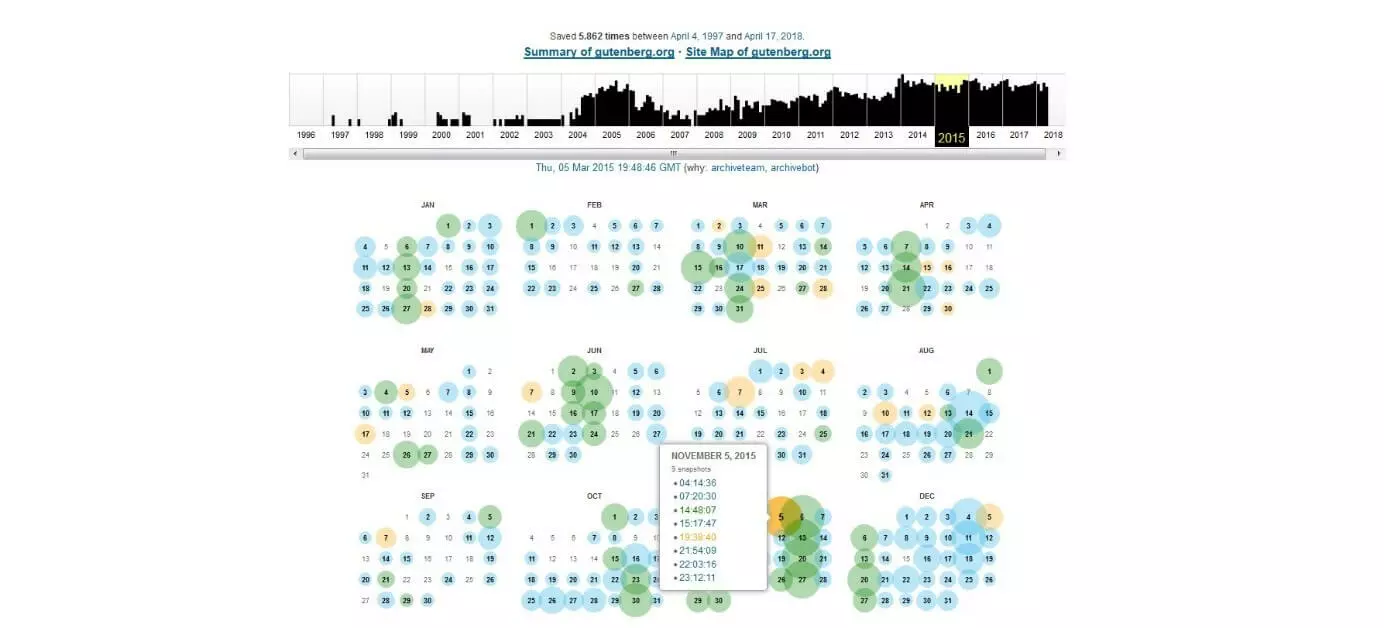

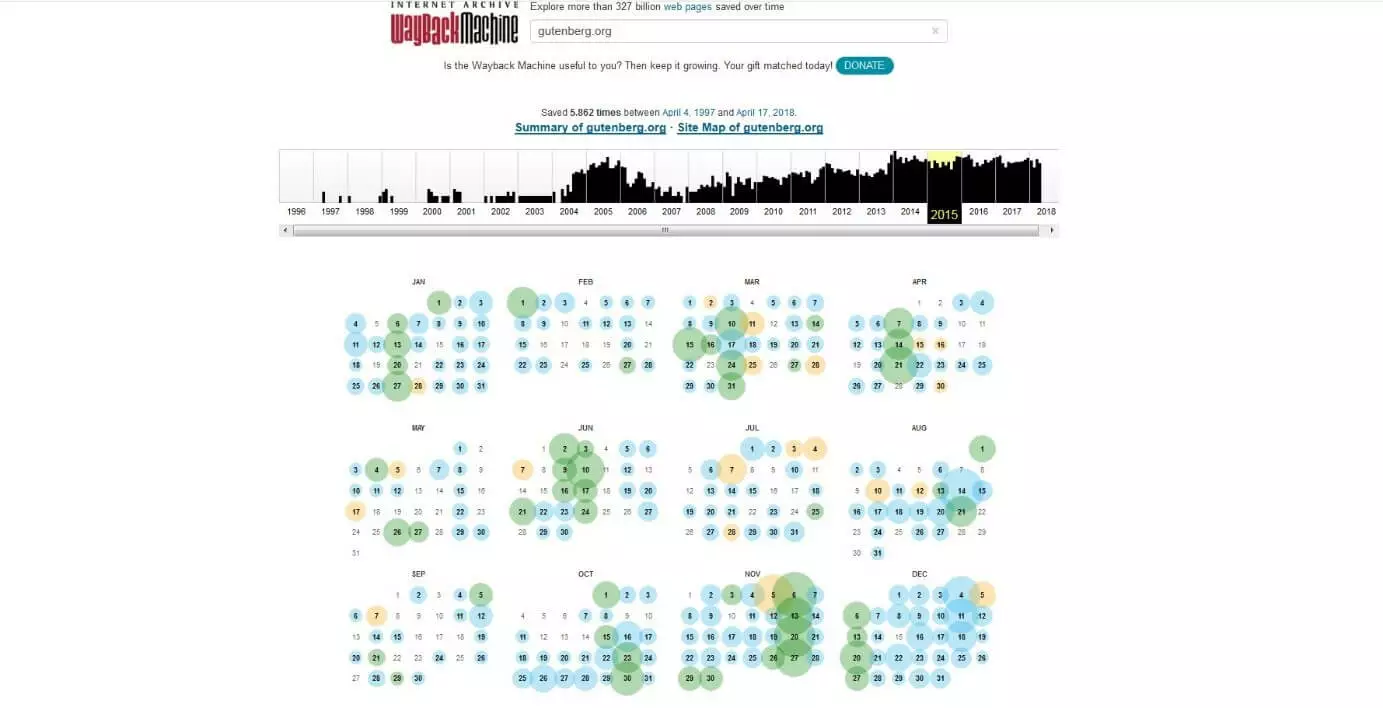

- On the main page for the URL you entered (gutenberg.org in the example below) you will see a timeline. This forms the lower axis of a diagram, in which a black column is assigned to each date. The height of each column in the bar chart indicates how often the Wayback Machine crawlers scanned the domain on that date. If no column is visible, no screenshots were taken at all on that date. In 2007, for example, there were very few snapshots per month. The noticeable gap indicates that no snapshot was taken in November. The size of the circles in the calendar sheet shows how often the crawlers recorded the old internet page on the day in question. The key is as follows:

- Blue for a successful crawl of the webpage

- Green for redirects

- Orange for a URL not found (error 4xx)

- Red for a server error (error 5xx)
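
The colour key above follows the usual HTTP status code classes. As a rough illustration, the mapping can be written as a small Python function. This is only a sketch of the key as described here, not the Wayback Machine's own code; the function name is hypothetical, and the two sample captures reuse the timestamps mentioned in this tutorial.

```python
def capture_colour(status_code: int) -> str:
    """Map an HTTP status code to the calendar colour from the key."""
    if 200 <= status_code <= 299:
        return "blue"    # successful crawl
    if 300 <= status_code <= 399:
        return "green"   # redirect
    if 400 <= status_code <= 499:
        return "orange"  # URL not found or not accessible (4xx)
    if 500 <= status_code <= 599:
        return "red"     # server error (5xx)
    raise ValueError(f"unexpected status code: {status_code}")


# Sample captures for one day: (time of capture, HTTP status).
captures = [("19:38:40", 403), ("21:54:09", 200)]

if __name__ == "__main__":
    for time_of_day, status in captures:
        print(time_of_day, status, capture_colour(status))
```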


- Select a day when the old web page was recorded in a screenshot. Records only exist for the days with a coloured circle. Click directly on the date to see the snapshot of the page. If you hold the mouse pointer over the date, the different timestamps appear (as shown in the picture below) – these show the exact times a snapshot was taken.

Clicking on the timestamp takes you to the screenshot of the archived website, which shows how the website looked at the specified time. For example, timestamp 19:38:40 (orange) causes error 403, while timestamp 21:54:09 displays the entire page.
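
The timestamp is also visible in the address of the archived page. The following Python sketch reads it back out of such an address. It assumes the URL pattern `https://web.archive.org/web/<14-digit timestamp>/<original URL>` and assumes the timestamp is in UTC; the function name and the regular expression are illustrative only.

```python
import re
from datetime import datetime, timezone
from typing import Tuple

# 14-digit timestamp, optionally followed by a short modifier such as "id_".
SNAPSHOT_RE = re.compile(
    r"^https?://web\.archive\.org/web/(\d{14})[a-z_]*/(.+)$"
)


def parse_snapshot_url(url: str) -> Tuple[datetime, str]:
    """Return (capture time, original URL) for a snapshot address."""
    match = SNAPSHOT_RE.match(url)
    if match is None:
        raise ValueError(f"not a recognised snapshot URL: {url}")
    captured = datetime.strptime(match.group(1), "%Y%m%d%H%M%S")
    # Assumption: archive timestamps are expressed in UTC.
    return captured.replace(tzinfo=timezone.utc), match.group(2)


if __name__ == "__main__":
    captured, original = parse_snapshot_url(
        "https://web.archive.org/web/20180424215409/https://archive.org/"
    )
    print(captured.isoformat(), original)
```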

Within the archived website you navigate as usual via links to sub-pages. Texts can be copied easily. If you also want to save layout and design, screenshots are also possible.

The name Wayback Machine is inspired by an American cartoon from the 60s. In "Mr Peabody's Improbable History", the characters Mr. Peabody and Sherman travel through history with a time machine they call the "WABAC Machine".

The options “summary of...” and “site map of...” (in the upper picture directly above the timeline) offer more possibilities. The summary shows how many code files, images and flash files the crawlers found. The sitemap, on the other hand, displays the entire domain as a circle. A circle section stands for a web page that you can access directly with one click.

View websites of the past later thanks to the self-snapshot feature

Do you run a website or blog or publish your work through a third party? Then you can use the Wayback Machine to back up your content. The Wayback algorithm does not automatically cover the entire internet. There are several reasons why archive.org does not archive some web pages or does not display certain content:

- The site operator does not allow the website to be indexed (directive: noindex).

- The robots.txt specifies that the website or parts of it should not be indexed.

- The website is password protected.

- The site operator has personally asked to remove the website from the archive.

- Dynamic elements are a large part of the page and they are not displayed correctly.
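
One of the reasons listed above, the robots.txt rule, can be checked with Python's standard library. The sketch below parses a sample robots.txt and asks whether a given crawler may fetch a URL. The user agent name `ia_archiver` and the sample rules are assumptions made for illustration; which user agents the archive crawlers actually send, and how strictly they follow robots.txt, should be verified against the Internet Archive's own documentation. The `noindex` case is not covered here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: ia_archiver
Disallow: /private/

User-agent: *
Disallow:
"""


def crawler_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/", "/private/page.html"):
        url = "https://example.com" + path
        print(url, crawler_allowed(SAMPLE_ROBOTS_TXT, "ia_archiver", url))
```

To test a live site, the text of its robots.txt file would be passed in instead of the sample string.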

So if you want to archive your own website, you have to make sure that the archive crawlers can read the domain. To do this, look at the following guide:

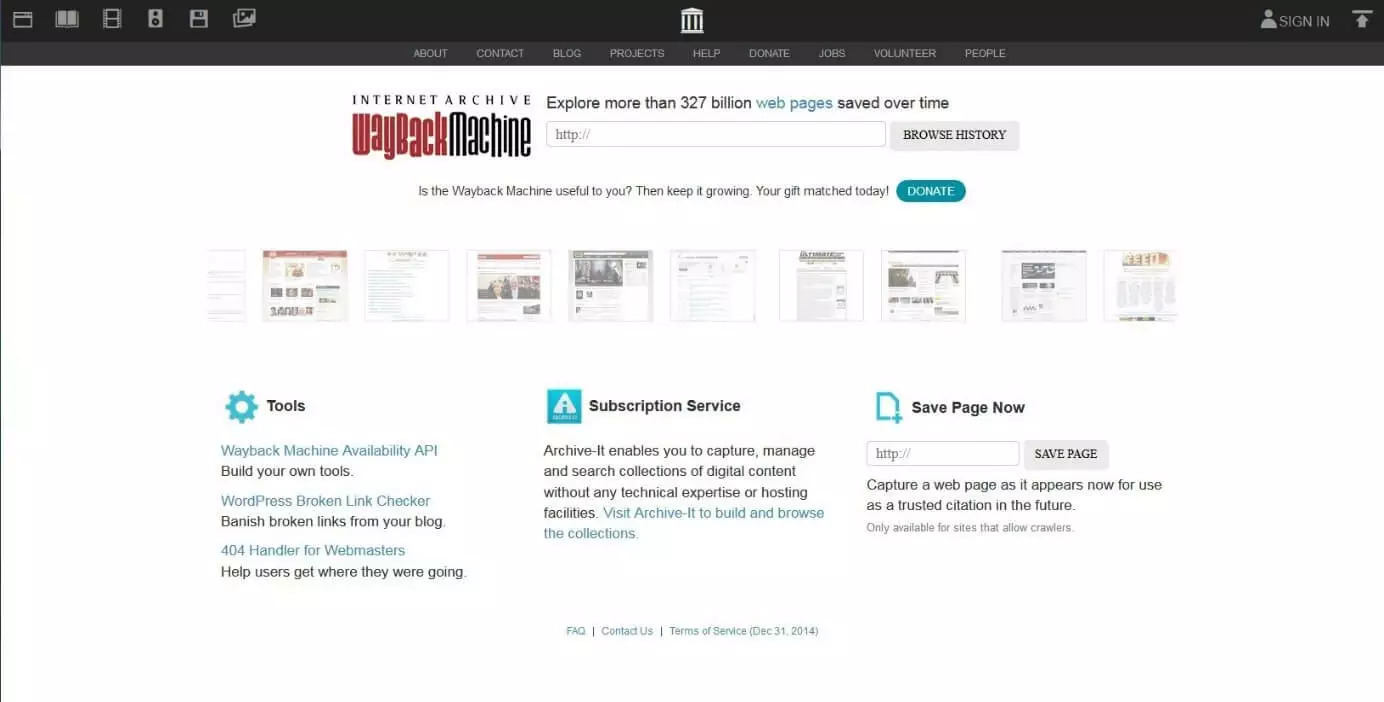

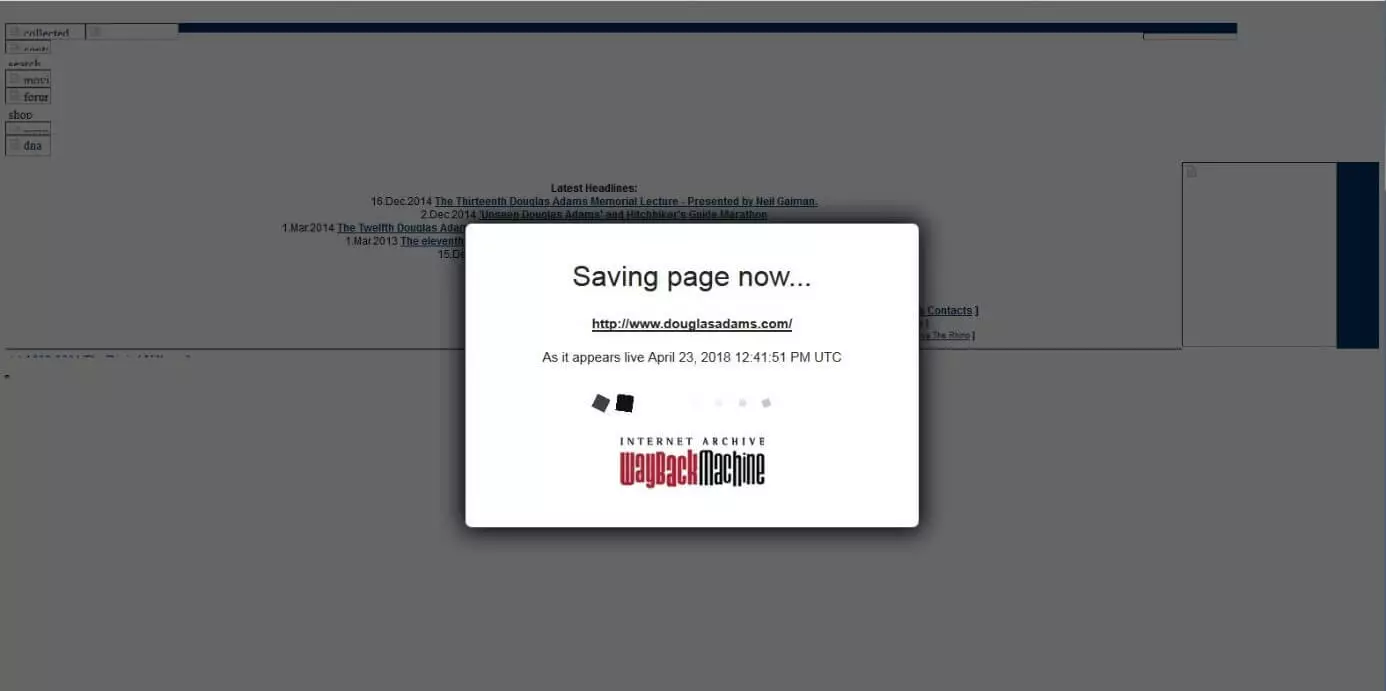

- Visit the Wayback-Machine main page again (shown below). A scroll bar shows you a website in the past that might be of interest to visitors. Below you will find helpful tools, the subscription service for scientific institutions and the Save-Page-Now tool.

- If you want to take a snapshot of a website, you only need to know the domain URL. Now enter it in the input field under “save page now”. The domain address in simple form is sufficient. For the example in the picture below this would be: “douglasadams.com”.

- The small window “saving page now...” pops up in front of the loading website. Once the process is complete, you will see a snapshot of your website. Now you have secured all contents and links for the future.
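
The Save-Page-Now step can also be reached through a direct address. The following Python sketch only builds that address from a domain in simple form; it assumes the publicly visible pattern `https://web.archive.org/save/<URL>` and does not send any request itself. The function name is hypothetical, and limits or login requirements of the service are not considered.

```python
# Assumed prefix of the Save-Page-Now address.
SAVE_PREFIX = "https://web.archive.org/save/"


def save_page_now_url(target: str) -> str:
    """Build the Save-Page-Now address for a domain or full URL."""
    target = target.strip()
    if not target:
        raise ValueError("no URL given")
    # A domain in simple form (e.g. "douglasadams.com") gets a scheme added.
    if "://" not in target:
        target = "http://" + target
    return SAVE_PREFIX + target


if __name__ == "__main__":
    print(save_page_now_url("douglasadams.com"))
```

Opening the resulting address in a browser should lead to the same capture dialogue as the input field on the main page.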

Since the archive crawlers are often unaware of smaller, less well-known websites, it is especially worthwhile for the operators of such sites to take regular snapshots themselves.

Wayback machine downloader for restoring old web pages

With the assistance of the Wayback Machine, you’ll be able to access old web pages that are no longer accessible via their previous URL. This way you can at least find and save the text content of the page you need. But sometimes you need more than just an old article text. Sometimes the problem is bigger. Maybe the page doesn't exist anymore and the back-up doesn't help either. Perhaps you want to download the entire website to edit or save the source code, filter out broken links, or test the old version of your website for SEO optimisation. This is all possible with a Wayback-Machine-Downloader.

There is an open source downloader, namely the Wayback Machine Downloader on GitHub. You should install Ruby first. But you don't have to be a Ruby professional to use the program. The developers list the most important code commands directly on the download page. Enter the required URL and the program will download the corresponding files to your computer. It automatically creates index.html pages that are compatible with Apache and NGINX. Advanced users can define the settings for timestamps, URL filters and snapshots in more detail.

The web-based tool Archivarix is suitable for small websites or blogs thanks to its clearly structured user interface. The service is free of charge for websites with fewer than 200 files; larger sites are subject to a fee. You must register to use Archivarix. Then simply enter the desired domain and define the optimisation options and link structures with a few clicks. Finally, enter your e-mail address. Once the download from the website archive is complete, Archivarix sends a zip file to this address.

Archive.org itself does not offer a website downloader. However, as a library member, i.e. a logged-in user, you can download millions of texts, images and audio files. If you own the rights to something, you can upload it for public non-commercial use, as NASA does with much of its audio and visual material. For example, NASA archived a video filmed from the ISS as a public work under a Creative Commons licence.

The team of the Open Library project wants to categorise books as comprehensively as possible. It also allows users to borrow many books - some of which are hosted by third parties - for two weeks. In a separate category you will find e-books and texts from the Internet Archive. These can usually be downloaded free of charge.

"It's not that expensive. For the cost of 60 miles of highway, we can have a 10 million-book digital library available to a generation that is growing up reading on-screen. Our job is to put the best works of humankind within reach of that generation." (Brewster Kahle: How Google Threatens Books, Washington Post, 5/2009)

Alternative 1: Find websites that are not quite as old - with Google search

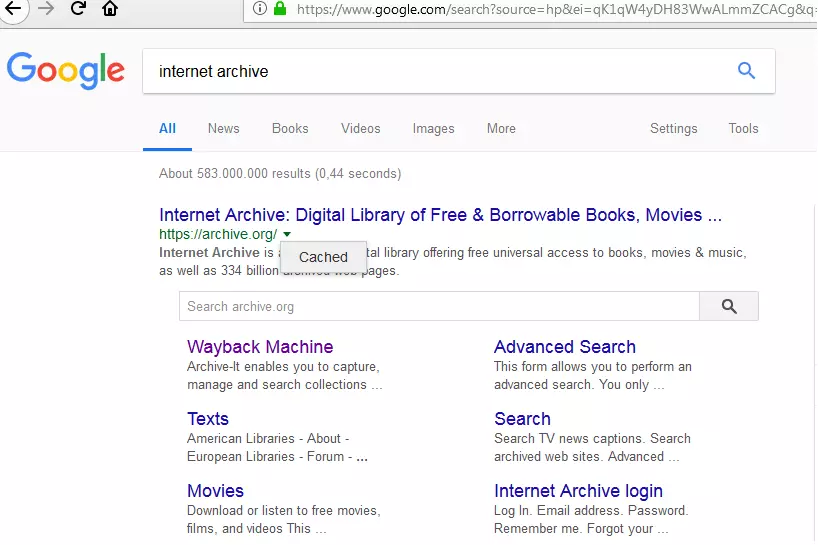

Is the information you are looking for not all that old? Then a simple Google search may help: Just like the Wayback Machine, Google uses crawlers to scan and index websites. To do this, Google takes a snapshot of the entire website. If this has changed since the last crawl, Google caches the snapshot of the old web page version. The new snapshot serves as a current preview. If the live website fails for a short time, there will be no bottlenecks, as there is still a version in the cache. So there is only one timestamp of the cached page. However, this can be more up-to-date than a snapshot of the Internet archive. If archive.org does not have an old version of a website of this domain, Google may even be the only way to find a screenshot of the site.

To view the latest version of your website, simply enter it as a search term on Google. The URL should appear under the title of the page in the results list. If you click on the arrow to the right, a small drop-down menu appears (as shown in the picture below). If you click on “in cache”, Google loads the website for you in the version before its last update.

Sometimes it happens that the current version of a page is not listed in Google's results list. This can happen if the site operators have set the domain to "noindex". This indicates that the search engine should not include the page in its collection. However, you may still find an old version of the website in the cache. If you want to visit an old version of a website but can't find it in the search results, enter the following in your address bar:

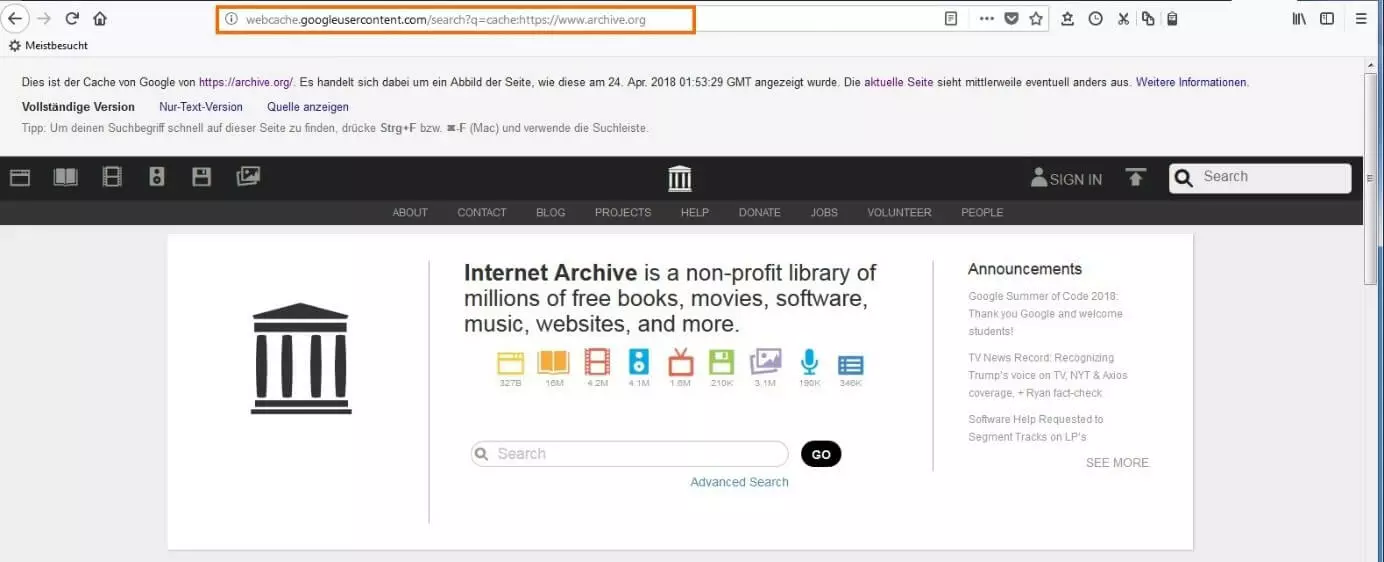

http://webcache.googleusercontent.com/search?q=cache:https://www.DOMAIN.com

In the above example, “DOMAIN.com” is a placeholder for the URL you are looking for. The image below shows the cache version of archive.org as Google captured the website on April 24, 2018. Note that even Google snapshots do not display dynamic elements and media content for the most part.

Alternative 2: Finding references to old websites with WebCite

Journalists, bloggers and academics are increasingly using online sources. And just as you list your sources in a bibliography for scientific print publications, many online texts also contain references. These are usually in the form of links that lead directly to the internet source used. However, since web pages can change or be removed from the web, there is a risk that these links will no longer lead to the appropriate texts. If readers follow an outdated link, they may see something completely different from what the author found at the time of research. To prevent this, the organisation WebCite® offers an archive service. This allows you to save sources as snapshots and, at the same time, generate source information that your readers can use. With the help of a target link or the snapshot ID, they can then directly view the source.

How to archive your sources with WebCite:

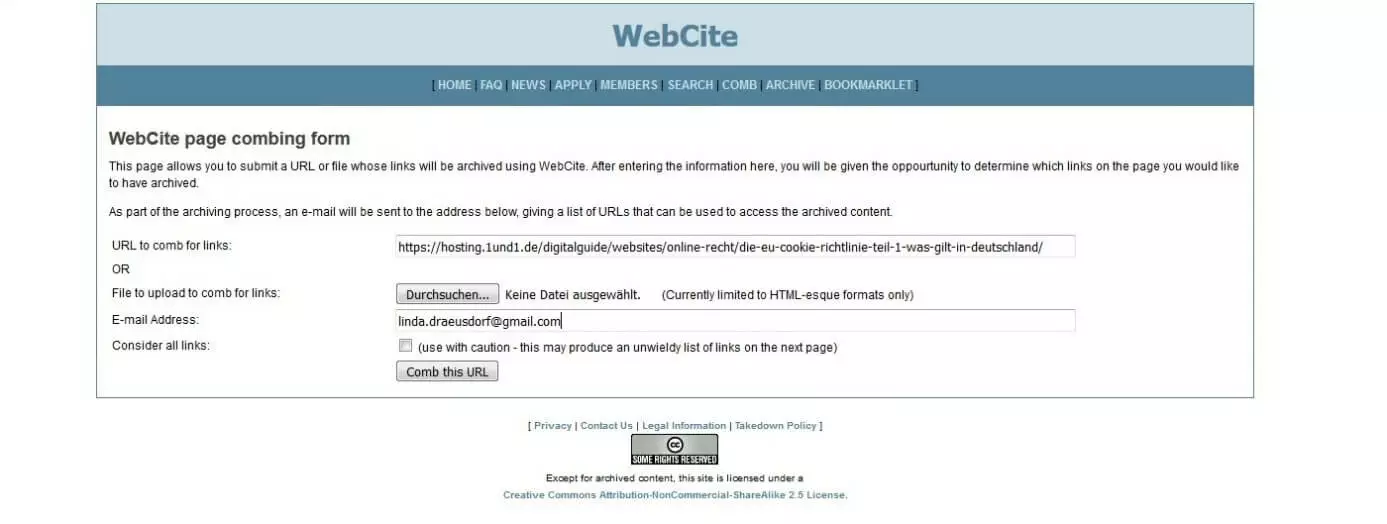

- On the WebCite main page, directly under the domain name, you will find the main menu. Select the tab “comb”.

- The form for archiving (“archive form”) appears. If your document is already on the web, enter the URL - as shown in the picture below - in the first search field (“URL to comb for links”). If the text has not yet been uploaded, but the references with links already exist, simply upload the file. To do so, click on “browse”. Enter your email address and WebCite will send you a list of the archived snapshot URLs later. Click on “comb this URL”.

- After a short wait, the website displays a list of possible links. Select your sources by checking the box next to them. Click on the button "Cache these URLs" at the end of the list.

- The message that your sources are in the queue for archiving now appears in the window. Besides the original link, you will also receive the link to your snapshot. Simply include this in your source citation. This allows your readers to access the same version of your source that you used for your work - even years later, when the old website no longer exists.

If you publish your texts on a platform with many outgoing links, the WebCite crawlers will include them in their selection. This list therefore quickly becomes unmanageable. In this case, we recommend uploading the document directly from your hard disk.

If you only want to archive one source or your own work, simply use the archive tool for this purpose. To do this, click on the “archives” tab in the main menu. In the form for single sources, enter the URL of the source to be cited as well as your e-mail address and the archiving language. When you fill in the metadata (title, author, etc.), WebCite creates a reference. If metadata already exists on the webpage, the program can also add it. Click on “submit”. You will then receive an email with the snapshot link and the source.

This allows you to specify an old web page as an unchangeable source:

- Click on the “search” tab in the main menu. The search form appears.

- To search directly for old versions of websites, enter the domain URL in the first input field (next to “URL to find snapshots of”) as shown in the image below. Below this, enter the timestamp in the notation YYYYMM (Y=Year, M=Month). If you do not do this, you will be directed to the cached domain by clicking on “search”, but the WebCite header with which you can jump between timestamps is missing.

- Instead of searching by URL, you can specify the snapshot ID directly to go to a version of the website saved at a particular time.

Those who have already searched in vain for an old version of a website will appreciate the presented tools. The Wayback Machine is probably the most comprehensive web site archive. Its user-friendly interface makes it easy even for inexperienced users to view or archive websites in the past. If you're looking for recently lost web pages, the Google cache can help. WebCite, on the other hand, relies on a verification process before web pages are added to the archive. This service is very well suited for academic texts that require comprehensive source references.