What is a convolutional neural network (CNN)?

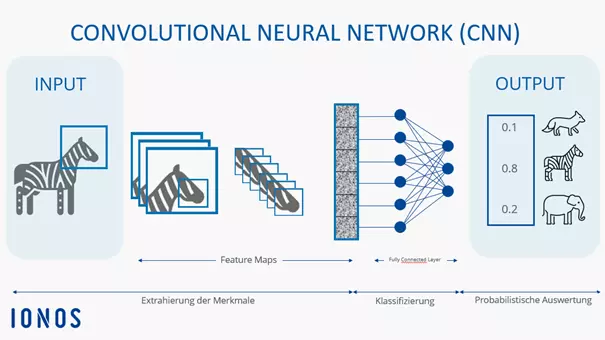

Convolutional neural networks (ConvNets or CNNs) are artificial neural networks whose convolutional layers extract features from input data, for example to identify an object in an image. ConvNets are a core building block of deep learning.

What are convolutional neural networks (CNN)?

A convolutional neural network is a specialised type of artificial neural network that is particularly effective at processing and analysing visual data such as images and videos. CNNs play a crucial role in machine learning, especially in deep learning, a subset of machine learning.

Like other neural networks, ConvNets consist of layers of nodes: an input layer, one or more hidden layers and an output layer. The nodes are interconnected; each connection has a weight, and each node has a threshold. If the output of a node exceeds its threshold, the node activates and passes data on to the next layer of the network; otherwise, it passes nothing on.
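To make this concrete, here is a minimal Python sketch of a single node with a hard threshold (a step function). The inputs, weights and thresholds are arbitrary illustrative numbers; real networks usually use smoother activation functions and learn their weights during training.

```python
# A single artificial node: a weighted sum of its inputs, compared with a threshold.
# All numbers below are made-up illustrative values.

def node_output(inputs, weights, threshold):
    """Return 1 (the node activates and passes data on) if the weighted sum
    exceeds the threshold, otherwise 0 (nothing is passed on)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0

inputs = [0.5, 0.8, 0.2]
weights = [0.4, 0.7, -0.3]
# Weighted sum: 0.5*0.4 + 0.8*0.7 + 0.2*(-0.3) = 0.70
print(node_output(inputs, weights, threshold=0.6))  # → 1
print(node_output(inputs, weights, threshold=0.9))  # → 0
```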

Different types of neural networks are used for different types of data and use cases. For example, recurrent neural networks are often used for processing natural language and speech recognition, while convolutional neural networks (CNNs) are more commonly employed for classification and computer vision tasks. The ability of neural networks to recognise complex patterns in data makes them a significant tool in artificial intelligence.

How do convolutional neural networks work?

ConvNets stand out from other neural networks through their superior performance in processing image, speech and audio signals. They are built from three main types of layers: convolutional layers, pooling layers and fully-connected layers. With each successive layer, the CNN's representation becomes more complex, so that it can identify larger parts of an image, for example.

How the ConvNet algorithm processes images

Computers represent images as numbers, or more specifically, as pixel values, and CNNs work with the same representation. For example, a greyscale (black-and-white) image that is m pixels high and n pixels wide is represented as a 2-dimensional array of size m × n, in which each cell contains the corresponding pixel value. A colour image of the same size is represented as a 3-dimensional array of size m × n × 3: each pixel has three values, one for each of the red, green and blue channels.
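The following minimal Python sketch shows this representation with nested lists. The tiny 2 × 3 image and its pixel values are invented for illustration, and 8-bit values from 0 to 255 are assumed; real code would typically use an array library instead.

```python
# Images as arrays of pixel values (here m = 2 rows, n = 3 columns).
# 8-bit values are assumed: 0 is dark, 255 is bright.

# Greyscale image: an m x n array, one intensity value per pixel.
grey = [
    [0, 128, 255],
    [64, 192, 32],
]

# Colour image of the same size: an m x n x 3 array, one [R, G, B] triple per pixel.
colour = [
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],
    [[255, 255, 0], [0, 0, 0], [255, 255, 255]],
]

def shape(array):
    """Return the dimensions of a regular nested list, e.g. (2, 3) or (2, 3, 3)."""
    dims = []
    while isinstance(array, list):
        dims.append(len(array))
        array = array[0]
    return tuple(dims)

def channel(image, c):
    """Extract one colour channel (0 = red, 1 = green, 2 = blue) as an m x n array."""
    return [[pixel[c] for pixel in row] for row in image]

print(shape(grey))         # → (2, 3)
print(shape(colour))       # → (2, 3, 3)
print(channel(colour, 0))  # → [[255, 0, 0], [255, 0, 255]]
```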

Next, the most important features of the image are identified and extracted using an operation known as convolution. Mathematically, a convolution combines two functions into a third, expressing how one function modifies the shape of the other. Sharpening, smoothing and enhancement are common uses of convolution in image processing. In CNNs, however, convolutions are used to extract significant features from images.
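For digital images, convolution is a discrete operation. In the form used by most CNN implementations (strictly speaking a cross-correlation, because the kernel is not flipped), an input image $I$ and a kernel $K$ of size $k_h \times k_w$ produce the value of the feature map $S$ at position $(i, j)$ as follows:

$$
S(i, j) = \sum_{u=0}^{k_h - 1} \sum_{v=0}^{k_w - 1} I(i + u,\, j + v)\, K(u, v)
$$

With no padding and a stride of 1, an $m \times n$ input therefore yields a feature map of size $(m - k_h + 1) \times (n - k_w + 1)$, which is why the output is smaller than the input.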

A filter, or kernel, is used to extract key features from an image. A filter is a small array of weights that represents a specific feature to be extracted. The filter slides across the input array; at each position, the filter values are multiplied element-wise by the pixel values beneath them and the products are summed. The result is a two-dimensional array, known as a feature map, that shows where and how strongly the feature appears in the image.
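The sliding-window computation can be sketched in plain Python. This is a minimal illustration that assumes a stride of 1, no padding and an unflipped kernel; the 5 × 5 image and the hand-picked vertical-edge filter are invented for the example, whereas a real CNN learns its filter values during training.

```python
# Slide a filter over a greyscale image to produce a feature map.
# Assumptions: stride 1, no padding, kernel not flipped.

def convolve2d(image, kernel):
    """Return the feature map obtained by sliding `kernel` over `image`."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the patch at (i, j).
            total = 0
            for u in range(kh):
                for v in range(kw):
                    total += image[i + u][j + v] * kernel[u][v]
            row.append(total)
        feature_map.append(row)
    return feature_map

# A 5 x 5 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 9, 9] for _ in range(5)]

# A hand-picked vertical-edge filter (illustrative only).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # each of the three rows prints [0, 27, 27]
```

The feature map is zero where the filter covers a uniform area and large where it overlaps the dark-to-bright edge, which is exactly the "where and how strongly" information a feature map records.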

Characteristics of the different CNN layers

By applying filters, the convolution process transforms the input into a smaller representation that preserves the spatial relationships between pixels. Below, we take a look at the three main types of layers in a CNN.

- Convolutional layer: This is the core building block of a CNN and typically the first layer after the input. Using filters (small weight matrices) that slide over the image, it recognises local features such as edges, corners and textures. Each filter creates a feature map that highlights a specific pattern. Several convolutional layers can be stacked to create a hierarchical structure, in which each subsequent layer processes the output of the previous one and therefore covers a larger receptive field of the original image.

- Pooling layer: This layer reduces the size of the feature maps by summarising small local areas, for example by keeping only the maximum value (max pooling) or the average value (average pooling) of each area. This lowers computational complexity while retaining the most important information.

- Fully-connected layer: As in a traditional neural network, every neuron in this layer is connected to every neuron in the previous layer. This layer makes the final classification by combining the extracted features to identify the object in an image.
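Max pooling, one common way of summarising local areas, can be sketched as follows. The 2 × 2 window, the stride of 2 and the feature map values are assumptions chosen for illustration.

```python
# 2 x 2 max pooling with stride 2: each non-overlapping 2 x 2 window of the
# feature map is summarised by its largest value, halving height and width.

def max_pool(feature_map, size=2, stride=2):
    """Downsample a 2-D feature map by taking the maximum of each window."""
    out = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + u][j + v] for u in range(size) for v in range(size)]
            row.append(max(window))
        out.append(row)
    return out

feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 3, 2],
]
print(max_pool(feature_map))  # → [[6, 2], [2, 7]]
```

Average pooling would replace `max(window)` with the mean of the window; either way, the 4 × 4 map shrinks to 2 × 2 while the strongest responses survive.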

A more detailed look at the convolution process

Imagine you are trying to determine whether an image contains a human face. You can think of the face as the sum of its parts: two eyes, a nose, a mouth, two ears and so on. The convolution process builds up the face from such parts as follows.

- First convolutional layers: The first convolutional layers use filters to recognise simple features in small groups of neighbouring pixels. For example, a filter might recognise a vertical edge that forms part of the outline of an eye. As described above, each filter produces a feature map that records where its pattern occurs. In this case, the feature maps might capture the edges of the eyes, the nose and the mouth.

- Additional convolutional layers: The first convolutional layers can be followed by further convolutional layers or by pooling layers. Subsequent convolutional layers combine simple features into more complex patterns, which gradually form a face. For example, edges and corners can be combined into shapes such as eyes. Because deeper layers see larger areas of the image (larger receptive fields), they recognise composite structures; this layered build-up is known as a feature hierarchy. A layer further along the network could recognise that two eyes and a mouth arranged in a certain way form a face.

- Pooling layers: These reduce the size of the feature maps and further abstract the features. This reduces the amount of data that needs to be processed while still retaining the essential features.

- Fully-connected layer: The last layer of the ConvNet is the fully-connected layer. It combines all the extracted features to make the final decision, in this example whether the image contains a human face. Thanks to the features extracted by the convolutional layers, a suitably trained network can even distinguish one face from another.
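The final step can be sketched as a small fully-connected layer that turns pooled feature maps into class probabilities. Everything here is a hypothetical illustration: the two classes, the feature values, the weights and the biases are invented, and the softmax function used to turn scores into probabilities is a common choice for classification outputs that the text above does not prescribe.

```python
import math

# A fully-connected layer: every output neuron is connected to every input feature.
# All numbers are made up; a trained CNN would learn weights and biases from labelled data.

def flatten(feature_maps):
    """Turn a list of 2-D feature maps into one flat feature vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]

def dense(features, weights, biases):
    """One score per output neuron: a weighted sum over all features plus a bias."""
    return [sum(w * x for w, x in zip(neuron_weights, features)) + b
            for neuron_weights, b in zip(weights, biases)]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Two pooled 2 x 2 feature maps, e.g. from hypothetical "eye" and "mouth" detectors.
pooled = [[[6, 2], [2, 7]], [[1, 0], [0, 5]]]
features = flatten(pooled)  # 8 values

# Two output neurons (hypothetical classes): "face" and "no face".
weights = [
    [0.3, 0.1, 0.1, 0.3, 0.2, 0.0, 0.0, 0.4],      # face
    [-0.2, 0.1, 0.1, -0.2, -0.1, 0.0, 0.0, -0.3],  # no face
]
biases = [-1.0, 1.0]

probs = softmax(dense(features, weights, biases))
print([round(p, 3) for p in probs])  # the "face" probability dominates
```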

In addition, dropout and other regularisation techniques help prevent CNNs from overfitting to their training data. Activation functions such as ReLU (Rectified Linear Unit) introduce non-linearity: without them, consecutive convolutional or fully-connected layers could be combined into a single linear operation, so non-linear activations are what allow the network to learn more complex patterns. Finally, batch normalisation stabilises and speeds up training by normalising the inputs of each layer across a mini-batch so that they have a consistent mean and variance.
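ReLU and dropout are simple enough to sketch directly. The dropout function below uses "inverted" dropout, a common formulation that rescales the surviving values during training; the activation values and the 50% dropout rate are illustrative assumptions.

```python
import random

def relu(values):
    """ReLU: keep positive values and replace negative ones with 0 (a non-linear function)."""
    return [max(0.0, v) for v in values]

def dropout(values, rate, rng):
    """Inverted dropout (training only): set each value to 0 with probability `rate`
    and scale the survivors by 1 / (1 - rate) so the expected total is unchanged."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

activations = [-2.0, -0.5, 0.0, 1.5, 3.0]
print(relu(activations))  # → [0.0, 0.0, 0.0, 1.5, 3.0]

rng = random.Random(0)  # fixed seed so the run is reproducible
print(dropout(relu(activations), rate=0.5, rng=rng))  # some values zeroed, others doubled
```

Dropout is only applied during training; when the trained network is used for predictions, all values pass through unchanged.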

What can convolutional neural networks be used for?

Before CNNs existed, objects in images were identified using time-consuming feature extraction methods that had to be designed by hand. Convolutional neural networks offer a more scalable approach to image classification and object detection. Using principles of linear algebra, in particular matrix multiplication, CNNs are able to recognise patterns in an image. They are now widely used in the following areas:
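The link to matrix multiplication can be illustrated with a minimal sketch of a well-known implementation technique, often called "im2col": every image patch is unrolled into a row of a matrix, the kernel is flattened into a vector, and one matrix-vector product yields the whole feature map. The tiny image and kernel are invented, and this is an illustration of the idea rather than a description of how any particular library computes convolutions.

```python
# Convolution rewritten as a matrix-vector product ("im2col" idea).
# Assumptions: stride 1, no padding, kernel not flipped.

def im2col(image, kh, kw):
    """Unroll every kh x kw patch of `image` into one row of a matrix."""
    rows = []
    for i in range(len(image) - kh + 1):
        for j in range(len(image[0]) - kw + 1):
            rows.append([image[i + u][j + v] for u in range(kh) for v in range(kw)])
    return rows

def matvec(matrix, vector):
    """Plain matrix-vector multiplication."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [1, 2],
    [0, -1],
]

patches = im2col(image, 2, 2)                    # 4 patches with 4 values each
kernel_vec = [w for row in kernel for w in row]  # [1, 2, 0, -1]
flat_map = matvec(patches, kernel_vec)           # one value per output position
feature_map = [flat_map[r * 2:(r + 1) * 2] for r in range(2)]  # reshape to 2 x 2
print(feature_map)  # → [[0, 2], [6, 8]]
```

Because the whole convolution becomes one large matrix product, it can be computed efficiently on hardware such as GPUs, which are optimised for exactly this kind of operation.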

- Image and speech recognition: CNNs automatically recognise objects or people in images and videos, for example, for photo-tagging in smartphones, facial recognition systems and voice assistants like Siri or Alexa.

- Medical diagnostics: Here, AI image recognition technology enhances diagnostics by aiding in the analysis of medical images such as X-rays, CT scans and MRIs.

- Autonomous vehicles: ConvNets are used, for example, in self-driving cars to recognise road features and obstacles.

- Social media: CNNs are also used for text mining, which allows social media platforms to moderate content automatically and create personalised advertising.

- Marketing and retail: CNNs are used to mine data, enabling visual product searches and product placement.

What are the advantages and disadvantages of convolutional neural networks?

Convolutional neural networks can automatically extract relevant features from data and achieve a high level of accuracy. However, training CNNs effectively requires large volumes of labelled data and substantial computing power, typically in the form of powerful GPUs.

| Advantages | Disadvantages |
|---|---|
| Automatic feature extraction | High computational requirements |
| High level of accuracy | Large datasets needed |

CNNs have revolutionised the field of artificial intelligence and offer immense benefits across various sectors. Future developments, such as hardware improvements, new data collection methods and advanced architectures like Capsule Networks, could optimise CNNs further and integrate them into more technologies, opening up a wider range of use cases.