Encryption methods

The word encryption refers to a method by which plain text is converted into an incomprehensible sequence using a key. In the best case scenario, the content of the encrypted text is only accessible to the user who has the key to read it. The terms “plaintext” and “ciphertext” have historically been used when talking about encryption. In addition to text messages, modern encryption methods can also be applied to other electronically transmitted information such as voice messages, image files, or programme code.

Encryption is used to protect files, drives, or directories from unwanted access or to transmit data confidentially. In ancient times, simple encryption methods were used which were primarily restricted to coding the information that was to be protected. Individual characters of plaintext, words, or entire sentences within the message are rearranged (transposition) or replaced by alternative character combinations (substitution).

To be able to decode such an encrypted text, the recipient needs to know the rule used to encrypt it. Permutations in the context of transposition methods are usually carried out using a matrix. To rewrite a transposed ciphertext into plaintext, you have to either know or reconstruct the transposition matrix. Substitution methods are based on a tabular assignment of plaintext characters and ciphers in the form of a secret code book.

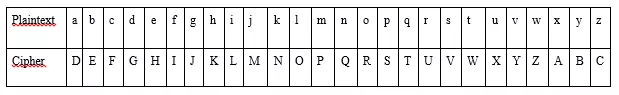

One of the first and easiest encryption methods of this type goes all the way back to Julius Caesar. The so-called Caesar cipher is based on monoalphabetic substitution. To protect his military correspondence from enemy spies, the emperor shifted the letters of each word by three steps in the alphabet. The resulting encryption code went as follows:

The number of steps by which the characters are moved counts as the key in this type of encryption. This isn’t given as a number, but instead as the corresponding letter in the alphabet. A shift by three positions uses “C” as its key.
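The shift described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the 26-letter Latin alphabet and uppercase text; the function name `caesar_shift` and the sample message are hypothetical and not part of the original description.

```python
import string

ALPHABET = string.ascii_uppercase  # assumption: 26-letter Latin alphabet


def caesar_shift(text: str, shift: int) -> str:
    """Shift every letter A-Z by `shift` positions, wrapping around at Z."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)  # spaces and punctuation stay unchanged
    return "".join(result)


# Encrypt with a shift of three, then decrypt by shifting back.
ciphertext = caesar_shift("ATTACK AT DAWN", 3)
plaintext = caesar_shift(ciphertext, -3)
```

Decryption is simply the same substitution applied with the negative shift, which is why knowing the key is enough to read the message.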

While the principle behind the Caesar cipher is relatively simple, today’s ciphers are based on a much more complex mathematical process, called an algorithm. Several of these combine various substitutions and transpositions, using keys in the form of passwords or binary codes as their parameters. These methods are much more difficult for cryptanalysts (code-crackers) to decipher. Many established cryptosystems created using modern means are considered uncrackable.

How does encryption work?

An encryption method is formed from two basic components: a cryptographic algorithm and at least one cipher key. While the algorithm describes the encryption method (for example, “move each letter along the order of the alphabet”), the key provides the parameters (“C = three steps”). An encryption can therefore be described as a method by which plaintext and a key are passed through a cryptographic algorithm, and a secret text is produced.

Digital keys

Modern cipher methods use digital keys in the form of bit sequences. An essential criterion for the security of the encryption is the key length in bits. This is the base-2 logarithm of the number of possible key combinations, which is also referred to as the key space. The bigger the key space, the more resilient the encryption will be against brute force attacks, a cracking method based on testing all possible combinations.

To explain brute force attacks, we’ll use the Caesar cipher as an example. Its key space is 25, which corresponds to a key length of less than 5 bits. A code-cracker only needs to try 25 combinations in order to decipher the plaintext, so encryption using the Caesar cipher doesn’t constitute a serious obstacle. Modern encryption methods, on the other hand, use keys which can provide significantly more defense. The Advanced Encryption Standard (AES), for example, offers key lengths of 128, 192, or 256 bits. The key space of this method is accordingly large.
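A brute force attack on the Caesar cipher can be sketched as follows. This is only an illustration under the assumption of uppercase Latin letters; the helper names `shift_letters` and `brute_force` and the sample ciphertext are hypothetical.

```python
def shift_letters(text: str, shift: int) -> str:
    """Shift uppercase letters by `shift` positions; leave other characters alone."""
    return "".join(
        chr((ord(c) - 65 + shift) % 26 + 65) if "A" <= c <= "Z" else c
        for c in text
    )


def brute_force(ciphertext: str) -> dict:
    """Return all 25 candidate plaintexts, one for each possible non-zero shift."""
    return {shift: shift_letters(ciphertext, -shift) for shift in range(1, 26)}


candidates = brute_force("DWWDFN DW GDZQ")
# A human (or a dictionary check) picks the one candidate that reads as real text.
```

With only 25 candidates, the attacker can simply read through the whole list, which is exactly why such a small key space offers no protection.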

Even with 128-bit encryption, 2^128 states can be mapped. These correspond to more than 340 undecillion (about 3.4 × 10^38) possible key combinations. If AES works with 256 bits, the number of possible keys is more than 115 quattuorvigintillion (115 × 10^75). Even with the appropriate technical equipment, it would take an eternity to try out all of the possible combinations. With today’s technology, brute force attacks on the AES key length are simply not practical. According to the EE Times, it would take a supercomputer 1 billion billion years to crack a 128-bit AES key, which is more than the age of the universe.
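The relationship between key length and key space can be checked with a few lines of arithmetic. The sketch below only illustrates the numbers discussed above; the function name `key_space` is an assumption made for this example.

```python
import math


def key_space(bits: int) -> int:
    """Number of distinct keys that fit into a key of the given bit length."""
    return 2 ** bits


caesar_bits = math.log2(25)  # key length equivalent of 25 keys, a little under 5 bits
aes128_keys = key_space(128)
aes256_keys = key_space(256)
```

Each additional bit doubles the key space, so moving from 128 to 256 bits does not double the effort of a brute force attack but multiplies it by 2^128.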

Kerckhoffs’ principle

Because of the long key lengths, brute force attacks against modern encryption methods are no longer a threat. Instead, code-crackers look for weaknesses in the algorithm that make it possible to reduce the computation time for decrypting the encrypted data. Another approach is focused on so-called side channel attacks that target the implementation of a cryptosystem in a device or software. The secrecy of an encryption process is rather counterproductive for its security.

According to Kerckhoffs’ principle, the security of a cryptosystem is not based on the secrecy of the algorithm, but on the key. As early as 1883, Auguste Kerckhoffs formulated one of the principles of modern cryptography: To encrypt a plaintext reliably, all you really need to do is provide a well-known mathematical method with secret parameters. This theory remains basically unchanged today.

Like many other areas of software engineering, the development of encryption mechanisms is based on the assumption that open source doesn’t compromise the security. Instead, by consistently applying Kerckhoffs’ principle, errors in the cryptographic algorithm can be discovered quickly, since methods which hope to be adequate have to hold their own in the critical eyes of the professional world.

Key expansion: From password to key

Users who want to encrypt or decrypt files generally don’t come into contact with keys, but instead use a more manageable sequence: a password. Secure passwords have between 8 and 12 characters, use a combination of letters, numbers, and special characters, and have a decisive advantage over bit sequences: People can remember passwords without requiring much effort.

As a key, though, passwords aren’t suitable for encryption because of their short length. Many encryption methods then include an intermediate step where passwords of any length are converted into a fixed bit sequence corresponding to the cryptosystem in use. There are also methods that randomly generate keys within the scope of the technical possibilities.

A popular method of creating keys from passwords is PBKDF2 (Password-Based Key Derivation Function 2). In this method, passwords are supplemented by a pseudo-random string, called the salt value, and then mapped to a bit sequence of the desired length using cryptographic hash functions.

Hash functions are collision-resistant one-way functions: calculations which are relatively easy to carry out, but take considerable effort to reverse. In cryptography, a method is considered secure when different hashes are assigned to different documents. Passwords that are converted into keys using a one-way function can only be reconstructed with considerable computational effort. This is similar to searching in a phone book: While it’s easy to search for the phone number matching a given name, searching for a name using only a telephone number can be practically impossible.

In the framework of PBKDF2, the mathematical calculation is carried out in several iterations (repetitions) in order to protect the key that’s been generated against brute force attacks. The salt value increases the effort needed to reconstruct a password on the basis of rainbow tables. A rainbow table is a precomputed lookup structure used by code-crackers to infer an unknown password from stored hash values.
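Python's standard library exposes PBKDF2 through `hashlib.pbkdf2_hmac`, so the derivation described above can be sketched directly. The iteration count, salt length, hash function, and the wrapper name `derive_key` are assumptions chosen for illustration, not recommendations from the original text.

```python
import hashlib
import os


def derive_key(password: str, salt: bytes,
               iterations: int = 200_000, length: int = 32) -> bytes:
    """Map a password and salt to a fixed-length key using PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=length
    )


salt = os.urandom(16)  # random salt, stored alongside the ciphertext
key = derive_key("correct horse battery staple", salt)  # 32 bytes = 256-bit key
```

The same password and salt always produce the same key, while a different salt yields an unrelated key. This is what makes precomputed tables impractical.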

Other password hashing methods are scrypt, bcrypt, and LM-Hash, although the last of these is considered outdated and unsafe.

The problem with key distribution

A central problem of cryptology is the question of how information can be encrypted in one place and decrypted in another. Even Julius Caesar was faced with the problem of key distribution. If he wanted to send an encrypted message from Rome to the Germanic front, he had to make sure that whoever was going to receive it would be able to decipher the secret message. The only solution: The messenger had to transmit not only the secret message, but also the key. But how to transfer the key without running the risk of it falling into the wrong hands?

The same question keeps cryptologists busy today as they try to transfer encrypted data over the internet. Suggested solutions are incorporated in the development of various cryptosystems and key exchange protocols. The key distribution problem of symmetric cryptosystems can be regarded as the main motivation for the development of asymmetric encryption methods.

Classifying encryption methods

In modern cryptology, a distinction is made between symmetric and asymmetric encryption methods. This classification is based on key handling.

With symmetric cryptosystems, the same key is used both for the encryption and the decryption of coded data. If two communicating parties want to exchange encrypted data, they also need to find a way to secretly transmit the shared key. With asymmetric cryptosystems, on the other hand, every partner in the communication generates their own pair of keys: a public key and a private key.

When symmetric and asymmetric encryption methods are used in combination, it’s called a hybrid method.

Symmetric encryption methods

Until the 1970s, the encryption of information was based exclusively on symmetric cryptosystems whose origins lie in ancient methods like the Caesar cipher. The main principle of symmetric encryption is that encryption and decryption are done using the same key. If two parties want to communicate via encryption, both the sender and the receiver need to have copies of the same key. To protect encrypted information from being accessed by third parties, the symmetric key is kept secret. This is referred to as a private key method.

While classic symmetric encryption methods work on the letter level to rewrite the plaintext into its secret version, ciphering is carried out on computer-supported cryptosystems at the bit level. So a distinction is made here between stream ciphers and block ciphers.

- Stream ciphers: Each character or bit of the plaintext is combined with a character or bit of the keystream to produce a ciphered output character.

- Block ciphers: The characters or bits to be encrypted are combined into fixed-length blocks and mapped to a fixed-length cipher.
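The difference between the two approaches can be illustrated with a short sketch. Both functions are simplified assumptions made for this example: `xor_stream` combines data with a keystream that is simply given, and `split_blocks` only shows the fixed-length blocking with zero padding, not an actual block cipher.

```python
def xor_stream(data: bytes, keystream: bytes) -> bytes:
    """Stream-cipher idea: combine each data byte with one keystream byte via XOR."""
    if len(keystream) < len(data):
        raise ValueError("keystream must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, keystream))


def split_blocks(data: bytes, block_size: int = 8) -> list:
    """Block-cipher idea: cut the input into fixed-length blocks (zero-padded here)."""
    padded = data + b"\x00" * (-len(data) % block_size)
    return [padded[i:i + block_size] for i in range(0, len(padded), block_size)]


keystream = bytes(range(100, 110))          # hypothetical keystream bytes
cipher = xor_stream(b"0123456789", keystream)
blocks = split_blocks(b"0123456789")        # two 8-byte blocks
```

Applying `xor_stream` a second time with the same keystream restores the plaintext, because XOR is its own inverse.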

Popular encryption methods for symmetric cryptosystems are relatively simple operations such as substitution and transposition that are combined in modern methods and applied to the plaintext in several successive rounds (iterations). Additions, multiplications, modular arithmetic, and XOR operations are also incorporated into modern symmetric encryption algorithms.

Well-known symmetric encryption methods include the now-outdated Data Encryption Standard (DES) and its successor, the Advanced Encryption Standard (AES).

Data Encryption Standard (DES)

DES is a symmetric encryption method that was developed by IBM in the 1970s and standardised in 1977 by the US National Bureau of Standards (NBS), the predecessor of today’s National Institute of Standards and Technology (NIST). By the standards of the time, DES was a secure, computer-assisted encryption method and formed the basis for modern cryptography. The standard is not patented, but due to the relatively low key length of 64 bits (effectively only 56 bits), it’s basically obsolete for use today. As early as 1994, the cryptosystem was cracked with only twelve HP-9735 workstations and a 50-day computing effort. With today’s technology, a DES key can be cracked in just a few hours using brute force attacks.

The symmetric algorithm of a block encryption works at the bit level. The plaintext is broken down into blocks of 64 bits, which are then individually encrypted with a 64-bit key. In this way, the 64-bit plaintext is translated into 64-bit secret text. Since each eighth bit of the key acts as a parity bit (or check bit), only 56 bits are available for encryption.

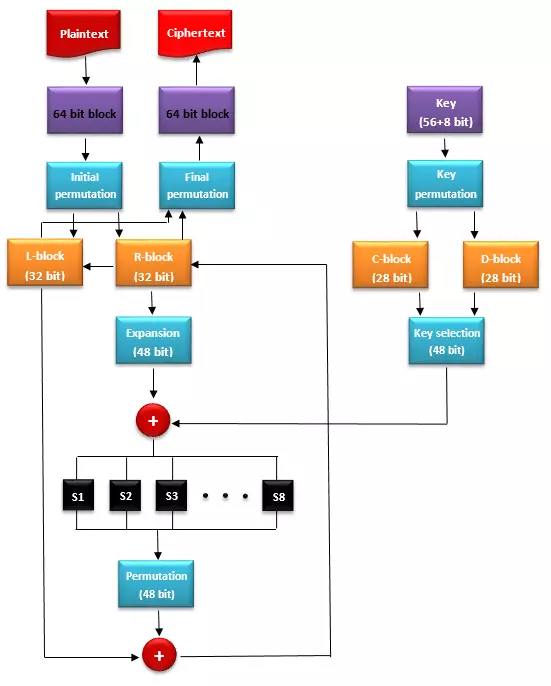

The DES encryption algorithm is a so-called Feistel network and is based on a combination of substitutions and transpositions performed in 16 iterations. The process, named after the IBM employee Horst Feistel, can be described in four steps:

1. Initial permutation: The included 64-bit plaintext block is subjected to an input permutation, which changes the order of the bits. The result of this permutation is written into two 32-bit registers. This results in a left block half (L) and a right block half (R).

2. Key permutation: The 56 bits of the key that are relevant for the encryption are also subjected to a permutation, and then divided into two 28-bit blocks (C and D). For each of the 16 iterations, a round key is generated from both key blocks C and D. To do this, the bits of both block halves are shifted cyclically to the left by 1 or 2 bits, respectively. This should ensure that in every encryption round, a different round key is included. Afterward, the two half blocks C and D are mapped onto a 48-bit round key.

3. Encryption round: Every encryption round consists of the four steps listed below. For each loop, a half block R and a round key are inserted in the encryption. The half block L initially remains outside.

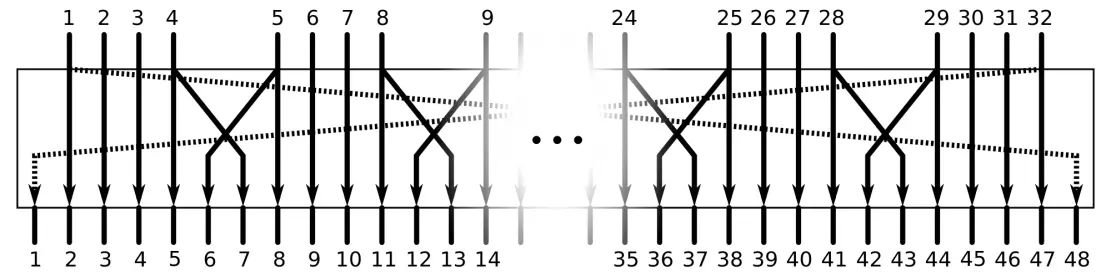

- Expansion: The half block R is extended to a 48-bit block using expansion. For this, the 32 bits of the half block are divided into 4-bit groups as part of the expansion, and then are partially duplicated and permuted according to the following scheme:

- XOR-link of data block and round key: In every encryption round, a 48-bit expanded block R is linked with a 48-bit round key using an XOR operation. The result of the XOR-link is in turn a 48-bit block.

- S-boxes (substitution boxes): Following the XOR operation, the 48-bit block is split into eight 6-bit blocks, substituted by eight 4-bit blocks using S-boxes, and then combined into a 32-bit block. The result of the substitution boxes is again subjected to a permutation.

- XOR-link of R block and L block: After every encryption round, the result of the S-boxes is linked with the as-yet unused L block via XOR. The result is a 32-bit block that then enters the next encryption round as the new R block. The R block of the first round serves as the L block of the second round.

4. Final permutation: Once all 16 encryption rounds have been completed, the L and R blocks are combined into a 64-bit block and subjected to an inverse output permutation for the final permutation. The plaintext is encrypted.

The following graphic shows a schematic representation of the DES algorithm. The XOR-links are marked as red circles with white crosses.

The deciphering of DES encrypted secret texts follows the same scheme, but in the reverse order.
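The Feistel structure explains why decryption can reuse the encryption routine. The sketch below is a toy Feistel network on 64-bit blocks, not DES itself: the round function is a stand-in based on SHA-256, the round keys are arbitrary integers, and the initial and final permutations are omitted. All names are assumptions for this example.

```python
import hashlib


def round_function(half: int, round_key: int) -> int:
    """Toy F-function (assumption): hash half block and round key down to 32 bits."""
    data = half.to_bytes(4, "big") + round_key.to_bytes(4, "big")
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")


def feistel(block: int, round_keys) -> int:
    """Run a 64-bit block through one Feistel round per round key."""
    left, right = block >> 32, block & 0xFFFFFFFF
    for key in round_keys:
        # The old R becomes the new L; the new R is old L XOR F(old R, key).
        left, right = right, left ^ round_function(right, key)
    # Swap the halves at the end so the same routine can decrypt.
    return (right << 32) | left


round_keys = list(range(1, 17))                  # 16 hypothetical round keys
ciphertext = feistel(0x0123456789ABCDEF, round_keys)
plaintext = feistel(ciphertext, round_keys[::-1])  # same routine, keys reversed
```

Because each round only XORs one half with a value derived from the other half, the round function itself never has to be inverted. Running the rounds with the keys in reverse order undoes the encryption.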

A major criticism of DES is that the small key length of 56 bits results in a comparatively small key space. This length can no longer withstand the brute force attacks that are available with today’s computing power. The method of key permutation, which generates 16 nearly identical round keys, is considered too weak.

A variant of DES was developed with Triple-DES (3DES), where the encryption method runs in three successive rounds. But the effective key length of 3DES is still only 112 bits, which remains below today’s minimum standard of 128 bits. Additionally, 3DES is also more computationally intensive than DES.

The Data Encryption Standard has therefore largely been replaced. The Advanced Encryption Standard algorithm has taken over as its successor, and is also a symmetric encryption.

Advanced Encryption Standard (AES)

In the 1990s, it became apparent that DES, the most commonly used encryption standard, was no longer technologically up to date. A new encryption standard was needed. As its replacement, developers Vincent Rijmen and Joan Daemen established the Rijndael algorithm (pronounced “Rain-dahl”), a method that, due to its security, flexibility, and performance, won a public tender and was certified by NIST as the Advanced Encryption Standard (AES) at the end of 2000.

AES also divides the plaintext to be encrypted into blocks, so the system is based on block encryption just like DES. The standard supports 128, 192, and 256-bit keys. But instead of 64-bit blocks, AES uses significantly larger 128-bit blocks, which are encrypted using a substitution permutation network (SPN) in several successive rounds. The DES successor also uses a new round key for each encryption round, which is derived recursively from the initial key and is linked with the data block to be encrypted using XOR. The encryption process is divided into roughly four steps:

1. Key expansion: AES, like DES, uses a new round key for every encryption loop. This is derived from the initial key via recursion. In the process, the length of the initial key is also expanded to make it possible to map the required number of 128-bit round keys. Every round key is based on a partial section of the expanded initial key. The number of required round keys is equal to the number of rounds (R), including the final round, plus a round key for the preliminary round (number of keys = R + 1).

2. Preliminary round: In the preliminary round, the 128-bit input block is transferred to a two-dimensional table (array) and linked to the first round key using XOR (KeyAddition). The table is made up of four rows and four columns. Each cell contains one byte (8 bits) of the block to be encrypted.

3. Encryption rounds: The number of encryption rounds depends on the key length used: 10 rounds for AES128, 12 rounds for AES192, and 14 rounds for AES256. Each encryption round uses the following operations:

- SubBytes: SubBytes is a monoalphabetic substitution. Each byte in the block to be encrypted is replaced by an equivalent using an S-box.

- ShiftRows: In the ShiftRows transformation, bytes in the array cells (see preliminary round) are shifted cyclically to the left.

- MixColumns: With MixColumns, the AES algorithm uses a transformation whereby the data is mixed within the array columns. This step is based on a recalculation of each individual cell. To do this, the columns of the array are subjected to a matrix multiplication and the results are linked using XOR.

- KeyAddition: At the end of each encryption round, another KeyAddition takes place. This, just like the preliminary round, is based on an XOR link of the data block with the current round key.

4. Final round: The final round is the last encryption round. Unlike the previous rounds, it doesn’t include the MixColumns transformation, and so only includes the SubBytes, ShiftRows, and KeyAddition operations. The result of the final round is the ciphertext.

The decryption of AES-ciphered data is based on the inversion of the encryption algorithm. This refers to not only the sequence of the steps, but also the operations ShiftRows, MixColumns, and SubBytes, whose directions are reversed as well.

AES is certified for providing a high level of security as a result of its algorithm. Even today, there are no known attacks of practical relevance. Brute force attacks are inefficient against the key length of at least 128 bits. Operations such as ShiftRows and MixColumns also ensure optimal mixing of the bits: As a result, each bit depends on the key. The cryptosystem is reassuring not just because of these measures, but also due to its simple implementation and high level of secrecy. AES is used, among other things, as an encryption standard for WPA2, SSH, and IPSec. The algorithm is also used to encrypt compressed file archives such as 7-Zip or RAR.

AES-encrypted data is safe from third-party access, but only so long as the key remains a secret. Since the same key is used for encryption and decryption, the cryptosystem is affected by the key distribution problem just like any other symmetric method. The secure use of AES is restricted to application fields that either don’t require a key exchange or allow the exchange to happen via a secure channel. But ciphered communication over the internet requires that the data can be encrypted on one computer and decrypted on another. This is where asymmetrical cryptosystems have been implemented, which enable a secure exchange of symmetrical keys or work without the exchange of a common key.

Available alternatives to AES are the symmetric cryptosystems MARS, RC6, Serpent, and Twofish, which are also based on a block encryption and were among the finalists of the AES tender alongside Rijndael. Blowfish, the predecessor to Twofish, is also still in use. Salsa20, which was developed by Daniel J. Bernstein in 2005, is one of the finalists of the European eSTREAM Project.
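Two of the AES round operations described in this section, ShiftRows and KeyAddition, are simple enough to sketch on a 4×4 byte table. This is an illustration only: the state is modelled as a list of four rows, the sample values are arbitrary, and SubBytes, MixColumns, and the key expansion are left out. In AES, row r of the table is rotated left by r positions.

```python
def shift_rows(state):
    """Rotate row r of the 4x4 state cyclically to the left by r positions."""
    return [row[r:] + row[:r] for r, row in enumerate(state)]


def add_round_key(state, round_key):
    """KeyAddition: XOR every state byte with the matching round-key byte."""
    return [
        [s ^ k for s, k in zip(state_row, key_row)]
        for state_row, key_row in zip(state, round_key)
    ]


# Hypothetical sample data: bytes 0..15 as state, a constant round key.
state = [[r * 4 + c for c in range(4)] for r in range(4)]
round_key = [[0xA5] * 4 for _ in range(4)]

shifted = shift_rows(state)
mixed_with_key = add_round_key(shifted, round_key)
```

Since XOR is its own inverse, applying `add_round_key` again with the same round key returns the previous state, which is one reason decryption can run the steps in reverse.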

Asymmetric encryption methods

While for symmetric cryptosystems, the same key is used on both sides of the encrypted communication, the two parties of an asymmetrically encrypted communication each generate a key pair of their own. Each participant in the communication has two keys at their disposal: one public key and one private key. To be able to receive encrypted information, each party has to publish their public key. This generally happens over a key server and is referred to as a public key method. The private key stays a secret. This is the strength of the asymmetric cryptosystem: As opposed to a symmetric encryption method, the secret key never leaves its owner’s sight.

Public keys are used for encryption in an asymmetric encryption. They also allow digital signatures to be checked and users to be verified. Private keys, on the other hand, are used for decryption and enable the generation of digital signatures or authentication to other users.

An example:

User A would like to send user B an encrypted message. For this, A needs the public key of B. The public key of B allows A to encrypt a message that only the private key of B is able to decrypt. Except for B, nobody is capable of reading the message. Even A has no way to decrypt the encryption.

The advantage of asymmetric encryption is the principle that anyone can use the public key of user B to encrypt messages, which can then only be deciphered by B using its secret key. Since only the public key is exchanged, there is no need to create a tamper-proof, secure channel.

The disadvantage of this encryption method is that B can’t be sure that the encrypted message actually comes from A. Theoretically, a third user (C) could use the public key of B to encrypt a message, for example to spread malware. In addition, A can’t be sure that the public key really belongs to B. A public key could have been created by C and passed off as the key of B in order to intercept messages from A to B. For asymmetric encryptions, a mechanism is needed so that users can verify the authenticity of their communication partners.

Digital certificates and signatures are solutions to this problem.

- Digital certificates: To secure asymmetric encryption methods, communication partners can have the authenticity of their public keys confirmed by an official certification body. A popular standard for public key certification is X.509. This is used, for example, in TLS/SSL encrypted data transmission via HTTPS or as part of the e-mail encryption via S/MIME.

- Digital signatures: While digital certificates can be used to verify public keys, digital signatures are used to identify the sender of an encrypted message beyond a doubt. A private key is used to generate a signature. The receiver then verifies this signature using the public key of the sender. The authenticity of the digital signature is secured through a hierarchically structured public key infrastructure (PKI). A decentralized alternative to the hierarchical PKI is the so-called Web of Trust (WoT), a network in which users can directly and indirectly verify each other.

The first scientific publication of asymmetric encryption methods was made in 1977 by the mathematicians Rivest, Shamir, and Adleman. The RSA method, which is named after the inventors, is based on one-way functions with a trapdoor and can be used for encrypting data and also as a signature method.

Rivest, Shamir, Adleman (RSA)

RSA is one of the safest and best-described public key methods. The idea of encryption using a public encryption key and a secret decryption key can be traced back to the cryptologists Whitfield Diffie and Martin Hellman. Their method was published in 1976 as the Diffie-Hellman key exchange, which enables two communication partners to agree on a secret key over an insecure channel. The researchers built on Merkle’s puzzles, developed by Ralph Merkle, so it is sometimes also called the Diffie-Hellman-Merkle key exchange (DHM).

The cryptologists used mathematical one-way functions, which are easy to compute, but can only be reversed with considerable computational effort. Even today, the key exchange named after them is used to negotiate secret keys within symmetric cryptosystems. Another merit of the duo’s research is the concept of the trapdoor. The Diffie-Hellman key exchange publication already addresses hidden shortcuts that are intended to make it possible to speed up the inversion of a one-way function. Diffie and Hellman didn’t provide concrete proof, but their theory of the trapdoor encouraged various cryptologists to carry out their own investigations.
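The key exchange can be illustrated with deliberately tiny numbers. The sketch below assumes the common textbook formulation based on modular exponentiation; the parameters p = 23 and g = 5, the secret exponents, and the function name are chosen only for readability and are far too small for real use.

```python
def dh_shared_secret(p: int, g: int, own_secret: int, other_public: int) -> int:
    """Combine your own secret exponent with the other party's public value."""
    return pow(other_public, own_secret, p)


p, g = 23, 5                 # public parameters, known to everyone
a_secret, b_secret = 4, 3    # private values, never transmitted

a_public = pow(g, a_secret, p)   # sent from A to B over the open channel
b_public = pow(g, b_secret, p)   # sent from B to A over the open channel

key_at_a = dh_shared_secret(p, g, a_secret, b_public)
key_at_b = dh_shared_secret(p, g, b_secret, a_public)
```

Both sides arrive at the same value even though only `a_public` and `b_public` crossed the channel. Recovering the secrets from those public values means reversing the one-way function, which is infeasible for realistically large parameters.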

Rivest, Shamir, and Adleman also searched for shortcuts to one-way functions, originally with the intention of refuting the trapdoor theory. But their research ended up taking another direction, and instead resulted in the encryption process eventually named after them. Today, RSA is considered the first scientifically published algorithm that allows for the transfer of encrypted data without the exchange of a secret key.

RSA uses an algorithm that is based on the multiplication of large prime numbers. While there generally aren’t any problems with multiplying two primes with 100, 200, or 300 digits, there is still no efficient algorithm that can decompose the result of such a computation into its prime factors. Prime factorisation can be explained by this example:

If you multiply the prime numbers 14,629 and 30,491, you’ll get the product 446,052,839. This product has only four divisors: 1, the number itself, and the two original primes that were multiplied. If you set aside the first two divisors, since every number is divisible by 1 and by itself, you’re left with the initial values of 14,629 and 30,491.
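For numbers of this size, the factors can still be recovered by simply trying divisors one after another. The following sketch uses plain trial division; the function name `smallest_factor` is an assumption for this example, and the approach is shown precisely because it stops being practical once the primes have hundreds of digits.

```python
def smallest_factor(n: int) -> int:
    """Return the smallest divisor of n greater than 1 (n itself if n is prime)."""
    if n % 2 == 0:
        return 2
    candidate = 3
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate
        candidate += 2  # even candidates were ruled out above
    return n


n = 14_629 * 30_491          # the product from the example: 446,052,839
p = smallest_factor(n)
q = n // p
```

Multiplying takes a single step, while the search above needs several thousand divisions even for this small example. The gap between the two directions grows dramatically with the length of the primes.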

This principle is the basis of the RSA key generation. The public and the private key are each a pair of numbers:

Public key: (e, N)

Private key: (d, N)

N is the so-called RSA modulus. This is contained in both keys and results from the product of two randomly selected, very large primes p and q (N = p × q).

To generate the public key, you need e, a number that is randomly selected according to certain restrictions. Combine N and e to get the public key, which is given in plaintext to each communication partner.

To generate the private key, you need N as well as d. The value d is derived from the randomly generated primes p and q and from the random number e, and is computed using Euler’s phi function (ϕ).

Which prime numbers go into the computation of the private key has to remain secret to guarantee the security of the RSA encryption. The product of both prime numbers, however, can be used in the public key in plaintext, since it’s assumed that today there’s no efficient algorithm that can decompose the product into its prime factors in an appropriate amount of time. It’s also not possible to compute p and q from N, unless the calculation can be shortened by a trapdoor. This trapdoor is the value d, which is known only to the owner of the private key.
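The key generation described above can be sketched with the small primes from the earlier example. This is textbook RSA without padding, assuming the usual relation e × d ≡ 1 (mod ϕ(N)) with ϕ(N) = (p − 1)(q − 1); the choice e = 65537, the function names, and the sample message are assumptions for illustration, and the numbers are far too small to be secure.

```python
import math


def make_keypair(p: int, q: int, e: int = 65537):
    """Build a toy RSA key pair (e, N) and (d, N) from two primes."""
    n = p * q
    phi = (p - 1) * (q - 1)          # Euler's phi for a product of two distinct primes
    if math.gcd(e, phi) != 1:
        raise ValueError("e must be coprime to phi(N)")
    d = pow(e, -1, phi)              # modular inverse, needs Python 3.8 or newer
    return (e, n), (d, n)


def encrypt(message: int, public_key) -> int:
    e, n = public_key
    return pow(message, e, n)


def decrypt(ciphertext: int, private_key) -> int:
    d, n = private_key
    return pow(ciphertext, d, n)


public_key, private_key = make_keypair(14_629, 30_491)
message = 123_456_789                 # must be smaller than N
recovered = decrypt(encrypt(message, public_key), private_key)
```

Computing d requires ϕ(N), and ϕ(N) requires p and q. Anyone who only knows N and e would first have to factor N, which is where the security of the method rests.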

The security of the RSA algorithm is highly dependent on technical progress. Computing power doubles about every two years, and so it’s not out of the realm of possibility that an efficient method for prime factorisation of the key sizes in use today will be available in the foreseeable future. In this case, RSA offers the possibility to adapt the algorithm to keep up with this technical progress by using even larger prime numbers in the computation of the key.

For a secure operation of the RSA process, NIST provides recommendations that specify the number of bits and suggested N values for different levels of security as well as different key lengths.

ID-based public key method

The central difficulty with asymmetrical encryption methods is the user authentication. In classic public key methods, the public key is not associated at all with the identity of its user. If a third party is able to use the public key by pretending to be one of the parties involved in the encrypted data transfer, then the whole cryptosystem can be undermined without having to directly attack the algorithm or a private decryption key.

In 1984, Adi Shamir, one of the developers of RSA, proposed an ID-based cryptosystem that is based on the asymmetric approach but attempts to overcome its weakness. In identity-based encryption (IBE), the public key of a communication partner is not generated with any dependence on the private key, but instead from a unique ID. Depending on the context, for example, an e-mail address or domain could be used. This eliminates the need to authenticate public keys through official certification bodies. Instead, the private key generator (PKG) takes its place. This is a central instance from which the recipient of an encrypted message can obtain a decryption key belonging to their identity.

The theory of ID-based encryption solves a basic problem of the asymmetrical cryptosystem, but introduces two other security gaps instead. A central point of criticism is the question of how the private decryption key is transferred from the PKG to the recipient of the encrypted message. Here the familiar key distribution problem arises again. Another downside to the ID-based method is that there is another entity besides the recipient of a ciphered message that knows the decryption key: the PKG. The key is then, by definition, no longer private and can be misused. Theoretically, the PKG is able to decrypt all messages without authorisation. This can be avoided by generating the key pair using open source software on your own computer.

The most popular ID-based method can be traced back to Dan Boneh and Matthew K. Franklin.

Hybrid methods

Hybrid encryption is the joining of symmetric and asymmetric cryptosystems in the context of data transmission on the internet. The goal of this combination is to compensate for the weaknesses of one system using the strengths of the other.

Symmetric cryptosystems like AES (Advanced Encryption Standard) are regarded as safe by today’s technical standards and enable large amounts of data to be processed quickly and efficiently. The design of the algorithm is based on a shared secret key that must be exchanged between the receiver and the sender of the ciphered message. But users of symmetric methods are faced with the problem of key distribution. This can be solved through asymmetric cryptosystems. Methods like RSA depend on a strict separation of public and private keys, which removes the need to transfer a private key.

But RSA only provides reliable protection against cryptanalysis with a sufficiently large key length, which must be at least 1,976 bits. This results in long computing operations that disqualify the algorithm for the encryption and decryption of large amounts of data. In addition, the secret text to be transmitted is significantly larger than the original plaintext after going through an RSA cipher.

In hybrid encryption methods, asymmetric algorithms aren’t used to encrypt user data, but instead to secure the transmission of a symmetric session key over an unprotected public channel. This in turn makes it possible to decrypt a ciphertext with the help of symmetric algorithms.

The process of a hybrid encryption can be described in three steps:

1. Key management: With hybrid methods, the symmetrical encryption of a message is framed by an asymmetrical encryption method, so an asymmetric (a) as well as a symmetric (b) key must be generated.

- Before the encrypted data transfer occurs, both communication partners first must generate an asymmetric key pair: a public key and a private key. Afterwards the exchange of the public keys takes place – in the best case, secured through an authentication mechanism. The asymmetric key pair serves to both cipher and decipher a symmetric session key, and so is generally used multiple times.

- The encryption and decryption of the plaintext is performed using the symmetrical session key. This is generated anew by the message sender for each encryption process.

2. Encryption: If an extensive message is to be transmitted securely over the internet, the sender must first generate a symmetrical session key with which the user data can be encrypted. When this is done, the recipient’s public key is then used for the asymmetrical ciphering of the session key. Both the user data and the symmetric key are then available in a ciphered form and can safely be sent.

3. Decryption: The encrypted text is sent to the recipient together with the encrypted session key, who needs their private key in order to asymmetrically decrypt the session key. This decrypted key is then used to decrypt the symmetrically ciphered user data.
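The three steps above can be sketched end to end using only the Python standard library. Everything here is a toy stand-in chosen so the example stays self-contained: the asymmetric part is textbook RSA built from two Mersenne primes, the symmetric part is an XOR keystream derived with SHA-256 rather than AES, and all names are hypothetical. None of it is suitable for protecting real data.

```python
import hashlib
import secrets

# Step 1a: toy asymmetric key pair of the recipient (insecure on purpose).
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent, needs Python 3.8 or newer
PUBLIC_KEY, PRIVATE_KEY = (E, N), (D, N)


def keystream_xor(data: bytes, session_key: bytes) -> bytes:
    """Toy symmetric cipher: XOR the data with a SHA-256 based keystream."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hashlib.sha256(session_key + counter).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], pad))
    return bytes(out)


def hybrid_encrypt(message: bytes, public_key):
    """Steps 1b and 2: fresh session key, symmetric data encryption, key wrapping."""
    e, n = public_key
    session_key = secrets.token_bytes(16)
    wrapped_key = pow(int.from_bytes(session_key, "big"), e, n)
    return wrapped_key, keystream_xor(message, session_key)


def hybrid_decrypt(wrapped_key: int, ciphertext: bytes, private_key) -> bytes:
    """Step 3: unwrap the session key, then decrypt the user data with it."""
    d, n = private_key
    session_key = pow(wrapped_key, d, n).to_bytes(16, "big")
    return keystream_xor(ciphertext, session_key)


message = b"A longer message that is encrypted symmetrically."
wrapped, ciphertext = hybrid_encrypt(message, PUBLIC_KEY)
decrypted = hybrid_decrypt(wrapped, ciphertext, PRIVATE_KEY)
```

Only the 16-byte session key goes through the slow asymmetric operation, while the message itself, however long, is handled by the fast symmetric routine. A real implementation would substitute vetted algorithms such as AES and padded RSA for both toy parts.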

According to this formula, an efficient encryption method can be implemented with which it’s possible to safely encrypt and decrypt even large amounts of user data at high speed. Since only a short session key is asymmetrically encrypted, the comparatively long calculation times of asymmetric algorithms carry little weight in hybrid encryption. The key distribution problem of the symmetric encryption method is reduced through the asymmetric encryption of session keys to simply be a problem of user authentication. This is solved in asymmetric cryptosystems through digital signatures and certificates.

Hybrid encryption methods are used in the form of IPSec, with the secured communication taking place over unsecured IP networks. The Hypertext Transfer Protocol Secure (HTTPS) also uses TLS/SSL to create a hybrid encryption protocol that combines symmetric and asymmetric cryptosystems. The implementation of hybrid methods is the basis for encryption standards such as Pretty Good Privacy (PGP), GnuPG, and S/MIME that are used in the context of e-mail encryption.

A popular combination for hybrid encryption methods is the symmetric encryption of user data via AES and the subsequent asymmetric encryption of the session key by means of RSA. Alternatively, the session key is negotiated using the Diffie-Hellman method. In its ephemeral form, this can provide forward secrecy, but it is prone to man-in-the-middle attacks unless the parties are authenticated. An alternative to the RSA algorithm is the Elgamal cryptosystem. In 1985, Taher Elgamal developed the public key method, which is also based on the idea of the Diffie-Hellman key exchange and is used in the current version of the PGP encryption programme.