Docker Alternatives

With the Docker container platform, operating-system-level virtualisation is making a comeback. In just a few years, the developers managed to revive container technology built on native kernel functions. Docker has established itself beyond the boundaries of the Linux universe as a resource-saving alternative to hypervisor-based virtualisation through hardware emulation.

Thanks to extensive media coverage and a constantly growing ecosystem, Docker has become the technological market leader in container-based virtualisation. However, the lean container platform is by no means the only software project developing virtualisation techniques at operating system level.

Which Docker alternatives are available? And how do they differ from what the market leader offers?

Container technology on Linux

On Unix-like systems such as Linux, operating-system-level virtualisation usually builds on the native chroot mechanism. In addition, container projects such as Docker, rkt, LXC, LXD, Linux-VServer, OpenVZ/Virtuozzo, and runC use native Linux kernel features for resource management to implement isolated runtime environments for individual applications or entire operating systems.

Chroot stands for 'change root', a command-line utility available on Unix-like operating systems that changes the root directory of a running process and its child processes. An application running in such a modified environment (a chroot jail) cannot access any files outside the selected directory. However, since chroot was not developed as a security feature, this isolation is relatively easy to overcome.
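To make the confinement rule concrete, here is a small illustrative model (not the kernel's implementation) of how a path seen by a jailed process maps to a host path. It assumes a hypothetical jail directory `/srv/jail`, ignores symbolic links, and mirrors the rule that `..` at a process's root directory stays at that root. The real operation is the `chroot(2)` system call (exposed in Python as `os.chroot`, which requires root privileges).

```python
import posixpath


def resolve_in_jail(jail_root: str, path: str, cwd: str = "/") -> str:
    """Translate a path seen by a jailed process into the host path it refers to.

    Simplified model: symbolic links are not followed, and '..' at the jail's
    root stays at the root, which is how the kernel treats a process's root.
    """
    # Relative paths are interpreted against the working directory inside the jail.
    inside = posixpath.normpath(posixpath.join(cwd, path))
    # For absolute paths, normpath drops '..' components above '/', so the
    # jail-internal path can never climb past the jail's root.
    relative = inside.lstrip("/")
    return posixpath.join(jail_root, relative) if relative else jail_root
```

For example, `resolve_in_jail("/srv/jail", "../../../etc/shadow")` stays inside the jail and yields `/srv/jail/etc/shadow`. The classic escape works precisely because this clamping only applies at the process's current root: a privileged process that calls `chroot` again while its working directory lies outside the new root can walk upwards with repeated `..`.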

Docker

If you work with software containers, you’ll come across the name Docker sooner or later. Within a few years, the open source project from the software company of the same name, Docker Inc., managed to make container technology a hot topic in software development, DevOps, and continuous delivery.


Docker relies on basic Linux kernel functions to shield processes from each other and, using its self-developed runtime environment runC, enables applications to run in parallel in isolated containers without resource-intensive guest systems. This makes the lean container platform an attractive alternative to hypervisor-based virtualisation. A detailed description of the Docker platform and its basic components can be found in our Docker tutorial for beginners.

The Docker container platform is distributed as a free Community Edition (CE) and a fee-based Enterprise Edition (EE). The platform supports various Linux distributions. In addition, Docker has been available as a native app for Windows and macOS since version 1.12. To provide a runtime environment for the Docker engine and the Linux-kernel-specific functions the Docker daemon relies on, Docker for Mac uses the lightweight hypervisor xhyve, while Docker for Windows uses Hyper-V for the same purpose.

Since September 2016, Docker EE has also been available natively for Windows Server 2016. In close collaboration with the Docker development team, Microsoft added several extensions to the Windows kernel that enable Docker to start processes as containers in a sandbox without an abstracting hypervisor. Microsoft has published the source code of hcsshim, the driver developed specifically for this purpose, on GitHub.

The following table shows the key features of the Docker platform:

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Linux version 3.10 or higher |
| Supported Linux distributions | Docker Community Edition (CE): Ubuntu, Debian, CentOS, and Fedora; Docker Enterprise Edition (EE): Ubuntu, Red Hat Enterprise Linux, CentOS, Oracle Linux, and SUSE Linux Enterprise Server |
| Other platforms | Docker CE: Microsoft Windows 10 (Pro, Enterprise, or Education, 64-bit), macOS (Yosemite 10.10.3 or higher), Microsoft Azure, Amazon Web Services (AWS); Docker EE: Microsoft Windows Server 2016, Microsoft Windows 10 (Pro, Enterprise, or Education, 64-bit), Microsoft Azure, Amazon Web Services (AWS) |
| Container format | Docker container |
| Licence | Apache 2.0 |
| Programming language | Go |

A lively ecosystem has developed around the Docker core project over time. According to Docker Inc., the Docker engine is used in more than 100,000 third-party projects.

The main criticism of the way Docker implements Linux container technology concerns the degree of isolation between individual processes on a shared host system. To run application containers, Docker uses native Linux kernel features such as cgroups and namespaces. However, this encapsulation does not achieve the degree of isolation provided by full virtualisation with virtual machines. To ensure safe operation of application containers on production systems, newer versions of the container platform support kernel extensions such as AppArmor, SELinux, Seccomp, and GRSEC, which can additionally shield isolated processes.
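Namespaces can be inspected directly on a Linux host: every entry in `/proc/<pid>/ns` is a symbolic link whose target names the namespace type and an inode number, and two processes share a namespace exactly when those numbers match. The following sketch reads these links with the Python standard library; the function names are our own, and reading another process's entries generally requires root privileges.

```python
from __future__ import annotations

import os
import re

# Each entry in /proc/<pid>/ns is a symbolic link whose target looks like
# "pid:[4026531836]": the namespace type followed by an inode number.
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def namespaces_of(pid: str = "self") -> dict[str, int]:
    """Map each namespace entry of a process to its inode number (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        result[entry] = inode
    return result


def same_namespace(pid_a: str, pid_b: str, entry: str) -> bool:
    """Processes share a namespace exactly when the inode numbers match."""
    return namespaces_of(pid_a)[entry] == namespaces_of(pid_b)[entry]
```

On a Docker host, comparing a shell's own PID with the host PID of a containerised process (for example as reported by `docker inspect --format '{{.State.Pid}}' <container>`) should show different `pid`, `net`, and `mnt` namespaces, while both processes still run on the same kernel.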

| Advantages | Disadvantages |
|---|---|
| Docker supports various operating systems and cloud platforms | The Docker engine only supports its own container format |
| The Docker platform offers native orchestration and cluster management tools with Swarm and Compose | The software is delivered as a single monolithic binary containing all features |
| Docker Hub provides users with a central registry for Docker resources | Docker containers only run individual processes; full-system containers are not supported |
| The ever-growing ecosystem provides users with various Docker tools, plugins, and infrastructure components | |

Container technology was originally developed to run several virtual operating systems in isolated environments on the same kernel; these are known as full-system containers. The Docker container platform, on the other hand, focuses on so-called application containers, in which each application runs in its own virtual environment. While full-system containers are designed to run many different processes, an application container always contains just one process. Complex applications are therefore implemented as multi-container apps with Docker.
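One practical consequence of multi-container apps is start-up ordering: a service should only start after the services it depends on, which is what the `depends_on` key of a Docker Compose service expresses. The sketch below reproduces that ordering idea with a topological sort from Python's standard library (`graphlib`, Python 3.9+). It is not Compose's own code, and the service names are hypothetical.

```python
from graphlib import TopologicalSorter


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return a start order in which each service follows its dependencies.

    `depends_on` maps a service name to the services it needs, similar to the
    depends_on key of a Compose service. Circular dependencies raise
    graphlib.CycleError.
    """
    # TopologicalSorter expects {node: predecessors}, which is exactly this mapping.
    return list(TopologicalSorter(depends_on).static_order())


# Hypothetical multi-container app: web front end, API, database, and cache.
services = {
    "web": ["api"],
    "api": ["db", "cache"],
    "db": [],
    "cache": [],
}
order = start_order(services)
```

Here `db` and `cache` come before `api`, which comes before `web`. A topological sort is a natural fit because it also detects circular dependencies, which no valid start order could satisfy.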

rkt from CoreOS

Docker’s biggest competitor in container-based virtualisation is the runtime environment rkt (pronounced 'rocket') from the Linux distributor CoreOS, presented in 2014. Until then, the CoreOS development team had supported the Docker platform. CEO Alex Polvi explained the split with his dissatisfaction over the direction the Docker project was taking: according to Polvi, the market leader had stopped working towards a standard container technology and was instead focusing on marketing a monolithic application development platform.

In February 2016, CoreOS published the first stable release of the container runtime environment, rkt version 1.0. In contrast to Docker, the competitor set out to impress with additional security features. Besides KVM-based container isolation, these include support for the SELinux kernel extension and signature validation for images in CoreOS's own container specification, App Container (appc). This specification describes the App Container Image (ACI) format, the runtime environment, an image discovery mechanism, and the ability to group images into multi-container apps known as app container pods. Unlike Docker, rkt supports other formats besides its own container images: the runtime environment is compatible with Docker images, and the open source tool Quay can convert any container format to ACI.

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Any modern amd64 kernel |
| Supported Linux distributions | Arch Linux, CentOS, CoreOS, Debian, Fedora, NixOS, openSUSE, Ubuntu, Void |
| Other platforms | macOS or Windows via a Vagrant virtual machine |
| Container format | appc, Docker container; other container images can be converted to the rkt format using Quay |
| Licence | Apache 2.0 |
| Programming language | Go |

While the Docker platform relies on a central daemon that runs in the background with root privileges, rkt does without such a background process and instead works with established init systems such as systemd and upstart to start and manage containers. This circumvents a problem with Docker's daemon-based approach, which affects users who want to start containers via the daemon but lack root rights and therefore only have restricted access to the host system.
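Because rkt has no daemon, the init system itself supervises the process that `rkt run` becomes. The rkt documentation describes running pods as systemd services; the sketch below renders a minimal unit file in that style. The option values (the `machine.slice` slice, `KillMode=mixed`, the `/usr/bin/rkt` path) and the example image name are assumptions to adapt, not a definitive configuration.

```python
def rkt_unit(description: str, image: str, rkt_path: str = "/usr/bin/rkt") -> str:
    """Render a systemd service unit that runs one rkt pod in the foreground.

    systemd supervises the rkt process directly, so starting, stopping, and
    restarting the container are handled by the init system, not a daemon.
    """
    lines = [
        "[Unit]",
        f"Description={description}",
        "",
        "[Service]",
        # machine.slice groups container workloads in systemd's cgroup tree.
        "Slice=machine.slice",
        f"ExecStart={rkt_path} run {image}",
        # SIGTERM goes to the main process only; leftover processes get SIGKILL.
        "KillMode=mixed",
        "Restart=always",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
    ]
    return "\n".join(lines) + "\n"
```

Saved, for instance, as a hypothetical `etcd.service` under `/etc/systemd/system/`, such a unit is started with `systemctl start etcd`, and its logs and cgroup are managed by systemd like those of any other service.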

Since version 1.11, containers are no longer run directly by the Docker daemon. Instead, a separate daemon process called containerd handles this task.

Unlike Docker, rkt is not limited to Linux kernel functions such as cgroups and namespaces when virtualising applications. With CoreOS's runtime environment, containers can also be started as fully encapsulated virtual machines based on KVM (Kernel-based Virtual Machine, e.g. via LKVM or QEMU) or Intel's Clear Containers technology.

The appc specification and its implementation rkt are supported by industry giants such as Google, Red Hat, and VMware.

| Advantages | Disadvantages |
|---|---|
| rkt supports Docker containers as well as its own container format and can convert any container image to the rkt format ACI via Quay | Fewer third-party integrations are available for rkt than for Docker |
| Technologies such as KVM and Intel’s Clear Containers make it possible to shield software containers safely from one another | rkt is optimised for running application containers; full-system containers are not supported |

LXC

The Docker alternative, LXC, is a set of tools, templates, libraries, and language bindings, which together represent a userspace interface to the native container functions of the Linux kernel. For Linux users, LXC provides a convenient way to create and manage application and system containers.

Language bindings are adapters – so-called wrappers – that bridge the gap between different programming languages, allowing different program parts to be connected to one another.
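As an example of such a binding, the LXC project provides a Python 3 module called `lxc`. The following sketch, based on our understanding of that binding's documented API (`lxc.Container`, `create`, `start`, `defined`, `state`), creates a system container from the `download` template and starts it. The container name and the distribution, release, and architecture values are hypothetical, and running it requires the `python3-lxc` package and sufficient privileges; the module is therefore imported only inside the function.

```python
def download_options(dist: str, release: str, arch: str) -> dict:
    """Arguments for LXC's 'download' template, which fetches a prebuilt image."""
    return {"dist": dist, "release": release, "arch": arch}


def create_and_start(name: str, dist: str = "ubuntu", release: str = "xenial",
                     arch: str = "amd64") -> str:
    """Create a system container via the python3-lxc binding and start it."""
    import lxc  # imported lazily: only available where python3-lxc is installed

    container = lxc.Container(name)
    if not container.defined:
        # Roughly 'lxc-create -t download -n NAME -- -d DIST -r RELEASE -a ARCH'.
        if not container.create("download", lxc.LXC_CREATE_QUIET,
                                download_options(dist, release, arch)):
            raise RuntimeError(f"could not create container {name!r}")
    if not container.start():
        raise RuntimeError(f"could not start container {name!r}")
    return container.state  # e.g. "RUNNING" once the container is up
```

The binding maps onto the same C library (liblxc) that the `lxc-*` command-line tools use, so both approaches manage the same containers.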

To shield processes from each other, LXC uses the following isolation techniques:

- IPC, UTS, Mount, PID, network and user namespaces

- Cgroups

- AppArmor and SELinux profiles

- Seccomp rules

- Chroots

- Kernel capabilities
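Kernel capabilities split the privileges of the root user into individual flags that container tools can drop selectively. On Linux, the effective set of a process appears as a hexadecimal bit mask in the `CapEff` line of `/proc/<pid>/status`. The sketch below decodes such a mask using the bit numbers from `<linux/capability.h>` (only the first 32 are listed, for brevity). The example mask `0xa80425fb` is, as far as we know, the set Docker grants containers by default; treat it as an illustration. The `capsh` utility from libcap offers a `--decode` option for the same purpose.

```python
from __future__ import annotations

# Capability names indexed by bit number, from <linux/capability.h> (bits 0-31).
CAP_NAMES = [
    "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_DAC_READ_SEARCH", "CAP_FOWNER",
    "CAP_FSETID", "CAP_KILL", "CAP_SETGID", "CAP_SETUID",
    "CAP_SETPCAP", "CAP_LINUX_IMMUTABLE", "CAP_NET_BIND_SERVICE", "CAP_NET_BROADCAST",
    "CAP_NET_ADMIN", "CAP_NET_RAW", "CAP_IPC_LOCK", "CAP_IPC_OWNER",
    "CAP_SYS_MODULE", "CAP_SYS_RAWIO", "CAP_SYS_CHROOT", "CAP_SYS_PTRACE",
    "CAP_SYS_PACCT", "CAP_SYS_ADMIN", "CAP_SYS_BOOT", "CAP_SYS_NICE",
    "CAP_SYS_RESOURCE", "CAP_SYS_TIME", "CAP_SYS_TTY_CONFIG", "CAP_MKNOD",
    "CAP_LEASE", "CAP_AUDIT_WRITE", "CAP_AUDIT_CONTROL", "CAP_SETFCAP",
]


def decode_caps(mask: int) -> list[str]:
    """Names of the capabilities whose bits are set; unknown bits become CAP_<n>."""
    names = []
    bit = 0
    while mask >> bit:  # stop once no higher bits remain set
        if (mask >> bit) & 1:
            names.append(CAP_NAMES[bit] if bit < len(CAP_NAMES) else f"CAP_{bit}")
        bit += 1
    return names


def effective_caps(pid: str = "self") -> list[str]:
    """Read the CapEff line from /proc/<pid>/status (Linux only) and decode it."""
    with open(f"/proc/{pid}/status") as status:
        for line in status:
            if line.startswith("CapEff:"):
                return decode_caps(int(line.split()[1], 16))
    raise RuntimeError("CapEff line not found")
```

Decoding `0xa80425fb` yields 14 capabilities such as `CAP_CHOWN` and `CAP_NET_BIND_SERVICE`, but not `CAP_SYS_ADMIN`, which is why a process running as root inside such a container still cannot, for example, mount file systems.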

The aim of the LXC project is to create a software container environment that is as similar to a standard Linux installation as possible. LXC is developed alongside the open source projects LXD, LXCFS, and CGManager under the LinuxContainers.org project.

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Linux version 2.6.32 or higher |
| Supported Linux distributions | LXC is included in most Linux distributions. Required: a C library (glibc, musl libc, uClibc, or Bionic); additional libraries: libcap, libapparmor, libselinux, libseccomp, libgnutls, liblua, python3-dev |
| Other platforms | None |
| Container format | Linux container (LXC) |
| Licence | GNU LGPLv2.1+ |
| Programming language | C, Python 3, Shell, Lua |

LXC was designed to run system containers (full-system containers) on a shared host system. A Linux container usually starts a complete distribution from an operating system image in a virtual environment, and users interact with it much as they would with a virtual machine.

Individual applications are rarely started in Linux containers, which distinguishes the software significantly from the Docker project. While LXC is primarily dedicated to system virtualisation, Docker focuses on virtualising and deploying individual apps. Docker originally used Linux containers too, but nowadays relies on its own container format.

A major difference between the two technologies is that Linux containers can run any number of processes, whereas Docker containers execute only one process. Complex Docker applications therefore usually consist of multiple containers, and deploying such multi-container apps effectively requires additional tools.

Docker containers and Linux containers also differ in portability. If a user develops software based on LXC on a local test system, they cannot simply assume that the container will run flawlessly on other systems (e.g. a production system). The Docker platform, by contrast, abstracts applications much more strongly from the characteristics of the underlying system. This allows the same Docker container to run on any Docker host (a system on which the Docker engine is installed), regardless of its operating system and hardware configuration.

LXC also does without a central daemon process. Instead, it integrates with init systems such as systemd and upstart to start and manage containers.

| Advantages | Disadvantages |
|---|---|
| LXC is optimised for running full-system containers | Running application containers is not a standard use case |
| | There is no native implementation for operating systems other than Linux |

LXD from Canonical

As a follow-up project to LXC, the Linux distributor Canonical launched the Docker alternative LXD (pronounced 'lexdi') in November 2014. The project builds on Linux container technology and extends it with the LXD daemon process. The software sees itself as a kind of container hypervisor. Its technical structure consists of three components: the LXD daemon, the command-line client lxc, and the OpenStack Nova plug-in nova-compute-lxd. Communication between the client and the daemon takes place via a REST API.

Nova is the central compute component of OpenStack, an open source cloud operating system used to deploy and manage virtual systems in cloud architectures. OpenStack Nova supports various virtualisation technologies, both hypervisor-based and at operating system level.

The aim of the software project is to provide a user experience based on Linux container technology similar to the operation of virtual machines, without having to accept the overhead of an emulation of hardware resources.

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Same as LXC |
| Supported Linux distributions | Command-line client (lxc): Ubuntu 14.04 LTS, Ubuntu 16.04 LTS; nova-compute-lxd: Ubuntu 16.04 LTS |
| Other platforms | None |
| Container format | Linux container (LXC) |
| Licence | Apache 2.0 |
| Programming language | Go |

Like LXC, LXD focuses on providing full-system containers. This role as a machine management tool distinguishes LXD from Docker and rkt, whose core functions lie in software deployment. LXD uses the same isolation technology as the underlying LXC project. The daemon requires a Linux kernel; other operating systems are not supported. However, since communication with the daemon takes place via a REST API, it can be accessed remotely from a Windows or macOS client. In a blog article from February 2017, LXD project lead Stéphane Graber describes how to set up the standard LXD client on these operating systems.
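Because the interface is plain HTTP with JSON responses, any language can talk to LXD. Locally, the daemon listens on a Unix domain socket; the sketch below sends a `GET` request over such a socket using only Python's standard library. The socket path is an assumption: `/var/lib/lxd/unix.socket` applies to classic package installations, whereas snap installations typically use `/var/snap/lxd/common/lxd/unix.socket`.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # The host name only ends up in the Host header of the request.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def lxd_get(path: str, socket_path: str = "/var/lib/lxd/unix.socket") -> dict:
    """Send GET <path> to the local LXD daemon and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()
```

On a host running LXD, `lxd_get("/1.0")` returns general server information and `lxd_get("/1.0/containers")` lists the containers in the `metadata` field of the response. Remote clients reach the same API over HTTPS instead of the local socket.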

| Advantages | Disadvantages |
|---|---|
| LXD is optimised for running full-system containers | Running application containers is not a standard use case |
| The LXD client can be configured for Windows and macOS, allowing the LXD daemon to be controlled remotely via the REST API | The LXD daemon requires a Linux kernel |

Linux-VServer

Linux-VServer is an operating-system-level virtualisation technology which, like software containers, is based on the isolation technologies of the Linux kernel. Several virtual units run on a shared Linux kernel whose resources (file system, CPU, network addresses, and memory) are divided into separate partitions by a jail mechanism. In Linux-VServer terminology, these partitions are called 'security contexts' and are created with standard techniques such as segmented routing, chroot, and quotas. A virtualised system in such a security context is called a Virtual Private Server (VPS).

Since VPSs run as isolated processes on the same host system and use the same system call interface, there is no additional overhead through emulation.

Operating-system-level virtualisation with Linux-VServer has nothing to do with the Linux Virtual Server project, which develops a load-balancing technique for Linux clusters.

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Linux version 2.6.19.7 or higher |
| Supported Linux distributions | All Linux distributions |
| Other platforms | None |
| Container format | Security contexts (a container-like concept) |
| Licence | GNU GPL v.2 |
| Programming language | C |

The open source project launched by Jacques Gélinas was, until recently, headed by the Austrian developer Herbert Pötzl. The technology is used, for example, by web hosting providers who want to offer their customers separate virtual machines on shared hardware.

To provide operating-system-level virtualisation features, the Linux kernel must be patched. This kernel modification fundamentally distinguishes the technology from Linux containers (LXC), which rely on the native isolation functions cgroups and namespaces.

The last release, VServer 2.2, dates from 2007.

| Advantages | Disadvantages |
|---|---|
| While Docker can only save changes in a container by creating a new image from a running container, Linux-VServer provides a common file system for all VPSs on the host in which current versions can be saved | Linux-VServer requires a modification of the Linux kernel |
| | No new releases since 2007 |

OpenVZ/Virtuozzo 7

Version 7 of Parallels' virtualisation platform OpenVZ has been available as a stand-alone Linux distribution since July 2016. The software is based on Red Hat Enterprise Linux 7 (RHEL) and can run guest systems either as virtual machines or as containers. With the new codebase, OpenVZ moves closer to Virtuozzo 7, which Parallels distributes as a commercial enterprise product. A direct comparison of both virtualisation solutions can be found on the OpenVZ Wiki.

Compared to the previous version, OpenVZ 7 offers a set of new command line tools, the so-called guest tools. These enable users to perform hosting tasks directly from the host system terminal. In addition, the self-developed hypervisor for the operation of virtual machines was replaced by the established standard technologies KVM and QEMU.

For containers, OpenVZ continues to use its own format, Virtuozzo containers. Like LXC, these primarily serve to virtualise complete systems (VPS), which distinguishes them from Docker and rkt. Unlike LXC, however, OpenVZ offers live migration via Checkpoint/Restore In Userspace (CRIU), which saves the state of a running container as persistent image files.
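Conceptually, a CRIU-based live migration consists of three steps: dump the state of a running process tree to image files on the source host, transfer those files, and restore the tree on the target host. OpenVZ drives this through its own tooling; the sketch below only builds the corresponding plain `criu` command lines (which could be passed to `subprocess.run(cmd, check=True)` as root). The PID and directory are hypothetical, and the transfer step (for example via rsync) is left out.

```python
from __future__ import annotations


def criu_dump_cmd(pid: int, images_dir: str, leave_running: bool = False) -> list[str]:
    """Command that checkpoints the process tree rooted at `pid` into image files."""
    # --shell-job allows checkpointing a tree that is attached to a terminal session.
    cmd = ["criu", "dump", "--tree", str(pid), "--images-dir", images_dir, "--shell-job"]
    if leave_running:
        # Keep the original processes running after the checkpoint is written.
        cmd.append("--leave-running")
    return cmd


def criu_restore_cmd(images_dir: str) -> list[str]:
    """Command that recreates the checkpointed process tree from the image files."""
    return ["criu", "restore", "--images-dir", images_dir, "--shell-job"]
```

Without `--leave-running`, the dumped processes are stopped on the source host, so that after the restore only one copy of the container is running.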

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | RHEL7 (3.10) |
| Supported Linux distributions | Virtuozzo Linux, RHEL7 |
| Other platforms | None |
| Container format | Virtuozzo containers |
| Licence | OpenVZ: GNU GPL v.2, Virtuozzo 7: proprietary licence |
| Programming language | C |

Technically, OpenVZ and Virtuozzo are an extension of the Linux kernel that provides various virtualisation tools at user level. Guest systems are implemented in so-called virtual environments (VE), which run in isolation on the same Linux kernel. As with the other container technologies, this avoids the overhead of hypervisor-based hardware emulation. However, the shared Linux kernel means that all virtualised guest systems are tied to the system architecture and kernel version of the host system. The core functions of OpenVZ include dynamic real-time partitioning, resource management, and centralised management of multiple physical and virtual servers.

- Dynamic real-time partitioning: each VPS is an isolated partition of the underlying physical server. The isolation covers its own file systems, user groups (including its own root user), process trees, network addresses, and IPC objects.

- Resource management: OpenVZ allocates hardware resources via so-called resource management parameters, which are managed by the system administrator in the global configuration file and the corresponding container configuration files.
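The container configuration files mentioned above use shell-style `KEY="value"` lines, and in the classic vzctl-style format many resource parameters are written as a `barrier:limit` pair. The following sketch parses such a file; the sample keys and values are hypothetical, and the rule that a single value applies to both barrier and limit is our assumption.

```python
from __future__ import annotations

import shlex


def parse_ct_config(text: str) -> dict[str, str]:
    """Parse shell-style KEY="value" lines, ignoring comments and blank lines."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, raw = line.partition("=")
        if not sep:
            continue  # not an assignment
        # shlex.split removes the surrounding quotes the way a shell would.
        parts = shlex.split(raw)
        config[key.strip()] = parts[0] if parts else ""
    return config


def barrier_limit(value: str) -> tuple[int, int]:
    """Split a 'barrier:limit' parameter; assumption: one value sets both."""
    barrier, _, limit = value.partition(":")
    return int(barrier), int(limit or barrier)
```

The barrier is the soft threshold a container may reach, while the limit is the hard ceiling it can never exceed; keeping both in one field is what makes a small helper like `barrier_limit` useful.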

To manage virtual machines and system containers, OpenVZ and Virtuozzo rely on the Red Hat management toolkit libvirt, which consists of an open source API, the libvirtd daemon, and the command-line utility virsh. While the enterprise product Virtuozzo ships with the integrated GUI Parallels Virtual Automation (PVA), the basic installation of OpenVZ comes without a graphical user interface. However, users can add one via third-party software. The OpenVZ developer team recommends the OpenVZ Web Panel from SoftUnity. Further alternatives can be found on the OpenVZ Wiki.

| Advantages | Disadvantages |
|---|---|
| With OpenVZ and Virtuozzo, Parallels offers a complete Linux distribution optimised for virtualisation scenarios | OpenVZ and Virtuozzo provide containers for running complete operating systems. Anyone looking for a professional Docker alternative for isolating individual processes should choose a different platform |
| OpenVZ and Virtuozzo make it possible to run virtual machines with minimal overhead in addition to system containers | The use of OpenVZ and Virtuozzo is limited to the Linux distributions RHEL7 and Virtuozzo Linux |

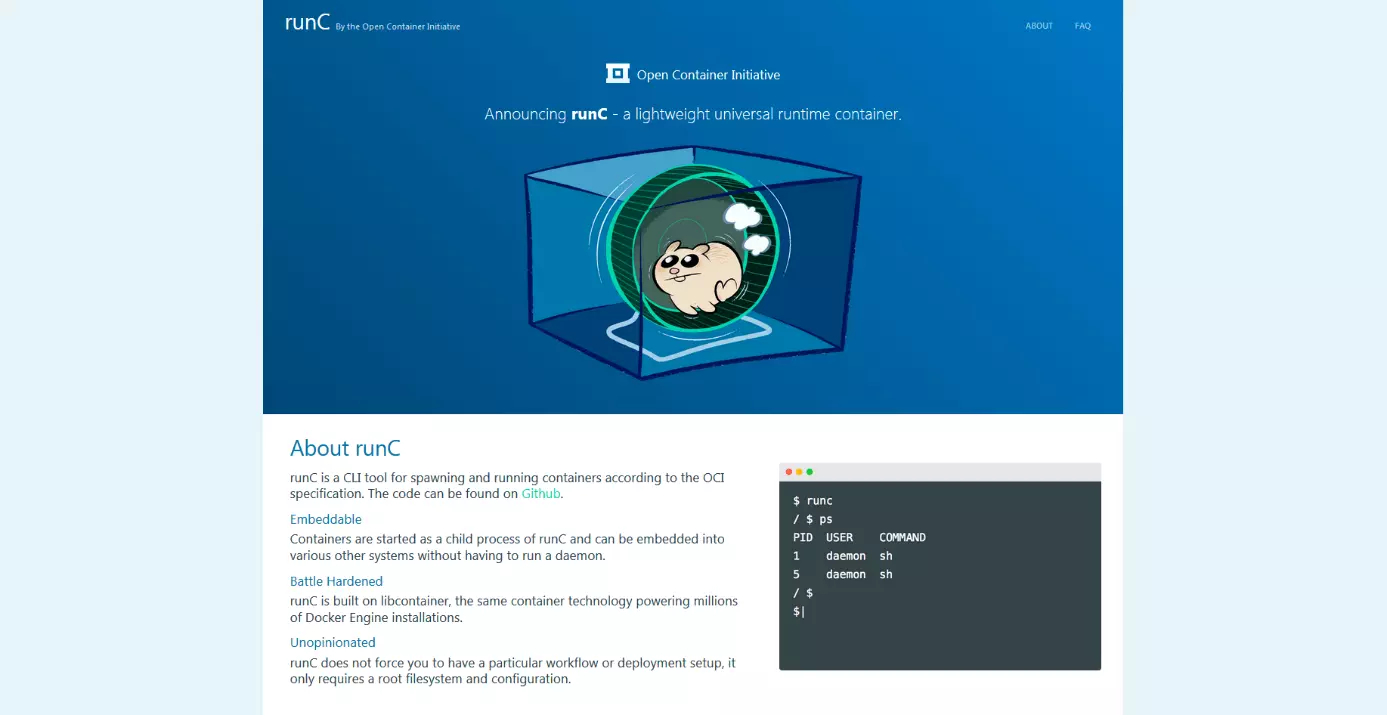

runC

runC is less a Docker alternative than a spin-off of the container runtime environment developed by Docker, turned into an independent open source project under the patronage of the Open Container Initiative (OCI).

In 2015, Docker and other companies in the container industry launched the OCI, a non-profit project under the umbrella of the Linux Foundation, to establish an open industry standard for software containers. Currently, the OCI provides specifications for a container runtime environment (runtime-spec) and a container image format (image-spec). The open source runtime environment runC can be considered the reference implementation of these specifications.
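A central idea of the OCI image format is content addressing: manifests do not embed layers or configuration, but reference them through descriptors containing a media type, a digest, and a size. The sketch below builds and checks such a descriptor with Python's `hashlib`; it is an illustration of the principle, not code from an OCI library.

```python
import hashlib


def descriptor(blob: bytes, media_type: str) -> dict:
    """Build an OCI-style content descriptor: the blob is addressed by its digest."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def verify(blob: bytes, desc: dict) -> bool:
    """Check a fetched blob against the size and digest recorded in its descriptor."""
    algorithm, _, expected = desc["digest"].partition(":")
    if len(blob) != desc["size"]:
        return False
    return hashlib.new(algorithm, blob).hexdigest() == expected
```

Because the digest is derived from the content itself, a registry or runtime can detect any corrupted or tampered layer simply by hashing it again, and identical layers shared by several images need to be stored only once.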

System requirements and supported systems

| Feature | Details |
|---|---|
| Required Linux kernel | Any Linux kernel |
| Supported Linux distributions | All current Linux distributions |
| Other platforms | None |
| Container format | OCI bundle |
| Licence | Apache 2.0 |
| Programming language | Go |

The OCI runtime environment only supports containers in the OCI bundle format and needs nothing more than a root file system and an OCI configuration file to execute a container. The project does not provide a tool for creating root file systems. However, users who have the Docker platform installed can use Docker's export function to extract a root file system from an existing Docker container, add a config.json, and thus create an OCI bundle. In addition, external tools such as oci-image-tools, skopeo, and umoci can help with image creation. As with the Docker alternatives rkt and LXC, runC does not use a central daemon process. Instead, the container runtime integrates with the init system systemd.
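The runC README describes this workflow roughly as: create a bundle directory with a `rootfs` subdirectory, fill it with `docker export $(docker create busybox) | tar -C rootfs -xvf -`, generate a default `config.json` with `runc spec`, and start the container with `runc run <id>`. Before running a bundle, a few basic properties can be checked; the sketch below tests only these basics (it is not a full validation against the runtime specification).

```python
import json
import os


def check_bundle(bundle_dir: str) -> list:
    """Return a list of problems with an OCI bundle; empty if the basics look right.

    Checks that config.json exists and parses, declares ociVersion, names a
    process to run, and that root.path points to an existing directory.
    """
    config_path = os.path.join(bundle_dir, "config.json")
    try:
        with open(config_path) as f:
            config = json.load(f)
    except FileNotFoundError:
        return ["config.json is missing"]
    except json.JSONDecodeError as exc:
        return [f"config.json is not valid JSON: {exc}"]

    problems = []
    if "ociVersion" not in config:
        problems.append("ociVersion is missing")
    if not config.get("process", {}).get("args"):
        problems.append("process.args is empty")
    root = config.get("root", {}).get("path")
    if not root:
        problems.append("root.path is missing")
    # root.path may be absolute or relative to the bundle; join handles both.
    elif not os.path.isdir(os.path.join(bundle_dir, root)):
        problems.append(f"root file system {root!r} not found")
    return problems
```

The checks follow the two ingredients named above: without a root file system or a configuration file, runC has nothing to execute.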

| Advantages | Disadvantages |
|---|---|
| runC is based on the industry standard of the Open Container Initiative (OCI) | External tools are required to create container images |

A comparison of the Docker alternatives

The following table shows a comparison of all the alternatives to Docker presented in this article.

| | Docker | rkt | LXC | LXD |
|---|---|---|---|---|
| Virtualisation technologies | OS level | OS level, hypervisor | OS level | OS level |
| Full-system container | No | No | Yes | Yes |
| App container | Yes | Yes | No | No |
| Licence | Apache 2.0 | Apache 2.0 | GNU LGPLv2.1+ | Apache 2.0 |
| Container format | Docker container | appc, Docker container | Linux container (LXC) | Linux container (LXC) |
| Supported platforms | Linux, Windows, macOS, Microsoft Azure, Amazon Web Services (AWS) | Linux, Windows, macOS | Linux | Linux |
| Last release | April 2017 | February 2017 | January 2017 | March 2017 |
| Linux kernel patch necessary | No | No | No | No |
| Programming language | Go | Go | C, Python 3, Shell, Lua | Go |

| | Linux-VServer | OpenVZ | runC |
|---|---|---|---|
| Virtualisation technologies | OS level | OS level, hypervisor | OS level |
| Full-system container | Yes | Yes | No |
| App container | No | No | Yes |
| Licence | GNU GPL v.2 | GNU GPL v.2 | Apache 2.0 |
| Container format | Security context | Virtuozzo containers | OCI bundle |
| Supported platforms | Linux | Linux (only Virtuozzo Linux, RHEL7) | Linux |
| Last release | April 2007 | July 2016 | March 2017 |
| Linux kernel patch necessary | Yes | Stand-alone distribution | No |
| Programming language | C | C | Go |

Container technology in other operating systems

The concept of partitioning system resources by means of kernel isolation mechanisms and running independently encapsulated processes on the same system can be found in various Unix-like operating systems. Comparable to Linux containers are the jails of BSD systems and the zones introduced with Solaris 10. For Windows systems, there are container concepts such as Microsoft Drawbridge, WinDocks, Sandboxie, Turbo, and VMware ThinApp.

FreeBSD Jail

So-called jails are one of the most distinctive security features of the Unix-like operating system FreeBSD. A jail is an extended chroot environment that sets up a complete virtual instance of the operating system in a separate directory and offers a higher degree of isolation than chroot jails on Linux. Each jail has its own directory tree. In addition, the process space as well as access to user groups, network interfaces, and IPC mechanisms are restricted. As a result, processes in a jail cannot access directories or files outside the isolated environment, nor affect other processes on the host system. Each jail can also be assigned its own hostname and IP address.
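A jail can be created directly from the command line with FreeBSD's `jail(8)` utility by passing parameters such as the jail's directory, hostname, and IP address. The sketch below only assembles such a command line; the jail name, path, hostname, and documentation-range IP address are hypothetical, and in practice these settings are usually kept in `/etc/jail.conf` instead.

```python
from __future__ import annotations


def jail_create_cmd(name: str, path: str, hostname: str, ip4: str,
                    command: tuple[str, ...] = ("/bin/sh", "/etc/rc")) -> list[str]:
    """Build a jail(8) command line that creates and starts a jail."""
    return [
        "jail", "-c",
        f"name={name}",
        f"path={path}",               # root of the jail's own directory tree
        f"host.hostname={hostname}",  # hostname visible inside the jail
        f"ip4.addr={ip4}",            # IPv4 address the jail may use
        "mount.devfs",                # mount a devfs inside the jail
        # 'command' goes last: jail(8) treats the following words as its arguments.
        f"command={command[0]}", *command[1:],
    ]
```

Running `/etc/rc` as the start command boots the jail much like a separate system, which fits the full-system orientation of jails described above.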

For each jail, independent user groups whose rights are limited to the jail environment can be defined via the jail's own user management. For example, a user with extensive privileges inside a jail cannot perform critical system operations outside the virtual environment. This ensures that an attacker who compromises a jail cannot cause major damage to the host system.

FreeBSD is a free, open source version of the Berkeley Software Distribution (BSD), a variant of the Unix operating system developed at the University of California in Berkeley in 1977.

| Advantages | Disadvantages |
|---|---|
| Optimised for full-system virtualisation | Isolation of individual processes (as with Docker) is not supported |
| | Virtualisation via jails requires a BSD system (NetBSD, FreeBSD, or OpenBSD) |

Oracle Solaris Zones

With Solaris, several shielded runtime environments can also be set up within a single operating system installation. These are known as zones and share the common operating system kernel. A distinction is made between global and non-global zones.

- Global zone: each Solaris installation includes a global zone that acts as the standard operating system for administration. All system processes run in the global zone unless they have been moved to non-global zones.

- Non-global zones: these are separate virtual environments created within the global zone of a Solaris installation. As with FreeBSD jails, the isolation of non-global zones is based on a modified chroot jail. Each zone is assigned its own hostname and virtual network interface. Shares of the underlying hardware resources are either allocated via fair-share scheduling or fixed as part of resource management.
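Fair-share scheduling works with relative weights rather than fixed percentages: each zone is given a number of CPU shares (in Solaris, as far as we know, via the `zone.cpu-shares` resource control), and a zone's entitlement is its shares divided by the total shares of all zones currently competing for the CPU. The sketch below computes these fractions for a hypothetical set of zones.

```python
from __future__ import annotations


def fss_entitlements(shares: dict[str, int], busy: set[str]) -> dict[str, float]:
    """CPU fraction each zone is entitled to under fair-share scheduling (FSS).

    Only zones that currently want CPU count towards the total, so the shares
    of idle zones are effectively redistributed among the busy ones.
    """
    total = sum(n for zone, n in shares.items() if zone in busy)
    return {
        zone: (n / total if zone in busy and total else 0.0)
        for zone, n in shares.items()
    }


# Hypothetical allocation: the web zone gets twice the weight of the others.
shares = {"global": 1, "web": 2, "db": 1}
```

With all three zones busy, `web` receives half of the CPU time and the others a quarter each; if the global zone is idle, `web` and `db` split the CPU 2:1. Shares thus guarantee minimums under contention without wasting capacity when a zone is idle.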

Similar to other approaches to virtualisation on an operating system level, Solaris zones provide a resource-saving way to implement various isolated operating systems on a common system instance.

| Advantages | Disadvantages |
|---|---|
| Implementing the Solaris zones natively enables a very effective operation of virtual environments with minimal overhead | Using Solaris zones requires the proprietary operating system Oracle Solaris or its open source version, OpenSolaris |

Container technology for Windows

Since the integration of a native Docker port in Windows Server 2016, container technology has also entered the Microsoft universe. To this end, the Windows kernel was expanded in close collaboration with the Docker development team to include functionalities similar to control groups and namespaces under Linux, enabling a resource-saving virtualisation on an operating system level.

The native Docker engine for Windows Server 2016 differs from the Docker for Windows and Docker for Mac applications. While the latter run the Linux-based Docker engine in a lean virtual machine, the Docker version for Windows Server 2016 uses the Windows kernel directly. Classic Docker containers therefore cannot run on Windows Server 2016. Instead, a new container format called WSC (Windows Server Container) was developed as part of the Docker project. In addition, Microsoft offers a Hyper-V-based container variant that provides better isolation.

- Windows Server Container: with Windows Server Containers (WSC), Microsoft provides a container technology for the Docker platform that isolates processes using extended Windows kernel functions. As with the Linux technology, Windows Server Containers share the kernel of the underlying host system (the container host).

- Hyper-V-Container: with Hyper-V Containers, Microsoft provides a way to shield container instances by using traditional hypervisor-based virtualisation. Hyper-V Containers are virtual machines that can be controlled via the Docker platform, just like Windows Server Containers, but have a much higher degree of isolation with their own Windows kernel.
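On Windows hosts, the Docker CLI chooses between the two variants with the `--isolation` option of `docker run`, which accepts `process`, `hyperv`, or `default`. The sketch below assembles such a command line; the image name `microsoft/nanoserver` and the command are examples only.

```python
from __future__ import annotations

# Isolation modes accepted by `docker run --isolation` on Windows hosts.
ISOLATION_MODES = ("default", "process", "hyperv")


def docker_run_cmd(image: str, isolation: str = "default",
                   command: list[str] | None = None) -> list[str]:
    """Build a `docker run` command that selects the container isolation mode."""
    if isolation not in ISOLATION_MODES:
        raise ValueError(f"unknown isolation mode: {isolation!r}")
    # 'process' -> Windows Server Container (shares the host kernel);
    # 'hyperv'  -> Hyper-V Container (lightweight VM with its own kernel).
    return ["docker", "run", "--rm", f"--isolation={isolation}", image, *(command or [])]
```

Since both variants use the same image and the same CLI, switching a workload to stronger isolation only changes this one option.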

Even before Microsoft implemented native container technologies in the Windows kernel as part of its collaboration with Docker, various developers had worked on resource-saving virtualisation methods for Windows systems. Microsoft Drawbridge, WinDocks, Sandboxie, Turbo, and VMware ThinApp are among the best-known projects.

Microsoft Drawbridge

Under the name Drawbridge, Microsoft developed a prototype virtualisation technology based on the concept of the library OS, an operating system implemented as a set of libraries within an application. With Drawbridge, applications run together with the library OS in so-called pico processes, which are process-based isolation containers with a kernel interface. In the Windows Server container documentation, Microsoft cites the experience gained with Drawbridge as an important input for developing container technology for Windows Server 2016.

WinDocks

WinDocks is a Docker port for Windows, which is used to create and manage app containers for .NET applications and data containers for SQL servers. Unlike Windows server containers that are currently limited to Windows 10 and Windows Server 2016 systems, WinDocks is also available for older operating systems such as Windows Server 2012, Windows Server 2012 R2, as well as Windows 8 and 8.1. The software is offered for free as the Community edition and as an Enterprise product with customer support.

Sandboxie

Sandboxie lets applications run on Windows in an isolated environment called a sandbox. Similar to container technology, this method aims to shield the underlying host system and other applications from the activities of isolated programs. To do this, the tool places itself between the application and the operating system, intercepts write accesses to the hard disk, and redirects them to a protected area. Besides file access, this also applies to write requests to the Windows registry. Sandboxie is available as a free basic version for all current versions of Windows as well as for XP and Vista. Fee-based versions with extended features are also available for private and commercial use.
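The redirection principle can be modelled in a few lines: reads fall through to the real data unless the sandbox holds its own version, while writes and deletions only ever touch the sandbox layer. The class below is a conceptual toy model of this copy-on-write behaviour, not Sandboxie's implementation; the dictionary stands in for the disk or registry, and the file path is hypothetical.

```python
_DELETED = object()  # marker: "this entry was deleted inside the sandbox"


class RedirectingStore:
    """Toy model of sandbox write redirection; not Sandboxie's actual code.

    `host` stands in for the real disk or registry. Reads fall through to it
    unless the sandbox holds its own version; writes and deletions only ever
    touch the sandbox layer, so the host data stays unchanged.
    """

    def __init__(self, host: dict):
        self.host = host
        self.sandbox = {}  # the protected area that receives redirected writes

    def read(self, key):
        if key in self.sandbox:
            value = self.sandbox[key]
            if value is _DELETED:
                raise KeyError(key)
            return value
        return self.host[key]

    def write(self, key, value) -> None:
        self.sandbox[key] = value

    def delete(self, key) -> None:
        self.read(key)  # raises KeyError if the entry is not visible
        self.sandbox[key] = _DELETED

    def discard(self) -> None:
        """Throw away all changes made inside the sandbox."""
        self.sandbox.clear()
```

Because the host data is never modified, emptying the sandbox (`discard`) restores the original state in one step, which is what makes this approach attractive for testing untrusted software.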

Turbo (formerly Spoon)

Turbo is a Docker alternative for Windows that packs applications and all their dependencies, such as .NET, Java, or databases like SQL Server or MongoDB, into isolated containers. Unlike Windows Server Containers, however, these are not natively supported by the Windows kernel, which is why a virtual machine (similar to Docker for Windows) is needed to compensate for the differences. Turbo containers therefore run on the Spoon Virtual Machine Engine (SVM), which acts as an interface to the Windows kernel. As with Docker, container applications are shared via a cloud-based hub. The software is well documented but receives far less attention than Docker.

VMware ThinApp

VMware ThinApp is a tool for application virtualisation in desktop environments. It makes it possible to deploy applications without conflicts in complex IT infrastructures. To do this, each application and all its dependencies are packaged into an executable EXE or MSI file and thus isolated from the underlying operating system and other applications. The file created by ThinApp can be run on any Windows system without installation (and therefore without admin rights), optionally also from a portable storage medium such as a USB flash drive. ThinApp is a suitable alternative to Docker when migrating legacy applications or isolating critical programs.