Apache Hadoop

Big Data: the buzzword for the massive amounts of data generated by our increasingly digitised lives. Companies around the world are busy developing more efficient methods for collecting electronic data on a massive scale and storing it on enormous storage architectures, where it is then systematically processed. Volumes of data measured in petabytes or exabytes are therefore no longer a rarity. But no single system can effectively process data of this magnitude. For this reason, Big Data analyses require software platforms that distribute complex computing tasks across a large number of computer nodes. One prominent solution is Apache Hadoop, a framework that serves as the basis for several distributions and Big Data suites.

What is Hadoop?

Apache Hadoop is a Java-based framework made up of a range of software components that make it possible to split tasks (jobs) into sub-processes. These are distributed across different nodes of a computer cluster, where they run in parallel. Large Hadoop architectures use thousands of individual computers. The advantage of this concept is that each computer in the cluster only has to provide a fraction of the required hardware resources. Working with large quantities of data therefore does not necessarily require high-end computers and can instead be carried out on a number of cost-effective servers.

The open source project Hadoop was initiated in 2006 by the developer Doug Cutting and can be traced back to Google’s MapReduce algorithm. In 2004, the search engine provider published information on a new technology that made it possible to parallelise complex computing processes on large data quantities with the help of a computer cluster. Cutting, who had worked at Excite, Apple Inc. and Xerox PARC and had already made a name for himself with Apache Lucene, soon recognised the potential of MapReduce for his own project and received support from his employer at the time, Yahoo. In 2008, Hadoop became a top-level project of the Apache Software Foundation, and in 2011 the framework finally reached release 1.0.0.

In addition to Apache’s official release, various forks of the framework are available as business-oriented distributions that software vendors provide to their customers. One such distribution comes from Doug Cutting’s employer, Cloudera, which offers an ‘enterprise-ready’ open source distribution called CDH. Hortonworks and Teradata offer similar products, and Microsoft and IBM have both integrated Hadoop into their respective products, the cloud service Azure and InfoSphere BigInsights.

Hadoop Architecture: set-up and basic components

Generally, when people refer to Hadoop, they mean an extensive software package, sometimes also called the Hadoop ecosystem. It includes the system’s core components (Core Hadoop) as well as various extensions (many with colourful names like Pig, Chukwa, Oozie or ZooKeeper) that add further functions for processing large amounts of data to the framework. These closely related projects also come from the Apache Software Foundation.

Core Hadoop constitutes the basis of the Hadoop ecosystem. In version 1, the integral components of the software core are the base module Hadoop Common, the Hadoop Distributed File System (HDFS) and a MapReduce engine. In version 2, the MapReduce engine was replaced by the cluster management system YARN (also referred to as MapReduce 2.0). This set-up separates the MapReduce algorithm from the actual resource management system, so that MapReduce runs as one YARN-based application among others.

Hadoop Common

The Hadoop Common module provides all of the framework’s other components with a set of basic functions. These include:

- Java archive files (JAR), which are needed to start Hadoop,

- Libraries for serialising data,

- Interfaces for accessing the file system of the Hadoop architecture, as well as for remote procedure call (RPC) communication within the computer cluster.

Hadoop Distributed File System (HDFS)

HDFS is a highly available file system that stores large quantities of data in a computer cluster and is therefore responsible for storage within the framework. To this end, files are split into blocks of data, which are then distributed redundantly across different nodes; this happens without any predefined organisational scheme. According to the developers, HDFS is able to manage millions of files.
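To make the block principle concrete, the following minimal Python sketch computes how a file of a given size is cut into fixed-size blocks. It assumes a block size of 128 MB (the `dfs.blocksize` default from Hadoop 2 onwards; Hadoop 1 used 64 MB), and `split_into_blocks` is a hypothetical helper for illustration, not part of any HDFS API.

```python
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # assumed block size (Hadoop 2+ default); illustrative only


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs for each block of a file.

    Hypothetical helper: the last block may be shorter than block_size,
    since HDFS does not pad the final block of a file.
    """
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


# A 300 MB file becomes two full 128 MB blocks and one 44 MB block.
blocks = split_into_blocks(300 * MB)
print([length // MB for _, length in blocks])  # [128, 128, 44]
```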

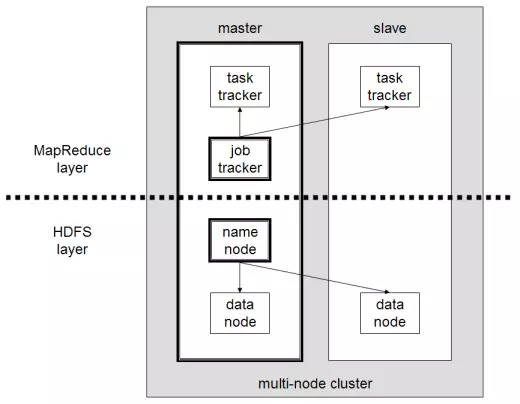

The Hadoop cluster generally follows the master/slave model. The framework’s architecture consists of a master node to which numerous subordinate ‘slave’ nodes are assigned. The same principle is found in the HDFS structure, which is based on a NameNode and various subordinate DataNodes. The NameNode manages all the metadata for the file system, its directory structures and files. The actual data is stored on the subordinate DataNodes. To minimise data loss, files are split into individual blocks and saved multiple times on different nodes. In the standard configuration, each data block is stored in triplicate.
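The triplicate storage can be illustrated with a simplified placement sketch. It assumes a replication factor of 3 (the `dfs.replication` default) and uses plain round-robin assignment to distinct nodes; real HDFS uses a rack-aware placement policy instead, and the function, block and node names here are hypothetical.

```python
REPLICATION = 3  # assumed default replication factor


def place_replicas(block_ids, datanodes, replication=REPLICATION):
    """Assign each block to `replication` distinct DataNodes, round-robin.

    Simplification: real HDFS uses a rack-aware placement policy instead.
    """
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the replication factor")
    placement = {}
    for i, block in enumerate(block_ids):
        # Start at a different node for each block so the load is spread out;
        # k < replication <= len(datanodes) guarantees distinct nodes per block.
        placement[block] = [datanodes[(i + k) % len(datanodes)] for k in range(replication)]
    return placement


nodes = ["dn1", "dn2", "dn3", "dn4"]
layout = place_replicas(["blk_1", "blk_2"], nodes)
print(layout)  # {'blk_1': ['dn1', 'dn2', 'dn3'], 'blk_2': ['dn2', 'dn3', 'dn4']}
```

With three replicas, a 300 MB file occupies roughly 900 MB of raw disk space across the cluster, which is the price paid for tolerating the loss of individual nodes.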

Every DataNode sends the NameNode a sign of life, known as a ‘heartbeat’, at regular intervals. If this signal fails to arrive, the NameNode declares the corresponding slave ‘dead’ and uses the remaining copies to ensure that enough replicas of each affected data block are available in the cluster. The NameNode occupies a central role within the framework. To reduce the risk of it becoming a ‘single point of failure’, it’s common practice to pair this master node with a SecondaryNameNode. This component periodically merges the logged metadata changes into a checkpoint, making it possible to restore the NameNode’s centrally managed state. It is not a hot standby, however, and does not take over automatically if the NameNode fails.
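The heartbeat logic can be sketched as two steps: detect DataNodes that have gone silent, then plan new copies for blocks that have lost a replica. The timeout below is an arbitrary illustrative value rather than the HDFS default, and both functions are hypothetical simplifications of what the NameNode does.

```python
HEARTBEAT_TIMEOUT = 30.0  # seconds; illustrative value, not the HDFS default


def find_dead_nodes(last_heartbeat, now, timeout=HEARTBEAT_TIMEOUT):
    """Return DataNodes whose last heartbeat is older than `timeout` seconds."""
    return {node for node, ts in last_heartbeat.items() if now - ts > timeout}


def plan_rereplication(placement, dead, live_nodes, replication=3):
    """For each under-replicated block, choose live nodes to receive a new copy.

    Returns {block: [target nodes]}. A surviving replica acts as the copy source;
    if no replica survives, the block cannot be restored and is skipped here.
    """
    plan = {}
    for block, nodes in placement.items():
        survivors = [n for n in nodes if n not in dead]
        missing = replication - len(survivors)
        if missing > 0 and survivors:
            candidates = [n for n in live_nodes if n not in survivors]
            plan[block] = candidates[:missing]
    return plan


last_heartbeat = {"dn1": 100.0, "dn2": 95.0, "dn3": 40.0, "dn4": 99.0}
dead = find_dead_nodes(last_heartbeat, now=100.0)
print(dead)  # {'dn3'}
live = [n for n in last_heartbeat if n not in dead]
placement = {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn2", "dn3", "dn4"]}
print(plan_rereplication(placement, dead, live))  # {'blk_1': ['dn4'], 'blk_2': ['dn1']}
```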

During the transition from Hadoop 1 to Hadoop 2, HDFS gained further safeguards: NameNode HA (high availability) adds a failsafe mechanism that automatically activates a standby NameNode whenever the active NameNode crashes. In addition, a snapshot function makes it possible to restore the file system to an earlier state. The Federation extension also allows multiple NameNodes to be operated within one cluster.

MapReduce engine

Originally developed by Google, the MapReduce algorithm, implemented as an autonomous engine in Hadoop version 1, is another main component of Core Hadoop. This engine is primarily responsible for managing resources as well as controlling and monitoring computing processes (job scheduling/monitoring). Data processing relies largely on the ‘map’ and ‘reduce’ phases, which make it possible to process data directly where it is stored (data locality). This reduces computing time and network traffic. In the map phase, complex computing processes (jobs) are split into individual parts, which a so-called JobTracker (located on the master node) distributes to numerous slave systems in the cluster. There, TaskTrackers ensure that the sub-processes are processed in parallel. In the subsequent reduce phase, the MapReduce engine collects the interim results and compiles them into one single overall result.
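The two phases can be simulated in a single Python process with the classic word-count example. This is a sketch of the programming model only: in Hadoop itself, such jobs are typically written in Java against the framework’s `Mapper` and `Reducer` classes, and the map tasks run on different slave nodes rather than in one loop. All function names here are hypothetical.

```python
from collections import defaultdict


def map_phase(split):
    """Map: emit (word, 1) for every word in one input split."""
    for line in split:
        for word in line.lower().split():
            yield word, 1


def shuffle(mapped_pairs):
    """Group interim values by key (Hadoop does this between the two phases)."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all interim values for one key into a final result."""
    return key, sum(values)


def run_job(splits):
    # In Hadoop, each split would be processed by a separate map task.
    intermediate = [pair for split in splits for pair in map_phase(split)]
    groups = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in sorted(groups.items()))


splits = [["big data big clusters"], ["data nodes store data"]]
print(run_job(splits))
# {'big': 2, 'clusters': 1, 'data': 3, 'nodes': 1, 'store': 1}
```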

While master nodes generally host the NameNode and JobTracker components, each subordinate slave runs a DataNode and a TaskTracker. The following graphic shows the basic structure of a Hadoop architecture (according to version 1), split into a MapReduce layer and an HDFS layer.

With the release of Hadoop 2, the MapReduce engine was fundamentally overhauled. The result is the cluster management system YARN/MapReduce 2.0, which decouples resource management and job scheduling/monitoring from MapReduce and so opens the framework to new processing models and a wide range of Big Data applications.

YARN/MapReduce 2.0

With the introduction of the YARN module (‘Yet Another Resource Negotiator’) in version 2, Hadoop’s architecture underwent a fundamental change that marks the transition from Hadoop 1 to Hadoop 2. While Hadoop 1 only offers MapReduce as an application, YARN decouples resource and task management from the data processing model, which allows a wide variety of Big Data applications to be integrated into the framework. Consequently, under Hadoop 2, MapReduce is just one of many possible applications for accessing data within the framework. The framework is thus more than a simple MapReduce runtime environment; YARN takes on the role of a distributed operating system that manages resources for Big Data applications.

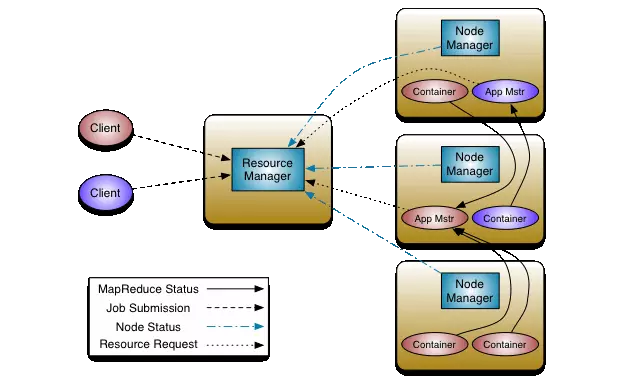

The fundamental changes to the Hadoop architecture primarily affect the two trackers of the MapReduce engine, which no longer exist as autonomous components in Hadoop 2. Instead, the YARN module relies on three new entities: the ResourceManager, the NodeManager, and the ApplicationMaster.

- ResourceManager: the global ResourceManager acts as the highest authority in the Hadoop architecture (master), to which various NodeManagers are assigned as ‘slaves’. Its responsibilities include controlling the computer cluster, orchestrating the distribution of resources to the subordinate NodeManagers, and distributing applications. The ResourceManager knows where the individual slave systems within the cluster are located and which resources they can provide. One particularly important component of the ResourceManager is the ResourceScheduler, which decides how the available resources in the cluster are distributed.

- NodeManager: a NodeManager runs on each of the computer cluster’s nodes. It takes the slave’s position in Hadoop 2’s infrastructure and thus acts as the ResourceManager’s command recipient. When a NodeManager starts on a node in the cluster, it registers with the ResourceManager and sends a ‘sign of life’, the heartbeat, at regular intervals. Each NodeManager is responsible for the resources of its own node and provides a portion of them to the cluster. How these resources are used is decided by the ResourceManager’s ResourceScheduler.

- ApplicationMaster: each application running on YARN has its own ApplicationMaster, which requests resources from the ResourceManager and is allocated them in the form of containers. The Big Data application’s tasks are executed and monitored in these containers under the ApplicationMaster’s control.
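The interplay between NodeManagers and the ResourceScheduler can be sketched as a toy allocator. The assumptions are loud ones: each NodeManager reports only free memory and vcores, and the scheduler simply picks the node with the most free memory; YARN’s real schedulers (such as the CapacityScheduler or FairScheduler) are considerably more elaborate. The classes below are hypothetical stand-ins, not YARN’s Java API.

```python
from dataclasses import dataclass, field


@dataclass
class NodeManager:
    """Hypothetical stand-in: a node and the resources it offers the cluster."""
    name: str
    free_mb: int
    free_vcores: int


@dataclass
class Container:
    """A slice of one node's resources granted to an application."""
    node: str
    mb: int
    vcores: int


@dataclass
class ResourceManager:
    nodes: dict = field(default_factory=dict)

    def register(self, nm):
        """A NodeManager registers and reports the resources it offers."""
        self.nodes[nm.name] = nm

    def allocate(self, mb, vcores):
        """Toy scheduler: pick the node with the most free memory that fits."""
        fitting = [n for n in self.nodes.values()
                   if n.free_mb >= mb and n.free_vcores >= vcores]
        if not fitting:
            return None  # the request has to wait until resources are freed
        node = max(fitting, key=lambda n: n.free_mb)
        node.free_mb -= mb
        node.free_vcores -= vcores
        return Container(node.name, mb, vcores)


rm = ResourceManager()
rm.register(NodeManager("nm1", free_mb=8192, free_vcores=4))
rm.register(NodeManager("nm2", free_mb=5120, free_vcores=4))
# An ApplicationMaster asks for three 2 GB containers.
print([rm.allocate(2048, 1).node for _ in range(3)])  # ['nm1', 'nm1', 'nm2']
```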

Here’s a schematic depiction of the Hadoop 2 architecture:

When a Big Data application is executed on Hadoop 2, three actors are generally involved:

- A client

- A ResourceManager

- One or more NodeManagers

First, the client submits an order, or job, to the ResourceManager, which is to be started by a Big Data application in the cluster. The ResourceManager then allocates a container; in other words, it reserves cluster resources for the application and contacts a NodeManager. The contacted NodeManager starts the container and runs an ApplicationMaster within it. The ApplicationMaster is then responsible for executing and monitoring the application.
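The submission sequence can be traced with a short simulation that records each step in order. It assumes a hypothetical model in which NodeManagers are represented only by their free memory in MB and the ApplicationMaster’s container needs 1024 MB; none of this reflects YARN’s actual client API.

```python
def submit_application(app_name, node_managers, am_mb=1024):
    """Simulate the three-actor flow: client -> ResourceManager -> NodeManager.

    node_managers maps hypothetical NodeManager names to free memory in MB.
    Returns a log of the steps in the order they happen.
    """
    log = [f"client: submit {app_name} to ResourceManager"]
    # The ResourceManager reserves resources for the ApplicationMaster's container.
    candidates = [nm for nm, free in node_managers.items() if free >= am_mb]
    if not candidates:
        log.append("ResourceManager: no capacity, application queued")
        return log
    chosen = max(candidates, key=node_managers.get)
    node_managers[chosen] -= am_mb
    log.append(f"ResourceManager: allocate {am_mb} MB container on {chosen}")
    # The contacted NodeManager starts the container and the ApplicationMaster in it.
    log.append(f"{chosen}: start container, launch ApplicationMaster for {app_name}")
    # From here on, the ApplicationMaster drives and monitors the application.
    log.append(f"ApplicationMaster: request further containers and monitor {app_name}")
    return log


cluster = {"nm1": 2048, "nm2": 4096}
for step in submit_application("wordcount", cluster):
    print(step)
```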

The Hadoop ecosystem: optional expansion components

In addition to the system’s core components, the Hadoop ecosystem encompasses a wide range of extensions, developed as separate software projects, that contribute substantially to the functionality and flexibility of the software framework. Thanks to the open source code and numerous interfaces, these optional add-on components can be freely combined with the system’s core functions. The following list shows the most popular projects in the Hadoop ecosystem:

- Ambari: the Apache project Ambari was initiated by the Hadoop distributor Hortonworks and adds installation and management tools to the ecosystem that simplify provisioning IT resources as well as managing and monitoring Hadoop components. To this end, Apache Ambari offers a step-by-step wizard for installing Hadoop services on any number of computers within the cluster, as well as a management function with which services can be centrally started, stopped or configured. A graphical user interface informs users about the status of the system. What’s more, the Ambari Metrics System and the Ambari Alert Framework enable metrics to be recorded and alert thresholds to be configured.

- Avro: Apache Avro is a system for serialising data. Avro uses JSON to define data types and protocols, while the actual data is serialised in a compact binary format. This serves as a data transfer format for communication between the different Hadoop nodes as well as between Hadoop services and client programs.

- Cassandra: written in Java, Apache Cassandra is a distributed database management system for large amounts of structured data that follows a non-relational approach. Databases of this kind are also referred to as NoSQL databases. Originally developed by Facebook, the open source system aims to achieve high scalability and reliability in large, distributed architectures. Data is stored on the basis of key-value relations.

- HBase: HBase is also an open source NoSQL database; it enables real-time read and write access to large amounts of data within a computer cluster. HBase is modelled on Google’s high-performance database system, Bigtable. Compared with other NoSQL databases, HBase is characterised by high data consistency.

- Chukwa: Chukwa is a data acquisition and analysis system that builds on HDFS and MapReduce from the Hadoop Big Data framework; it allows real-time monitoring as well as data analyses in large, distributed systems. To achieve this, Chukwa uses agents that run on every monitored node and collect the log files of the applications running there. These files are sent to so-called collectors and then saved in HDFS.

- Flume: Apache Flume is another service created for collecting, aggregating and moving log data. To stream data from different sources into HDFS for storage and analysis, Flume supports transport formats such as Apache Thrift and Avro.

- Pig: Apache Pig is a platform for analysing large amounts of data that makes the high-level programming language Pig Latin available to Hadoop users. Pig makes it possible to describe the flow of MapReduce jobs at an abstract level. MapReduce requests are then no longer written in Java but in the considerably more concise Pig Latin, which makes managing MapReduce jobs much simpler. For example, the language makes the parallel execution of complex analyses easier to understand. Pig Latin was originally developed by Yahoo. The name reflects the software’s approach: like an ‘omnivore’, Pig is designed to process all types of data (structured, unstructured or relational).

- Hive: Apache Hive adds a data warehouse to Hadoop, that is, a centralised database used for different types of analyses. The software was developed by Facebook and is based on the MapReduce framework. Hive provides HiveQL, an SQL-like query language that makes it possible to query, compile or analyse data stored in HDFS. To this end, Hive automatically translates SQL-like queries into MapReduce jobs.

- HCatalog: a core component of Apache Hive is HCatalog, a metadata and table management system that makes it possible to store and process data independently of its format or structure. To do this, HCatalog describes the data’s structure and so makes the data easier to use through Hive or Pig.

- Mahout: Apache Mahout adds easily extensible Java libraries for data mining and mathematical machine learning applications to the Hadoop ecosystem. Algorithms implemented with Mahout in Hadoop enable operations such as classification, clustering and collaborative filtering. Mahout can be used, for instance, to develop recommendation services (customers who bought this item also bought…).

- Oozie: the optional workflow component Oozie makes it possible to create process chains, automate them and have them executed on a time-controlled basis. In this way, Oozie compensates for deficits in Hadoop 1’s MapReduce engine.

- Sqoop: Apache Sqoop is a software component that facilitates the import and export of large data quantities between the Hadoop Big Data framework and structured data storage. Nowadays, company data is generally stored within relational databases. Sqoop makes it possible to efficiently exchange data between these storage systems and the computer cluster.

- ZooKeeper: Apache ZooKeeper offers services for coordinating processes in the cluster. This is done by providing functions for storing, distributing, and updating configuration information.
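Avro’s split between a JSON schema and compact binary data can be shown in a few lines. This sketch follows the encoding rules of the Avro specification for two types only, `long` (zig-zag encoded, then written as a variable-length integer) and `string` (a length followed by UTF-8 bytes); the schema and record are hypothetical, and a real project would use an Avro library instead.

```python
import json

# Schema defined in JSON, as Avro does it (record and field names are hypothetical).
SCHEMA = json.loads("""
{"type": "record", "name": "LogEntry",
 "fields": [{"name": "id", "type": "long"},
            {"name": "host", "type": "string"}]}
""")


def zigzag(n):
    """Map signed ints to unsigned so small magnitudes stay small: 0,-1,1,-2 -> 0,1,2,3."""
    return 2 * n if n >= 0 else -2 * n - 1


def encode_long(n):
    """Avro long: zig-zag value written as a varint, 7 bits per byte, low bits first."""
    z = zigzag(n)
    out = bytearray()
    while True:
        byte = z & 0x7F
        z >>= 7
        if z:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string(s):
    """Avro string: byte length encoded as a long, followed by the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


def encode_record(schema, record):
    """Record fields are written back to back in schema order, without field names."""
    encoders = {"long": encode_long, "string": encode_string}
    return b"".join(encoders[f["type"]](record[f["name"]]) for f in schema["fields"])


print(encode_record(SCHEMA, {"id": 64, "host": "hdfs"}).hex())  # 80010868646673
```

Because the field names live only in the schema, the encoded record needs just seven bytes here; a reader must know the same schema to decode it.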
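Hive’s translation of SQL-like queries into MapReduce jobs can be illustrated conceptually: a `GROUP BY` aggregation turns into a map phase that emits key-value pairs and a reduce phase that aggregates each key. This is a simplified sketch, not Hive’s actual query compiler, and the table and column names are hypothetical.

```python
from collections import defaultdict

# Conceptually, a HiveQL query such as
#   SELECT page, SUM(views) FROM page_views GROUP BY page;
# becomes a map phase emitting (page, views) and a reduce phase summing per key.
# The table and column names are hypothetical.


def map_row(row):
    """Map: turn one table row into a (group key, value) pair."""
    page, views = row
    return page, views


def reduce_group(page, views):
    """Reduce: apply the aggregate function (SUM) to all values of one key."""
    return page, sum(views)


def group_by_sum(rows):
    shuffled = defaultdict(list)  # stands in for Hadoop's shuffle/sort step
    for key, value in map(map_row, rows):
        shuffled[key].append(value)
    return sorted(reduce_group(k, v) for k, v in shuffled.items())


rows = [("/home", 3), ("/docs", 5), ("/home", 4)]
print(group_by_sum(rows))  # [('/docs', 5), ('/home', 7)]
```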
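The ‘customers who bought this item also bought…’ idea behind Mahout-style recommendation services can be sketched with simple item co-occurrence counting. This is a deliberately basic technique chosen for illustration, not Mahout’s API or one of its specific algorithms, and the shopping baskets are made-up sample data.

```python
from collections import Counter
from itertools import permutations


def co_occurrence(baskets):
    """Count how often each ordered pair of items appears in the same basket."""
    counts = Counter()
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            counts[(a, b)] += 1
    return counts


def also_bought(item, counts, top_n=2):
    """Items most often bought together with `item`; ties broken alphabetically."""
    related = [(b, n) for (a, b), n in counts.items() if a == item]
    related.sort(key=lambda pair: (-pair[1], pair[0]))
    return [b for b, _ in related[:top_n]]


baskets = [
    ["tent", "stove", "lamp"],
    ["tent", "stove"],
    ["tent", "lamp"],
    ["tent", "stove", "mat"],
]
counts = co_occurrence(baskets)
print(also_bought("tent", counts))  # ['stove', 'lamp']
```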
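An Oozie workflow is essentially a directed acyclic graph of actions, in which an action may only start once its predecessors have finished. Oozie itself defines workflows in XML; the sketch below instead uses a plain Python dictionary with hypothetical action names and the standard library’s `graphlib.TopologicalSorter` (Python 3.9 or later) to derive stages of actions that could run in parallel.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical workflow: each action maps to the actions it depends on.
workflow = {
    "clean": {"import"},
    "aggregate": {"clean"},
    "index": {"clean"},
    "report": {"aggregate", "index"},
}


def execution_stages(dag):
    """Group actions into stages; actions within one stage do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError (a ValueError) if the workflow has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the stage finished, unlocking its successors
    return stages


print(execution_stages(workflow))
# [['import'], ['clean'], ['aggregate', 'index'], ['report']]
```

Grouping by stage rather than producing a single linear order shows which process steps, here `aggregate` and `index`, have no dependency on each other.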

Hadoop for businesses

Given that Hadoop clusters for processing large data volumes can be set up with standard PCs, the Big Data framework has become a popular solution for numerous companies, including Adobe, AOL, eBay, Facebook, Google, IBM, LinkedIn, Twitter and Yahoo. Besides making it easy to store data and process it in parallel on a distributed architecture, Hadoop offers further advantages as open source software: stability, expandability and an extensive range of functions.