Self-learning systems: how does machine learning work?

Artificial intelligence is an important part of the digitalisation process, which is having a lasting effect on our society. What belonged to the realm of science fiction only a few years ago has now become reality: we talk to computers, we use smartphones to show us the fastest way to the nearest gas station, and our watches know whether we’ve taken enough steps in the day. Technology is becoming more and more intelligent as scientists, engineers, and programmers teach computers to learn independently.

Machine learning is not only of interest to science and to IT companies such as Google or Microsoft: the development of artificial intelligence can also transform the world of online marketing. In the following text, we explain how artificial intelligence (AI) has developed over the past few years, what exactly is meant by machine learning, which machine learning methods exist, and why marketers should rely on self-learning systems.

History of the self-learning system

Robots and machines have fascinated people for centuries. Authors of the Romantic era were already exploring the idea of artificial intelligence, and robots in books, films, and computer games still fascinate us today. The relationship between humans and thinking machines has always wavered between fear and fascination. Actual efforts towards machine learning, however, did not begin until the 1950s, when computers were still in their infancy and artificial intelligence was only a dream. In the two centuries before that, theoreticians such as Thomas Bayes, Adrien-Marie Legendre, and Pierre-Simon Laplace had already laid the foundations for later research, but it was only with the work of Alan Turing that the idea of adaptive machines became concrete.

“In such a case one would have to admit that the progress of the machine had not been foreseen when its original instructions were put in. It would be like a pupil who had learnt much from his master, but had added much more by his own work. When this happens I feel that one is obliged to regard the machine as showing intelligence.”

Alan Turing in a lecture in 1947. (Quoted in B. E. Carpenter and R. W. Doran (eds.), A. M. Turing's ACE Report of 1946 and Other Papers)

In 1950, Turing proposed the Turing Test, a kind of game in which a computer pretends to be human. If the test subject doesn’t realise that they aren’t speaking to an actual person, the machine has passed the test. The computers of the time were far from capable of this, but just two years later Arthur Samuel wrote a program that could play draughts, and it got better with every game: the program had the ability to learn. Then, in 1957, Frank Rosenblatt developed the Perceptron, one of the first artificial neural networks.

From then on, scientists entrusted their computers with increasingly complex thinking tasks, which the machines mastered with varying degrees of success. Today, large companies are among the driving forces behind the development of machine learning: IBM developed Watson, a computer with an immense knowledge repository that can answer questions posed in natural language. Watson competed on the well-known TV quiz show 'Jeopardy!' and ended up winning. (The event was reminiscent of the 1997 chess match between world champion Garry Kasparov and another IBM computer, Deep Blue, which the machine also won.)

Google and Facebook use machine learning to better understand their users and offer them more features. Facebook’s DeepFace can identify faces in images with 97% accuracy. With its Google Brain project, the search engine giant has significantly improved speech recognition in the Android operating system, as well as photo search on Google+ and video recommendations on YouTube.

What is machine learning?

Conventionally, machines, computers, and programs only do what they have been programmed to do: 'When case A occurs, do B'. However, our expectations of modern computer systems keep growing, and programmers cannot anticipate every conceivable case and prepare their software for it. The software therefore needs to make independent decisions and react appropriately to unfamiliar situations. This requires algorithms that enable the program to learn: they are first fed with data, from which they learn associations that they can later apply to new cases.

In connection with self-learning systems, related terms often emerge that you need to be familiar with to have a better understanding of machine learning.

Artificial intelligence

The research surrounding artificial intelligence (AI) aims to create machines that can act like humans: computers and robots are supposed to analyse their environment in order to make the best possible decision. They should behave as intelligently as possible, according to our standards. This in itself is a problem, since we aren’t certain which criteria to use to judge intelligence. At present, AI can’t simulate a complete human being (including emotional intelligence). Instead, individual aspects are isolated in order to cope with specific tasks. This is commonly referred to as 'weak artificial intelligence'.

Neural networks

One branch of artificial intelligence research, neuroinformatics, aims to design computers modelled as closely as possible on the brain. It treats the nervous system in the abstract, i.e. stripped of its biological properties and reduced to its mode of operation. Artificial neural networks are therefore primarily abstract mathematical methods rather than physical structures. They consist of a network of neurons (mathematical functions or algorithms) that, like a human brain, can cope with complex tasks. The connections between the neurons vary in strength and can adapt to the problem at hand.
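To make the idea of adjustable connection strengths more concrete, here is a minimal Python sketch of a single artificial neuron trained with the classic perceptron learning rule, the principle behind Rosenblatt’s Perceptron. The function names, the learning rate, and the toy task (learning the logical AND of two inputs) are illustrative assumptions, not taken from any particular system. The neuron forms a weighted sum of its inputs, with the bias playing the role of a threshold, and it nudges its weights whenever its output is wrong.

```python
def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias; the neuron 'fires' (1) if the sum is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: adjust weights and bias only when the output is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - neuron_output(weights, bias, inputs)  # -1, 0 or +1
            if error:
                mistakes += 1
                # Strengthen or weaken each connection in proportion to its input
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


# Toy training data (hypothetical): the logical AND of two binary inputs
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", neuron_output(weights, bias, inputs), "expected", target)
```

After training, the neuron outputs 1 only for the input (1, 1). A single neuron like this can only separate two classes with a straight line; harder tasks require networks of many interconnected neurons.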

Big Data

The term 'Big Data' simply means that a huge amount of data is involved. However, there is no defined point where data becomes big data. The fact that this phenomenon has attracted increasing media attention in recent years is due to where this data comes from: in many cases, the flood of information is created from user data (interests, movement profiles, vital data), collected by companies such as Google, Amazon, and Facebook in order to tailor offers more precisely to customers. Data volumes like these can no longer be satisfactorily evaluated by traditional computer systems: conventional software only finds what the user is looking for. For this reason, self-learning systems are required that can uncover previously unknown connections.

Data mining

Data mining is the name given to the analysis of Big Data. Collected data alone is of little use: it only becomes interesting once relevant characteristics are extracted and evaluated, similar to gold mining. The two fields overlap, but a common distinction is that data mining focuses on discovering previously unknown patterns in data, whereas machine learning focuses on learning from data so that recognised patterns can be applied to new cases.
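As a small illustration of extracting relevant characteristics from collected data, the following Python sketch counts which products are frequently bought together. This is the support-counting step used in association-rule mining. The shopping baskets and the `min_support` cutoff are made up for the example, and real data mining tools work on far larger data sets with more efficient algorithms.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(baskets, min_support):
    """Return item pairs that occur together in at least `min_support` baskets."""
    counts = Counter()
    for basket in baskets:
        # sorted(set(...)) ensures each pair is counted once per basket, in a fixed order
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    return {pair: n for pair, n in counts.items() if n >= min_support}


# Hypothetical purchase data
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "coffee"],
    ["bread", "butter", "coffee"],
]
print(frequent_pairs(baskets, min_support=2))
```

Here, only bread and butter appear together often enough (in three of the four baskets) to count as a pattern.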

Different machine learning methods

Developers generally distinguish between supervised learning and unsupervised learning, with various intermediate forms in between. The algorithms used differ widely. In supervised learning, the system is given examples for which the developers specify the correct answer, for example whether an item belongs in category A or B. From these, the self-learning system draws conclusions and recognises patterns, so that it can then handle unknown data better. The aim is to keep the error rate as low as possible.
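The following minimal Python sketch shows supervised learning in its simplest form. It assumes a single numeric feature and two categories, A and B. It learns a so-called decision stump: from labelled examples, it picks the threshold that gives the lowest error rate on the training data, then uses that threshold to classify new values. The data and function names are hypothetical.

```python
def error_rate(threshold, examples):
    """Share of examples misclassified by the rule 'value >= threshold -> B, else A'."""
    wrong = sum(1 for value, label in examples
                if ("B" if value >= threshold else "A") != label)
    return wrong / len(examples)


def learn_threshold(examples):
    """Try each observed value as a threshold and keep the one with the lowest error rate."""
    candidates = sorted({value for value, _ in examples})
    return min(candidates, key=lambda t: error_rate(t, examples))


def classify(threshold, value):
    """Apply the learned rule to a new, unlabelled value."""
    return "B" if value >= threshold else "A"


# Hypothetical labelled training data: (measured value, category specified by the developers)
training = [(1.0, "A"), (2.0, "A"), (2.5, "A"), (4.0, "B"), (5.0, "B"), (3.0, "B")]

threshold = learn_threshold(training)
print(threshold, error_rate(threshold, training))
print(classify(threshold, 4.5), classify(threshold, 1.5))
```

With this data, a threshold of 3.0 separates the two categories without any errors, so the unseen values 4.5 and 1.5 are classified as B and A respectively. Real systems use many features and more flexible models, but the principle of fitting a model to reduce errors on labelled examples is the same.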

A well-known example of supervised learning is the spam filter: based on certain features, the system decides whether an e-mail should go to the inbox or be moved to the spam folder. If the system makes a mistake, the user can correct it manually, and the filter adjusts its future calculations accordingly. In this way, the software keeps improving. Filters like these are based on Bayes’ theorem from probability theory and are therefore also called Bayesian filters (or Bayes filters).
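Below is a minimal Python sketch of such a Bayesian filter. It is a simple 'naive Bayes' classifier that treats each word independently and uses add-one smoothing so that unseen words do not produce zero probabilities. The class name, the training messages, and the simple whitespace word splitting are assumptions made for illustration; real spam filters use far richer features. The last lines show how a user’s manual correction is simply fed back as a new training example.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Word-based naive Bayes classifier with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def train(self, text, label):
        """Learn from one labelled message; a user's correction is just another example."""
        self.word_counts[label].update(text.lower().split())
        self.message_counts[label] += 1

    def _log_score(self, words, label, vocabulary_size):
        total_messages = sum(self.message_counts.values())
        # Smoothed prior probability of the class, P(label)
        score = math.log((self.message_counts[label] + 1) / (total_messages + 2))
        total_words = sum(self.word_counts[label].values())
        for word in words:
            # Smoothed likelihood of the word given the class, P(word | label)
            count = self.word_counts[label][word]
            score += math.log((count + 1) / (total_words + vocabulary_size))
        return score

    def classify(self, text):
        """Return the label ('spam' or 'ham') with the higher posterior score."""
        words = text.lower().split()
        vocabulary = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        vocabulary_size = max(1, len(vocabulary))
        return max(("spam", "ham"),
                   key=lambda label: self._log_score(words, label, vocabulary_size))


# Hypothetical training messages
spam_filter = NaiveBayesSpamFilter()
spam_filter.train("win a free prize now", "spam")
spam_filter.train("cheap pills free offer", "spam")
spam_filter.train("meeting agenda for monday", "ham")
spam_filter.train("lunch on monday", "ham")

print(spam_filter.classify("free prize offer"))   # spam
print(spam_filter.classify("agenda for lunch"))   # ham
print(spam_filter.classify("free lunch offer"))   # spam (a mistake, say)
spam_filter.train("free lunch offer", "ham")      # the user corrects the filter
print(spam_filter.classify("free lunch offer"))   # ham
```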

Unlike supervised learning, unsupervised learning doesn’t need a 'teacher' to indicate what belongs where and to give feedback on the system’s decisions. Instead, the program tries to recognise patterns by itself, for example through clustering: an element is selected from the data, examined for its characteristics, and then compared with the elements that have already been examined. If similar elements have already been found, the current element is added to their group. If not, it is stored separately.
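The clustering procedure described above can be sketched in Python as a simple sequential 'leader' clustering. Each element is compared with the first element (the 'leader') of every existing group and joins the first group that is close enough; otherwise, it starts a new group. Measuring similarity as Euclidean distance and the `max_distance` cutoff are assumptions for this example, and many other clustering methods exist.

```python
import math


def leader_clustering(points, max_distance):
    """Add each point to the first group whose leader is close enough, else start a new group."""
    clusters = []  # each cluster is a list; its first element acts as the leader
    for point in points:
        for cluster in clusters:
            if math.dist(point, cluster[0]) <= max_distance:
                cluster.append(point)
                break
        else:
            clusters.append([point])  # no similar group found: store the point separately
    return clusters


# Hypothetical unlabelled data: two dense groups and one outlier
points = [(1.0, 1.0), (1.2, 0.9), (8.0, 8.0), (0.9, 1.1), (8.1, 7.9), (4.0, 15.0)]
for cluster in leader_clustering(points, max_distance=1.0):
    print(cluster)
```

The two groups of nearby points are found without any labels, and the outlying point (4.0, 15.0) ends up in a group of its own. Note that with this simple method the result depends on the order in which the elements are processed.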

Unsupervised learning can be implemented with neural networks, among other methods. Application examples can be found in network security, where a self-learning system detects abnormal behaviour: because a cyber-attack cannot be assigned to any known group of normal activity, the program can identify it as a threat and raise the alarm.
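As a deliberately simple stand-in for a neural network, the Python sketch below illustrates the same idea of anomaly detection. It learns what 'normal' looks like from unlabelled historical measurements (here, hypothetical login attempts per minute) and flags new values that lie more than three standard deviations from the mean. The data, the threshold of 3.0, and the function names are illustrative assumptions; production intrusion detection systems use much more sophisticated models.

```python
import statistics


def fit_baseline(observations):
    """Learn the 'normal' range from unlabelled historical observations."""
    return statistics.mean(observations), statistics.stdev(observations)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values that lie more than `threshold` standard deviations from the mean."""
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # history without any variation: anything different is unusual
    return abs(value - mean) / stdev > threshold


# Hypothetical login attempts per minute observed on a server during normal operation
history = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = fit_baseline(history)

for value in [14, 17, 95]:
    print(value, "anomalous" if is_anomalous(value, baseline) else "normal")
```

Only the spike of 95 attempts is flagged. A value of 17 is higher than usual but still within the learned range.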

In addition to these two main approaches, there are also semi-supervised learning, reinforcement learning, and active learning. These three methods are more closely related to supervised learning and differ in the type and extent of user participation.

A further distinction is made between shallow learning and deep learning. Shallow learning is a relatively simple method whose results are rather superficial, whereas deep learning deals with more complex data sets. These are more complicated because they are made up of natural data, such as the information involved in speech, handwriting, or facial recognition. Natural data is easy for humans to process but not for a machine, because it is difficult to describe mathematically.

Deep learning and artificial neural networks are closely related: deep learning describes a way of training neural networks. It is called 'deep' because the network’s neurons are arranged in several hierarchical layers. The first layer consists of input neurons, which receive the data, begin analysing it, and pass their results on to the neurons in the next layer. At the end, the increasingly refined information reaches the output layer, and the network outputs a value. The layers located between the input and the output are called hidden layers.
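The layer-by-layer flow described above can be sketched in a few lines of Python. The network below has three inputs, two hidden layers (with four and two neurons), and one output neuron. Each neuron computes a weighted sum of the previous layer’s outputs plus a bias and passes it through a sigmoid function. The weights are arbitrary hypothetical values chosen by hand: in a real deep learning system, they would be learned during training, and a library such as PyTorch or TensorFlow would be used instead of plain loops.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One fully connected layer: each neuron weighs all inputs, adds its bias, applies sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
            for neuron_weights, b in zip(weights, biases)]


def network_forward(inputs, layers):
    """Pass data from the input layer through the hidden layers to the output layer."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical hand-picked weights: 3 inputs -> 4 hidden -> 2 hidden -> 1 output
layers = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.0]),
    ([[0.5, -0.3, 0.8, 0.2], [-0.6, 0.4, 0.1, 0.9]], [0.05, -0.05]),
    ([[1.2, -0.7]], [0.0]),
]

output = network_forward([1.0, 0.5, -1.0], layers)
print(output)  # a list with a single value between 0 and 1
```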

Google Image Search can serve as an example of deep learning. When you search for cats, for example, the network behind the search algorithm displays only images of cats. This works because Google’s self-learning system can recognise objects within images. When Google adds a new image to its catalogue, the system’s input neurons process the data (to a computer, an image consists purely of numbers).

As the data passes through the layers, the network filters out the relevant information until it can decide which objects are visible in the image, e.g. a cat. During the training phase, the developers assign a category to each image so that the system can learn. If the machine then delivers incorrect results, such as images of dogs instead of cats, the training process adjusts the individual neurons accordingly. Loosely modelled on the brain, the neurons have different weightings and thresholds that can be adjusted in a self-learning system.

How does machine learning work for marketing?

Machine learning already performs important functions in marketing. At the moment, however, it is primarily large companies such as Google that use these functions internally. Self-learning systems are still so new that they cannot simply be purchased as out-of-the-box solutions. Instead, popular internet providers develop their own systems and are therefore the driving force in this sector. Because some of these systems are open source, and the companies contribute to independent research despite their commercial interests, the field is progressing even faster.

In addition to its creative side, marketing has always had an analytical side: statistics on customer behaviour (purchase behaviour, number of visitors to a website, app usage, etc.) play a major role in deciding which advertising measures to use. The more data you have, the more information can usually be drawn from it, and intelligent programs are needed to process such a large number of features. This is where self-learning systems come into play: computer programs can be trained to recognise patterns and make well-founded predictions, something people find difficult because they tend to be biased when it comes to data. An analyst usually approaches measured data with certain expectations. Such preconceptions are hard to avoid and can distort the results, and the more data an analyst processes, the greater the deviation is likely to be. That said, intelligent machines can be biased too.

Self-learning systems also improve and simplify the way analysis results are presented. Automated data visualisation is a technique in which the computer automatically selects the best way of presenting data and information. This is particularly important so that people can understand what the machine has found out and predicted. With so much data, it becomes difficult to present the results yourself, so it makes sense to let the computer do it.

Machine learning can also influence how content is created; the keyword here is generative design. Instead of designing the same customer journey (i.e. the steps a customer takes to purchase a product or service) for all users, dynamic systems can use machine learning to create individual experiences. The content displayed to the user on a website is still provided by copywriters and designers, but the system assembles the components specifically for each user. Self-learning systems are also starting to design things themselves: with Project Dreamcatcher, components can be designed by a machine.

Machine learning can also be used to improve chatbots. Many companies already use chatbots to handle part of their customer support. In many cases, however, users quickly get annoyed with these automated agents: the capabilities of current chatbots are usually very limited, and their responses are based on manually maintained databases. A chatbot based on a self-learning system with good natural language processing (NLP) could give customers the feeling that they are communicating with a real person, and would therefore pass the Turing test.

Amazon and Netflix have driven another important development in machine learning for marketers: recommendations. A major factor in the success of these providers is predicting what the user wants next. Based on the data collected, their self-learning systems can recommend additional products to the user. What was previously only possible on a large and not very personal scale ('Our customers like product A, which means they will like product B') is now also possible on an individual level thanks to modern programs ('Customer X has liked products A, B, and C, which is why they will probably like product D').

In summary, self-learning systems will influence online marketing in four important ways:

- Quantity: Well-trained machine learning programs can process large amounts of data and make predictions about the future. This way, marketing experts can draw conclusions from the success or failure of campaigns.

- Speed: Analyses take time – if you have to do them by hand. Self-learning systems increase the working speed and allow you to react more quickly to changes.

- Automation: Machine learning makes it easier to automate operations. Since modern systems can adapt independently to new conditions, even complex automation processes become possible.

- Individuality: Computer programs can serve countless customers. Since self-learning systems collect and process data from individual users, they can also provide comprehensive support to each of these customers. Individual recommendations and tailored customer journeys make marketing measures more effective.
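The personalised recommendation idea described earlier ('Customer X has liked products A, B, and C, which is why they will probably like product D') can be sketched as simple user-based collaborative filtering. In this hypothetical Python example, customers are compared using the cosine similarity of the sets of products they liked. Products liked by similar customers are then scored for customer X. The customer data and function names are made up, and the recommender systems of providers such as Amazon or Netflix are considerably more elaborate.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two customers, treating their liked products as binary vectors."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


def recommend(customer, likes, top_n=1):
    """Score products liked by similar customers that `customer` has not liked yet."""
    own = likes[customer]
    scores = {}
    for other, their_likes in likes.items():
        if other == customer:
            continue
        similarity = cosine_similarity(own, their_likes)
        for product in their_likes - own:
            scores[product] = scores.get(product, 0.0) + similarity
    # Highest score first; ties broken alphabetically for a stable result
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [product for product, score in ranked[:top_n] if score > 0]


# Hypothetical 'likes' per customer
likes = {
    "X": {"A", "B", "C"},
    "Y": {"A", "B", "C", "D"},
    "Z": {"A", "C", "D"},
    "W": {"E", "F"},
}
print(recommend("X", likes))  # ['D']
```

Customers Y and Z share most of X’s likes and both also like D, so D is recommended. Customer W has nothing in common with X, so W’s products receive no score.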

Other areas of application for self-learning systems

While machine learning is already increasingly used in marketing, self-learning systems are gaining ground in many other areas of our lives. Sometimes they help to drive progress in science and technology; sometimes they take the form of larger or smaller gadgets designed to simplify our everyday lives. The applications presented in this article are only examples. It can be assumed that machine learning will affect our entire lives in the not-too-distant future.

Science

What applies to marketing is even more important in science. The intelligent processing of Big Data is an enormous relief for empirical scientists. Particle physicists, for example, can use self-learning systems to record and process far more measurement data and thus detect deviations. Machine learning also helps in medicine: some doctors already use artificial intelligence for diagnosis and treatment, and machine learning is also used to predict illnesses such as diabetes and heart attacks.

Robots

Robots are everywhere, especially in factories. They help with mass production, for example, where they are programmed to carry out the same work steps consistently. However, they often have little to do with intelligent systems, since they can only perform the tasks they have been programmed for. If self-learning systems are used in robotics, these machines should also be able to master new tasks. These developments are, of course, interesting for other areas too: robots with artificial intelligence will be used in numerous fields, from space travel to the home.

Traffic

One of machine learning’s big flagship products is the self-driving or autonomous car. The goal is for vehicles to manoeuvre through real traffic without causing accidents. This can only be achieved through machine learning, since it isn’t possible to program every situation that could occur. For this reason, autonomous cars must be able to find their way using self-learning systems. But self-driving cars are not the only part of the transport sector that self-learning systems could revolutionise: intelligent algorithms, e.g. in the form of artificial neural networks, can analyse traffic and help develop more effective traffic management systems such as traffic light controls.

Internet

Machine learning already plays a major role on the internet. Spam filters have already been mentioned: through constant learning, filters for unwanted e-mails keep getting better and are increasingly reliable at keeping spam out of your inbox. The same goes for intelligent defences against viruses and malware, which protect computers from malicious software more effectively. Search engine ranking algorithms also rely on self-learning systems, Google’s RankBrain being a prominent example. Even if the algorithm doesn’t know what to do with a user’s input (because no-one has searched for it before), it can make an educated guess about what might match the request.

Personal assistants

Computer systems that are able to keep learning also play an important role in the home, turning ordinary homes into smart homes. For example, Moley Robotics is developing an intelligent kitchen that uses mechanical arms to prepare meals. Personal assistants such as Google Home and Amazon Echo (see [Google Home vs. Amazon Echo: a comparison](https://www.ionos.de/digitalguide/online-marketing/web-analyse/google-home-vs-amazon-echo-ein-vergleich/)) also use machine learning technologies to better understand their users. And many people now carry their assistants with them at all times: with Siri, Cortana, or Google Assistant, users can send commands and questions to their smartphones by voice.

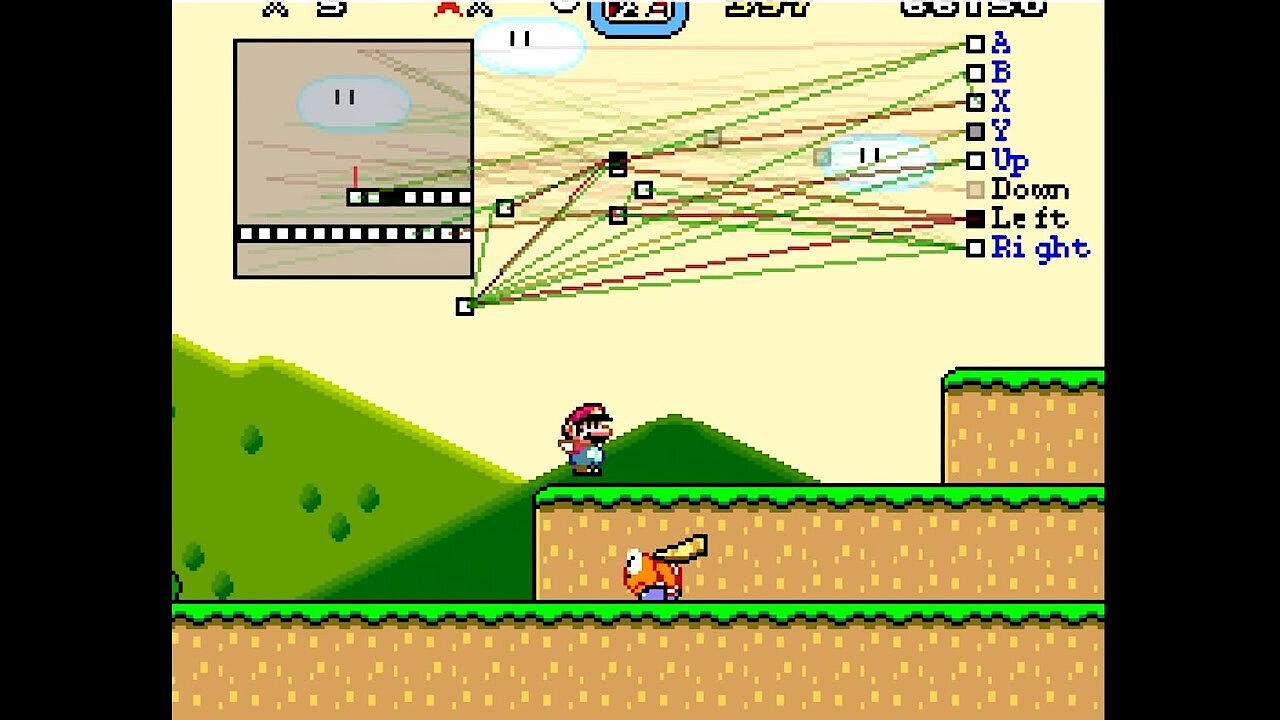

Games

Ever since research on artificial intelligence began, scientists have been strongly motivated by the question of how well machines can play games. Self-learning systems have competed against humans at chess, draughts, and Go, a board game from China that is often considered the most complex board game in the world. Computer game developers also use machine learning to make their games more interesting: it helps them create the most balanced gameplay possible and ensure that computer opponents adapt intelligently to human players’ behaviour.