What is semi-supervised learning?

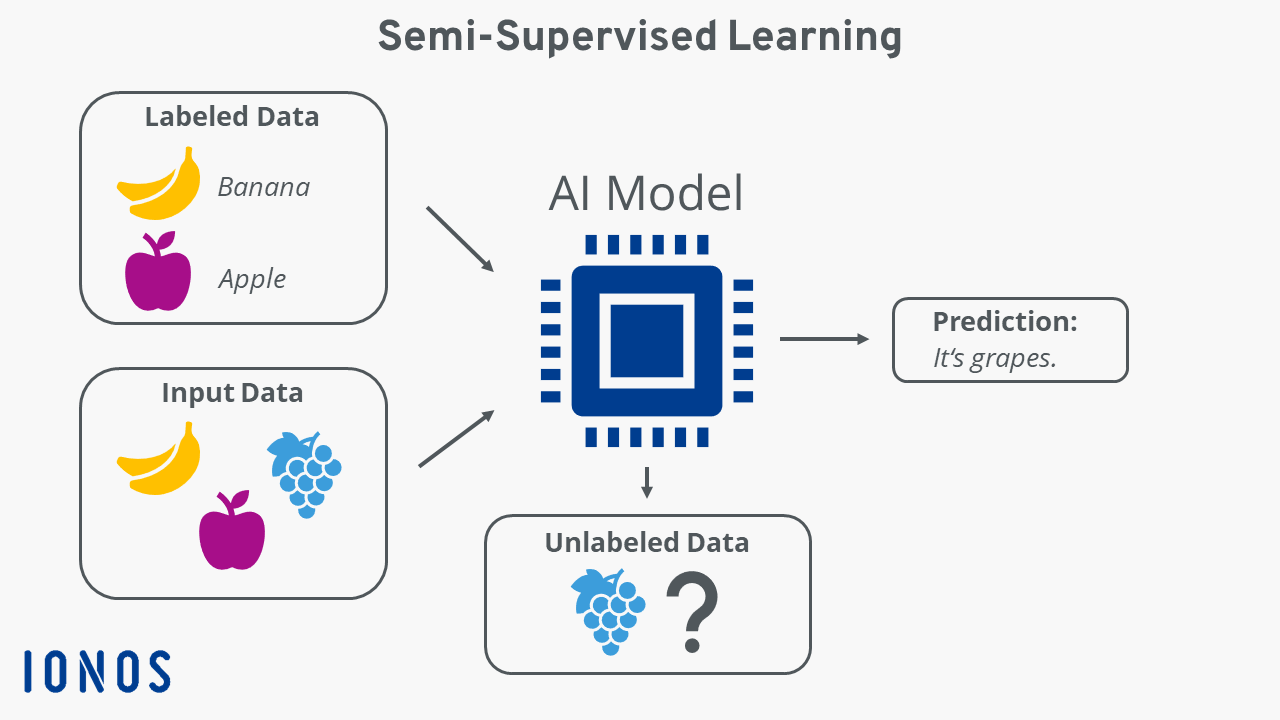

In semi-supervised learning, a model is trained using both labelled and unlabelled data. The algorithm learns patterns from a small number of labelled data points and uses them to make sense of the unlabelled data, whose target values are unknown. Used well, this approach can produce a more accurate model than training on the labelled data alone, while requiring far less labelling effort than fully supervised learning.

What does semi-supervised learning mean?

Semi-supervised learning is a hybrid approach in machine learning that combines the strengths of supervised and unsupervised learning. With this method, a small amount of labelled data is used together with a much larger amount of unlabelled data to train AI models. The labelled data acts as a guide that helps the algorithm find patterns in the unlabelled data. The result is a model that better captures the structure of the unlabelled data, which can lead to more accurate predictions.

What are the key assumptions of semi-supervised learning?

Algorithms designed for semi-supervised learning operate on a few main assumptions about the data:

- Continuity assumption (also called the smoothness assumption): Data points that are close to each other are likely to have the same output label.

- Cluster assumption: Data tends to fall into distinct clusters, and points within the same cluster usually share the same output label.

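- Manifold assumption: The data lies on or near a manifold, a lower-dimensional surface that locally resembles ordinary Euclidean space, embedded in the higher-dimensional input space. This means that distances and densities measured along the manifold, rather than directly in the input space, can be used to judge which points belong together.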
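To make the continuity and cluster assumptions more concrete, here is a minimal Python sketch of a simplified graph-based label propagation. It assumes one-dimensional toy data, a hypothetical function name `label_propagation` and a k-nearest-neighbour graph with k = 2; it illustrates the idea rather than any particular library's implementation. Labelled points keep their labels, and each unlabelled point repeatedly takes the average label score of its nearest neighbours, so labels spread through dense regions of the data.

```python
# Simplified graph-based label propagation on one-dimensional toy data.
# Each point is linked to its k nearest neighbours; labelled points stay fixed
# and unlabelled points repeatedly average their neighbours' label scores.
# The function name and data are hypothetical, for illustration only.

def label_propagation(points, labels, k=2, iterations=50):
    """points: list of floats; labels: 0, 1 or None (unlabelled) per point."""
    n = len(points)
    # k nearest neighbours of every point, excluding the point itself.
    neighbours = []
    for i in range(n):
        by_distance = sorted((abs(points[i] - points[j]), j) for j in range(n) if j != i)
        neighbours.append([j for _, j in by_distance[:k]])

    # Score = estimated probability of class 1; unlabelled points start undecided.
    scores = [0.5 if y is None else float(y) for y in labels]
    for _ in range(iterations):
        updated = scores[:]
        for i in range(n):
            if labels[i] is None:  # labelled points stay clamped to their labels
                updated[i] = sum(scores[j] for j in neighbours[i]) / len(neighbours[i])
        scores = updated

    # Threshold the scores to get a hard label for every point.
    return [1 if s >= 0.5 else 0 for s in scores]


points = [0.0, 0.3, 0.6, 0.9, 1.2, 10.0, 10.3, 10.6, 10.9, 11.2]
labels = [0, None, None, None, None, None, None, None, None, 1]
print(label_propagation(points, labels))
# [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
```

Because the two groups of points are far apart, no neighbour links cross between them, so each label spreads only within its own cluster, which is the behaviour the cluster assumption predicts. If the assumption does not hold, for example when the classes overlap, propagation like this can spread labels to the wrong points.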

How is it different from supervised and unsupervised learning?

Supervised, unsupervised and semi-supervised learning are all important approaches in machine learning, but each trains AI models in a different way. Here’s a quick breakdown of how semi-supervised learning differs from its traditional counterparts:

- Supervised learning: This approach uses only labelled data, meaning each data point already has a label or solution that the algorithm is trying to predict. Supervised learning can be highly accurate but requires large amounts of labelled data, which can be costly and time-consuming to gather.

- Unsupervised learning: This approach works exclusively with unlabelled data, with the algorithm trying to find patterns or structures without any predefined labels. Unsupervised learning is useful when labelled data isn’t available, but it may not be as precise or accurate because it lacks external reference points.

- Semi-supervised learning: This method combines the two, using a small amount of labelled data to guide the model's understanding of a larger set of unlabelled data. Many semi-supervised techniques adapt a supervised algorithm so that it can also incorporate unlabelled data, which can produce accurate predictions with relatively little labelling effort.
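Many semi-supervised methods start from a supervised algorithm and extend it so that it can also learn from unlabelled data. As a hedged illustration, the Python sketch below takes a simple nearest-centroid classifier and lets unlabelled points refine its class centroids, similar in spirit to seeded k-means. The function names and one-dimensional data are hypothetical.

```python
# Nearest-centroid classifier extended with unlabelled data (seeded k-means style).
# Function names and data are hypothetical, for illustration only.

def centroids_from(points, labels, classes):
    """Mean position of the points assigned to each class."""
    return {
        c: sum(p for p, y in zip(points, labels) if y == c)
        / sum(1 for y in labels if y == c)
        for c in classes
    }


def nearest(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda c: abs(x - centroids[c]))


def supervised_centroids(labelled):
    """Supervised baseline: centroids from labelled (point, label) pairs only."""
    points, labels = zip(*labelled)
    return centroids_from(points, labels, sorted(set(labels)))


def semi_supervised_centroids(labelled, unlabelled, max_iter=20):
    """Start from the supervised centroids, then let unlabelled points refine them.

    Labelled points always keep their true labels; unlabelled points are
    re-assigned to their nearest centroid on every iteration."""
    centroids = supervised_centroids(labelled)
    lab_points, lab_labels = zip(*labelled)
    for _ in range(max_iter):
        guesses = [nearest(centroids, x) for x in unlabelled]
        updated = centroids_from(
            list(lab_points) + list(unlabelled),
            list(lab_labels) + guesses,
            centroids.keys(),
        )
        if updated == centroids:
            break  # assignments have stabilised
        centroids = updated
    return centroids


labelled = [(1.0, 0), (5.0, 1)]
unlabelled = [0.0, 0.5, 1.5, 2.0, 7.0, 7.5, 8.0, 8.5, 9.0]
sup = supervised_centroids(labelled)  # {0: 1.0, 1: 5.0}
semi = semi_supervised_centroids(labelled, unlabelled)  # {0: 1.0, 1: 7.5}
print(nearest(sup, 3.5), nearest(semi, 3.5))  # 1 0
```

Trained on the two labelled points alone, the classifier places its decision boundary at 3.0. Once the unlabelled points pull the class 1 centroid towards their cluster, the boundary moves to 4.25, so the point 3.5 changes class. Whether that change is correct depends on whether the data really follows the cluster assumption.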

To make these differences clearer, let's look at an example. Imagine you are a teacher. With supervised learning, your students' learning would be closely monitored both in class and at home. With unsupervised learning, the students would be entirely self-taught. With semi-supervised learning, you would teach concepts in class, then assign homework for your students to complete independently to reinforce the material.

How does semi-supervised learning work?

Semi-supervised learning typically involves the following steps:

- Define the objective or problem: First, define the goal of the machine learning model, focusing on what improvements machine learning should achieve.

- Data labelling: Next, a small portion of the data is labelled to give the learning algorithm a starting reference. For semi-supervised learning to be effective, the labelled data must be relevant to the task. For example, if you're training an image classifier to distinguish between cats and dogs, labelled images of cars and trains won't help.

- Model training: The labelled data is then used to train the model, showing it what its task is and what outputs are expected.

- Training with unlabelled data: Once trained on the labelled data, the model is given the unlabelled data. In one common approach, self-training, the model predicts labels for the unlabelled points, adds the predictions it is most confident about to the training set as "pseudo-labels" and is retrained on the enlarged set.

- Evaluation and model refinement: To check that the model works correctly, it's evaluated, for example on a held-out set of labelled examples, and adjusted as needed. This iterative process continues until the model reaches the desired level of accuracy.
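The steps above can be sketched with self-training (also called pseudo-labelling), one common way of carrying out the "training with unlabelled data" step but not the only one. In self-training, the model labels the unlabelled points itself, keeps only the predictions it is confident about and retrains on them. The minimal Python sketch below assumes a nearest-centroid classifier, a hypothetical confidence score based on the relative distance to the two class centroids, a confidence threshold of 0.7 and one-dimensional toy data; all function names are illustrative.

```python
# Self-training sketch: train on labelled data, pseudo-label confident
# unlabelled points, retrain, and evaluate on a held-out labelled set.
# The classifier, confidence score, threshold and data are hypothetical choices.

def fit(points, labels):
    """Model training: a nearest-centroid model stores one mean per class."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(model, x):
    """Return (label, confidence) for a two-class model.

    Confidence lies between 0.5 (equidistant) and 1.0 (exactly on a centroid)."""
    (d1, y1), (d2, _) = sorted((abs(x - c), y) for y, c in model.items())[:2]
    confidence = d2 / (d1 + d2) if d1 + d2 > 0 else 0.5
    return y1, confidence


def self_train(labelled, unlabelled, threshold=0.7, max_rounds=10):
    """Training with unlabelled data: add confident pseudo-labels, then retrain."""
    points = [x for x, _ in labelled]
    labels = [y for _, y in labelled]
    pool = list(unlabelled)
    model = fit(points, labels)
    for _ in range(max_rounds):
        confident, remaining = [], []
        for x in pool:
            y, conf = predict(model, x)
            if conf >= threshold:
                confident.append((x, y))
            else:
                remaining.append(x)
        if not confident:
            break  # nothing left that the model is sure about
        points += [x for x, _ in confident]
        labels += [y for _, y in confident]
        pool = remaining
        model = fit(points, labels)
    return model, pool


def accuracy(model, test_set):
    """Evaluation: share of held-out labelled points classified correctly."""
    return sum(predict(model, x)[0] == y for x, y in test_set) / len(test_set)


labelled = [(1.0, 0), (5.0, 1)]
unlabelled = [0.2, 0.6, 1.4, 2.1, 6.6, 7.4, 8.2, 9.4, 3.3]
held_out = [(0.9, 0), (3.6, 0), (4.6, 1), (8.8, 1)]

baseline = fit(*zip(*labelled))
model, leftover = self_train(labelled, unlabelled)
print(accuracy(baseline, held_out), accuracy(model, held_out), leftover)
# 0.75 1.0 [3.3]
```

On this toy data, the self-trained model classifies all four held-out points correctly, against three for the labelled-only baseline, and the ambiguous point 3.3 is never pseudo-labelled. The same loop can also reinforce its own mistakes: a wrong pseudo-label shifts a centroid, which can make further wrong labels more likely, so the confidence threshold and the held-out evaluation both matter.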

What are the benefits of semi-supervised learning?

Semi-supervised learning is especially useful when there's a large amount of unlabelled data and labelling all or most of it would be too expensive or time-consuming. This matters because training AI models often requires a lot of labelled data to provide the necessary context. For a model to accurately distinguish between two objects, like a chair and a table, it might need hundreds or even thousands of labelled images. In fields like genetic sequencing, labelling data also requires specialised expertise.

With semi-supervised learning, it's possible to achieve high accuracy with fewer labelled data points, because the labelled data guides how the model interprets the much larger set of unlabelled data. The labelled data acts as a jumpstart, ideally speeding up learning and improving accuracy. This lets you get the most out of a small set of labelled data while still using a larger pool of unlabelled data, which reduces labelling costs.

Of course, semi-supervised learning has its challenges and limitations. For example, if the initially labelled data contains errors, the model can spread those errors to the unlabelled data, leading to incorrect conclusions and a lower-quality model. The model may also become biased if the labelled and unlabelled data aren't representative of the full range of data it will encounter.

What are popular use cases for semi-supervised learning?

Today, semi-supervised learning is used across a variety of fields, but one of its most common applications remains classification tasks. Below are some popular use cases for this method:

- Web content classification: Search engines like Google can use semi-supervised learning to evaluate how relevant webpages are to specific search queries.

- Text and image classification: This involves sorting texts or images into predefined categories. Semi-supervised learning is well suited to this because there's usually far more unlabelled data than could be labelled within a reasonable time and budget.

- Speech analysis: Labelling audio files is often very time-consuming, so semi-supervised learning is a natural choice here.

- Protein sequence analysis: Protein sequence databases are vast, and determining the function or structure of each sequence experimentally is slow and expensive, so semi-supervised learning is well suited to analysing protein sequences.

- Anomaly detection: Semi-supervised learning can help detect unusual patterns that deviate from an established norm.