Cloaking – SEO tactics against Google policy

In SEO, cloaking describes a server using the same URL to show a search engine crawler one version of a webpage while displaying a different version to human visitors. This black hat SEO tactic is a deliberate, deceptive ploy designed to improve a webpage's ranking position. Google and other search engine providers consider this form of search engine optimisation a violation of their guidelines. Cloaking was not originally intended as a manipulative measure, however.

The origins of cloaking methods

Webpages whose content consists primarily of graphics, videos, or Flash animations tend to rank poorly in search engine results pages. Multimedia content, which is often well received by users, can only be read in a very basic way by text-based search engines. To compensate for this deficit, website operators began cloaking. Instead of showing search engine crawlers the original site, they displayed a text description of the image and video content, in effect creating a pure-text version of the webpage. Search engines could handle content like this very easily and indexed the website accordingly. Unfortunately, this technique carries a high potential for abuse.

Cloaking as a deception tactic

Today, website operators typically use cloaking to present content to a search engine that bears no relation to the content displayed to site visitors. To illustrate how this attempted manipulation works, we'll use the example of a fictitious online casino.

To increase its visibility on the web, the provider of an online casino could decide to show search engine crawlers targeted content about free-to-play board games, even though visitors who land on the website can only take part in pay-to-play gambling. As a result of this false information, the search engine indexes the online casino for free-to-play board games and recommends the casino site as a search result for keywords that don't match its actual content. This annoys misled visitors and reduces the usefulness of the search engine.

To prevent tricks like these, search engine operators crack down hard on cloaking. Market leader Google has its own specialised web spam team to combat this black hat SEO tactic. Website operators considering cloaking should be aware that, if caught, their sites can be removed from the search index entirely as a penalty.

Cloaking techniques

Website operators who rely on black hat SEO usually use one of two methods to deceive search engines.

Agent name delivery

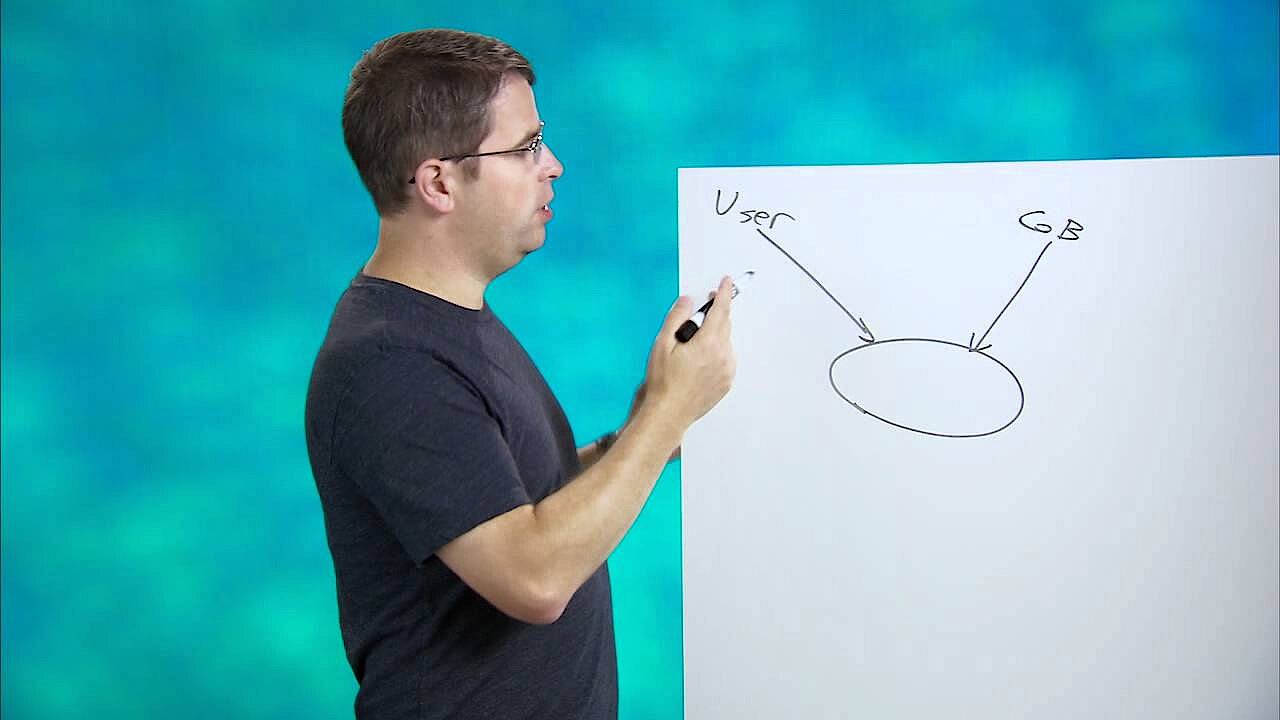

A webpage is typically accessed via a user agent. Examples of user agents are client applications, such as web browsers, and automated programs, such as search engine crawlers. Each identifies itself with a specific ID, known as its agent name, which is sent in the User-Agent header of the HTTP request. By optimising style sheets for each user agent, web servers can tailor how content is displayed in each browser and so improve the usability of a webpage. Used this way, agent name delivery is the basis for a display optimised for the end user. The practice becomes cloaking, however, when website operators build in mechanisms that recognise the agent names of search engine crawlers, such as Googlebot, and respond by supplying different content. To counter agent name delivery tactics, search engine providers sometimes disguise their web crawlers as ordinary browsers.
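To make the distinction concrete, here is a minimal sketch of legitimate agent name delivery, assuming a server that only swaps the style sheet based on the User-Agent header. The file names `mobile.css` and `desktop.css` and the simple "mobile" substring test are hypothetical choices for illustration; real sites usually rely on responsive CSS or more careful device detection.

```python
# Hypothetical style sheets; only the presentation differs between them.
STYLESHEETS = {
    "mobile": "mobile.css",
    "default": "desktop.css",
}


def choose_stylesheet(user_agent: str) -> str:
    """Pick a presentation-only style sheet from the User-Agent header."""
    if "mobile" in user_agent.lower():
        return STYLESHEETS["mobile"]
    return STYLESHEETS["default"]


def render_page(user_agent: str, body_html: str) -> str:
    """Wrap the SAME body in a page that differs only in its style sheet.

    Cloaking would add a branch here that returns a different body when the
    agent name belongs to a crawler; this sketch deliberately does not.
    """
    css = choose_stylesheet(user_agent)
    return (
        "<!DOCTYPE html><html><head>"
        f'<link rel="stylesheet" href="{css}">'
        f"</head><body>{body_html}</body></html>"
    )
```

Calling `render_page` with an iPhone User-Agent and with a desktop browser's User-Agent yields pages that link different style sheets but contain exactly the same body, which is what keeps this on the right side of the line.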

IP delivery

Besides the agent name, there's one other way to categorise website visitors and supply them with tailored content: their IP address. This method is used in geotargeting to offer website visitors different language versions or regional offers. In cloaking, IP delivery means serving customised content to the known IP addresses of a search engine crawler. This black hat tactic only works if the search engine bot always uses the same IP addresses. To prevent this manipulation, most search engine providers now crawl from changing IP addresses.
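The legitimate, geotargeting side of IP delivery can be sketched with Python's standard `ipaddress` module. This is a minimal illustration, assuming a hand-written table of IP ranges: the ranges below are the reserved documentation blocks from RFC 5737, not real country allocations, and the paths `/fr/`, `/en-us/` and `/en/` are hypothetical. A production site would normally consult a geolocation database instead.

```python
import ipaddress

# Hypothetical region table. These are RFC 5737 documentation ranges,
# used here as stand-ins for real country IP allocations.
REGION_VERSIONS = [
    (ipaddress.ip_network("192.0.2.0/24"), "/fr/"),
    (ipaddress.ip_network("198.51.100.0/24"), "/en-us/"),
]

DEFAULT_VERSION = "/en/"


def version_for_ip(client_ip: str) -> str:
    """Return the language version for a visitor's IP address.

    Every client, crawler or human, is looked up in the same table;
    there is no special case for known crawler IPs.
    """
    address = ipaddress.ip_address(client_ip)
    for network, path in REGION_VERSIONS:
        # An IPv6 address is simply "not in" an IPv4 network.
        if address in network:
            return path
    return DEFAULT_VERSION
```

The design choice that matters is in the docstring: a crawler arriving from a French IP range gets the French version, just like a French visitor would. Adding an entry that maps a crawler's own IP range to special content is exactly what would turn this into cloaking.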

In an official video on the subject, Matt Cutts, the former head of Google's web spam team, made clear that neither IP-based geotargeting nor detecting mobile user agents in order to serve a mobile version would be considered cloaking, because these measures are taken to improve user friendliness. Google isn't bothered if a website visitor from France receives slightly different content from an American visitor because the page is optimised for the language associated with the visitor's IP address. What Google is looking for are webpages that specifically treat Googlebot differently from other website visitors.

Tips for website operators

The search engine market leader Google offers webpage operators two main tips to minimise the risk of unintentional cloaking:

- Webpage content should be provided in such a way that human site visitors and search engine crawlers receive the same content

- Any lines of code that check specifically for the agent name or IP address of a web crawler are suspicious and should be avoided
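Both tips can be turned into a rough self-check. The sketch below, using only the Python standard library, fetches a page once with a browser User-Agent and once with a crawler User-Agent and compares the results, and it also scans server-side source code for lines that mention crawler names. The User-Agent strings, the list of crawler names and the helper names (`fetch_as`, `same_content`, `suspicious_lines`, `check_url`) are assumptions for illustration. Treat a mismatch as a prompt to look closer, not as proof of cloaking: timestamps, session tokens or ads can make two fetches differ, and IP-based branches cannot be detected this way because both requests come from your own IP address.

```python
import re
import urllib.request

# Example User-Agent strings; check Google's crawler documentation for
# current values before relying on them.
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/120.0 Safari/537.36")
CRAWLER_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Crawler names whose appearance in server-side code deserves a closer look.
SUSPICIOUS = re.compile(r"googlebot|bingbot|crawler|spider", re.IGNORECASE)


def fetch_as(url: str, user_agent: str) -> str:
    """Fetch a URL while sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def normalise(html: str) -> str:
    """Collapse runs of whitespace so pure formatting differences are ignored."""
    return re.sub(r"\s+", " ", html).strip()


def same_content(html_a: str, html_b: str) -> bool:
    """True if two HTML documents are identical apart from whitespace."""
    return normalise(html_a) == normalise(html_b)


def suspicious_lines(source: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs that mention crawler names."""
    return [
        (number, line.strip())
        for number, line in enumerate(source.splitlines(), start=1)
        if SUSPICIOUS.search(line)
    ]


def check_url(url: str) -> bool:
    """Compare what a browser and a crawler User-Agent receive for one URL."""
    return same_content(fetch_as(url, BROWSER_UA), fetch_as(url, CRAWLER_UA))
```

For example, `check_url("https://www.example.com/")` (with your own URL) returns `False` if the two responses differ, and `suspicious_lines(open("app.py").read())` lists lines in a hypothetical `app.py` that test for crawler names.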

Creating a separate version of a webpage just for bots, to stand in for content that is difficult to crawl, isn't what Google wants. Instead, images, videos, and Flash animations should be given a text description on the page itself, for example in an image's alt attribute.
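One common way to give images a text description that both visitors and crawlers receive is the HTML `alt` attribute. The following minimal sketch, using Python's standard `html.parser`, lists images on a page that lack a non-empty `alt` text. The function name `images_without_alt` is hypothetical, and images with an empty `alt=""` are flagged only for review, since an empty value is appropriate for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> without a non-empty alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <img ... />.
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")  # None if absent or written as bare "alt"
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src") or "(no src)")


def images_without_alt(html: str) -> list[str]:
    """Return the src values of images that have no usable alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

For example, running it over `<img src="a.png" alt="Roulette table"><img src="b.png">` reports only `b.png`, the image that still needs a description.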

If you want to check how Googlebot sees your webpage, you can enter its URL in the URL Inspection tool in Google Search Console.