How to add and optimise a robots.txt file for WordPress

Not every subpage or directory on your website is important enough to be crawled by search engines. Using robots.txt you can determine which WordPress subpages crawlers should take into consideration and which they should not. This way you position your website more effectively in online searches. Read on to find out how robots.txt works in WordPress and how to optimise the file.

What is robots.txt for WordPress?

So-called crawlers search the Internet for websites around the clock. These bots are sent out by search engines to browse as many pages and subpages as possible and index them so that they can be found in searches. For crawlers to read a website properly, they need guidance. Website owners can keep content that is irrelevant to search engines from being crawled and ensure that the crawler only reads content it is supposed to find.

To exclude sections of your website, you can use robots.txt in WordPress and other CMSs. In essence, the file determines which areas of your website crawlers may access and which they may not. Since each domain has a limited ‘crawling budget’, it is all the more important to prioritise your main pages and keep insignificant subpages out of the crawl.

What is robots.txt in WordPress used for?

Adding robots.txt to WordPress defines which content crawlers may read and which they may not. For example, while your website should rank well in searches, the same probably does not apply to your site’s imprint. Comments or archives add no value in a search and may even hurt your rankings, namely when a search engine spots duplicate content. With a robots.txt file in WordPress you can exclude such cases and direct the different crawlers to the pages of your website that should be found.

Automated robots.txt file in WordPress

WordPress automatically creates a robots.txt file, laying some of the groundwork. However, the file is not extensive and should be viewed as a starting point. Its content looks like this:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

The ‘User-agent’ line specifies which crawlers the rules apply to. The ‘*’ means that the rules apply to all crawlers, so every search engine may send its bots to your page. This is generally recommended, since your website will be found more often this way. The ‘Disallow’ command blocks the listed directories for crawlers, in this case the administration area and the directory containing the WordPress core files. robots.txt blocks these for search engines because they’re not relevant for your visitors. Keep in mind that robots.txt only instructs crawlers and does not restrict access; to ensure that these areas are accessible to you only, you should protect them with a secure password.
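The effect of these default rules can be checked locally before anything is changed. The following is a minimal sketch using Python’s standard-library `urllib.robotparser`; the helper name `build_parser` and the sample paths are assumptions chosen for illustration, while the rules are the ones quoted above.

```python
from urllib.robotparser import RobotFileParser

# The default rules quoted above, written without blank lines between the
# directives so that they form a single rule group.
DEFAULT_RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without network access (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(DEFAULT_RULES)

# The sample paths are illustrative only.
SAMPLE_PATHS = (
    "/",
    "/blog/hello-world/",
    "/wp-admin/options.php",
    "/wp-includes/js/app.js",
)

for path in SAMPLE_PATHS:
    verdict = "allowed" if parser.can_fetch("*", path) else "disallowed"
    print(f"{path}: {verdict}")
```

Only the two listed directories are reported as disallowed; every other path stays open to crawlers, which is why the default file is best treated as a starting point.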

As an administrator you can protect your WordPress login using the .htaccess file.

What goes into a robots.txt file in WordPress?

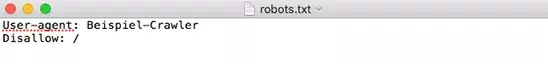

Search engines like Google should be able to find your website. Harmful or shady services like DuggMirror, however, should not; you can exclude them with robots.txt in WordPress. In addition, you should exclude the themes you’ve used, your imprint and other pages that have little or no relevance for indexing. Plugins should also not be indexed, not only because they are not relevant to the public, but for security reasons too. If a plugin has a security vulnerability, attackers could discover your website through it and damage it.

In most cases, the two commands mentioned above will be enough for you to make good use of robots.txt in WordPress. ‘User-agent’ determines which bots are addressed. This way you can define exceptions for certain search engines or set up basic rules for all of them. ‘Disallow’ prohibits crawlers from accessing the corresponding page or subpage. The third command, ‘Allow’, is not relevant in most cases, because access is allowed by default. It is only required when you want to block a page or directory but unblock one of its subpages.
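How the three commands interact can be sketched with Python’s standard-library `urllib.robotparser`. The rule set below is an assumption for illustration: it blocks one bot completely, blocks the plugin directory for everyone else, and re-opens a single subfolder. The folder name `public-widget` and the sample paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: one group for a single unwanted bot, one for all others.
# 'Allow' is listed before the broader 'Disallow' because Python's parser
# applies the first matching rule; crawlers that pick the most specific
# (longest) matching rule reach the same result with this ordering.
RULES = """\
User-agent: DuggMirror
Disallow: /

User-agent: *
Allow: /wp-content/plugins/public-widget/
Disallow: /wp-content/plugins/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

CHECKS = (
    ("DuggMirror", "/blog/"),
    ("Googlebot", "/blog/"),
    ("Googlebot", "/wp-content/plugins/some-plugin/readme.txt"),
    ("Googlebot", "/wp-content/plugins/public-widget/style.css"),
)

for agent, path in CHECKS:
    verdict = "allowed" if parser.can_fetch(agent, path) else "disallowed"
    print(f"{agent} -> {path}: {verdict}")
```

The blank line between the two groups matters: it separates the rules for the named bot from the rules that apply to all other crawlers.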

Configure robots.txt in WordPress manually

To make individual adjustments you can extend robots.txt in WordPress. Simply follow the steps below:

Step 1: First, create an empty file called ‘robots.txt’ in any text editor.

Step 2: Then upload this to the root directory of your domain.

Step 3: Now you can either edit the file via SFTP or upload a new text file.

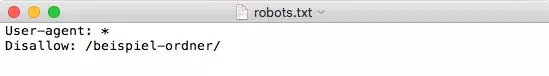

Using the commands above, you control which rules and exceptions apply to your website, for example to block access to a specific folder.
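A rule that blocks a single folder could look like the output of the following sketch. It is only an illustration under stated assumptions: the helper `render_robots_txt` and the folder name `private-folder` are hypothetical, and the file is written to a temporary local directory, so uploading it to the root directory of your domain remains a separate step.

```python
from pathlib import Path
from tempfile import TemporaryDirectory
from urllib.robotparser import RobotFileParser


def render_robots_txt(blocked_folders: list[str]) -> str:
    """Return robots.txt text that blocks the given folders for all crawlers."""
    lines = ["User-agent: *"]
    for folder in blocked_folders:
        # Normalise to a leading and trailing slash so that the rule covers
        # the folder and everything below it.
        lines.append(f"Disallow: /{folder.strip('/')}/")
    return "\n".join(lines) + "\n"


# 'private-folder' is a hypothetical folder name.
robots_txt = render_robots_txt(["private-folder"])
print(robots_txt)

# Create the file locally and read it back, as a stand-in for Step 1.
with TemporaryDirectory() as tmp:
    target = Path(tmp) / "robots.txt"
    target.write_text(robots_txt, encoding="utf-8")
    saved = target.read_text(encoding="utf-8")

# Confirm that the saved rules behave as intended.
parser = RobotFileParser()
parser.parse(saved.splitlines())
print(parser.can_fetch("*", "/private-folder/notes.html"))
print(parser.can_fetch("*", "/blog/"))
```

Generating the rules from a list keeps the trailing slashes consistent, which helps avoid accidentally blocking other paths that merely start with the same characters.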

Plugins for creating a robots.txt file in WordPress

You can also create and change the robots.txt file in WordPress using an SEO plugin. This is done in the dashboard, which makes it convenient and secure. A popular plugin for this purpose is Yoast SEO.

Step 1: First, install and activate the plugin.

Step 2: Enable the plugin to make advanced changes. To do this, head to ‘SEO’ > ‘Dashboard’ > ‘Features’ and click ‘Enabled’ under the ‘Advanced settings pages’ item.

Step 3: After enabling this option, make changes in the dashboard under ‘SEO’ > ‘Tools’ > ‘File editor’. Here, you can create a new robots.txt file in WordPress and edit it. The modifications take effect immediately.

How do you test changes?

Now that you have adjusted your site, set up rules and, at least in theory, blocked crawlers, how can you be sure that your changes work? The Google Search Console helps with that. In older versions of Search Console, you will find the ‘robots.txt tester’ on the left side under ‘Crawl’; newer versions provide a robots.txt report in the settings instead. In the tester, enter your pages and subpages and then see whether they can be found or are blocked. A green ‘allowed’ at the bottom right means that crawlers can access the page and take it into account; a red ‘disallowed’ means that the page is blocked for crawlers.
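A similar check can be run locally before the file goes live. The following is a minimal sketch, not a replacement for the Search Console: the helper `find_mismatches`, the rules and the expected results are all assumptions for illustration. It compares what you expect to be crawlable with what the rules actually permit, using Python’s standard-library parser.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(
    robots_txt: str,
    expectations: dict[str, bool],
    user_agent: str = "*",
) -> list[str]:
    """Return the paths whose crawl permission differs from what is expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        path
        for path, should_be_allowed in expectations.items()
        if parser.can_fetch(user_agent, path) != should_be_allowed
    ]


# Hypothetical rules under test.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /imprint/
"""

# True means the path should be crawlable, False means it should be blocked.
EXPECTED = {
    "/": True,
    "/imprint/": False,
    "/wp-admin/": False,
    "/wp-content/plugins/": False,  # RULES do not block this, so it is reported
}

mismatches = find_mismatches(RULES, EXPECTED)
print(mismatches)
```

An empty list means the rules match your expectations. To check a file that is already online, `RobotFileParser` also offers `set_url()` and `read()`, which fetch the robots.txt from a given address.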

Summary: robots.txt optimises and protects your website

The robots.txt file is as simple as it is effective for defining which areas of your WordPress site should be found and by whom. If you already use an SEO plugin like Yoast, applying the changes there is easiest. Otherwise, the file can be created and adjusted manually.
